Continuous Batching in Orca

Most discussions of continuous batching cite the Anyscale blog post, but the original Orca paper and talk give some extra details.

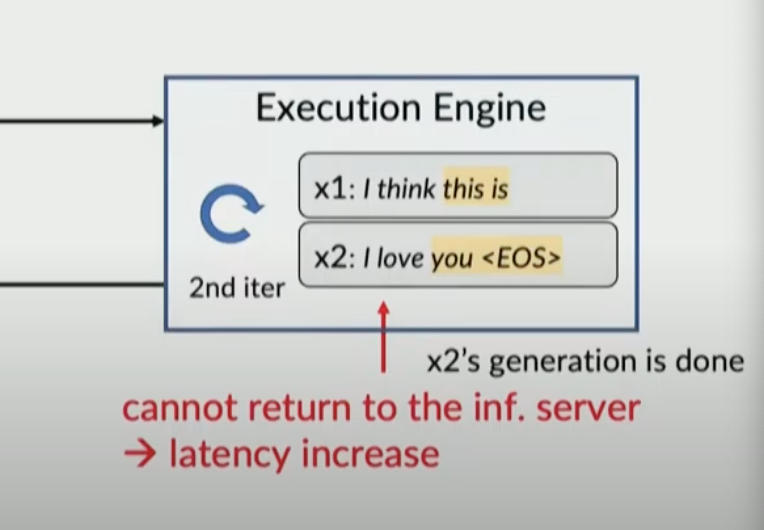

1 Request-level scheduling

Latency increases when a finished request cannot be returned because other requests in the same batch are still generating longer outputs.
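A minimal sketch of that effect, assuming each request needs a fixed number of decoding iterations and the whole batch is returned together. The function names and the example lengths are made up for illustration and do not come from the Orca paper.

```python
def request_level_latency(output_lengths):
    """Iterations each request waits when the batch returns as a unit.

    Under request-level scheduling, every request is held until the
    longest request in the batch has finished.
    """
    batch_done = max(output_lengths)
    return [batch_done for _ in output_lengths]


def wasted_iterations(output_lengths):
    """Iterations each request spends finished but not yet returned."""
    batch_done = max(output_lengths)
    return [batch_done - n for n in output_lengths]


lengths = [2, 5, 3]  # hypothetical output lengths for three requests
print(request_level_latency(lengths))  # [5, 5, 5]
print(wasted_iterations(lengths))      # [3, 0, 2]
```

The request that needs only 2 iterations waits 3 extra iterations for the one that needs 5.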

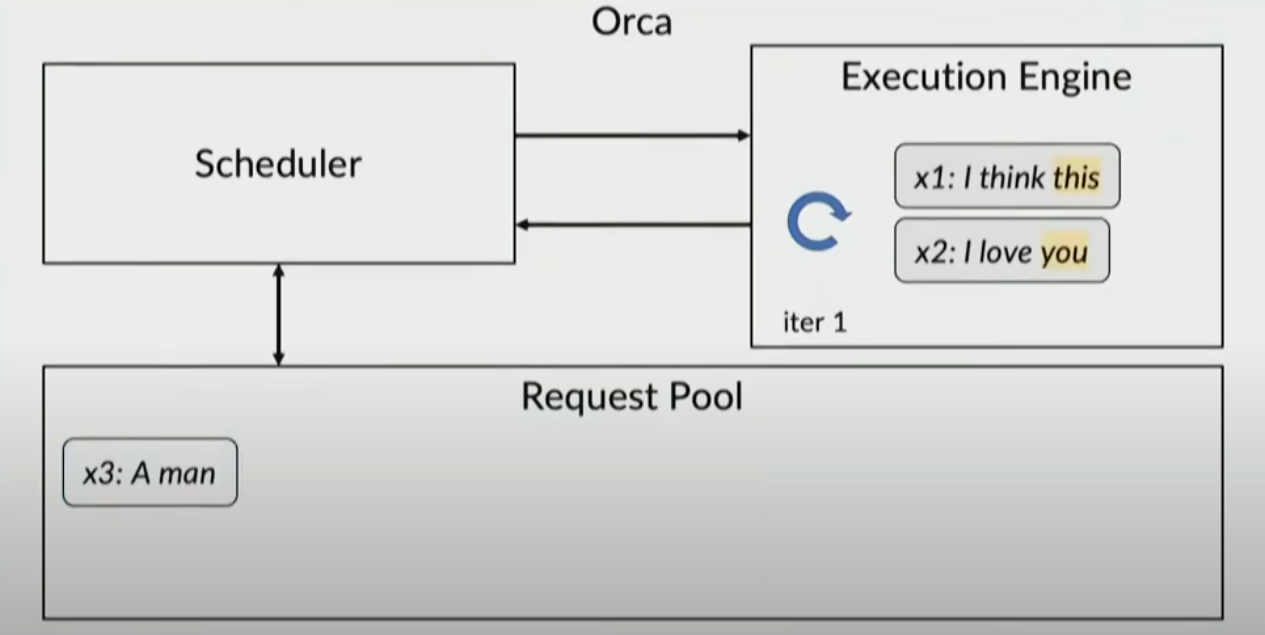

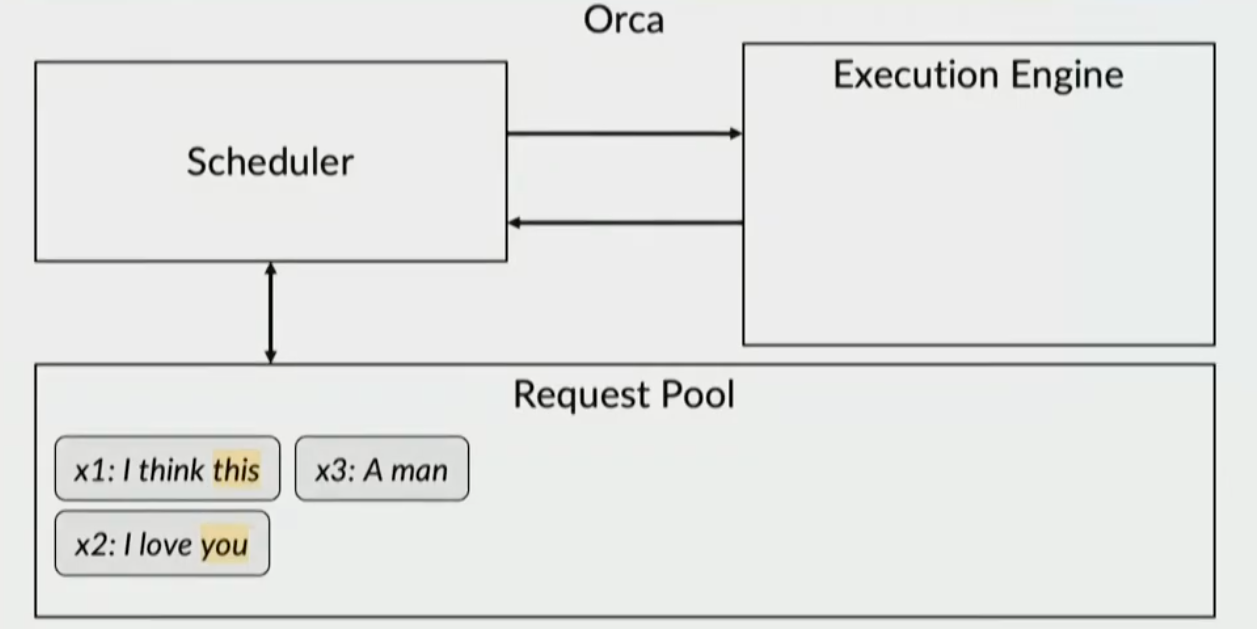

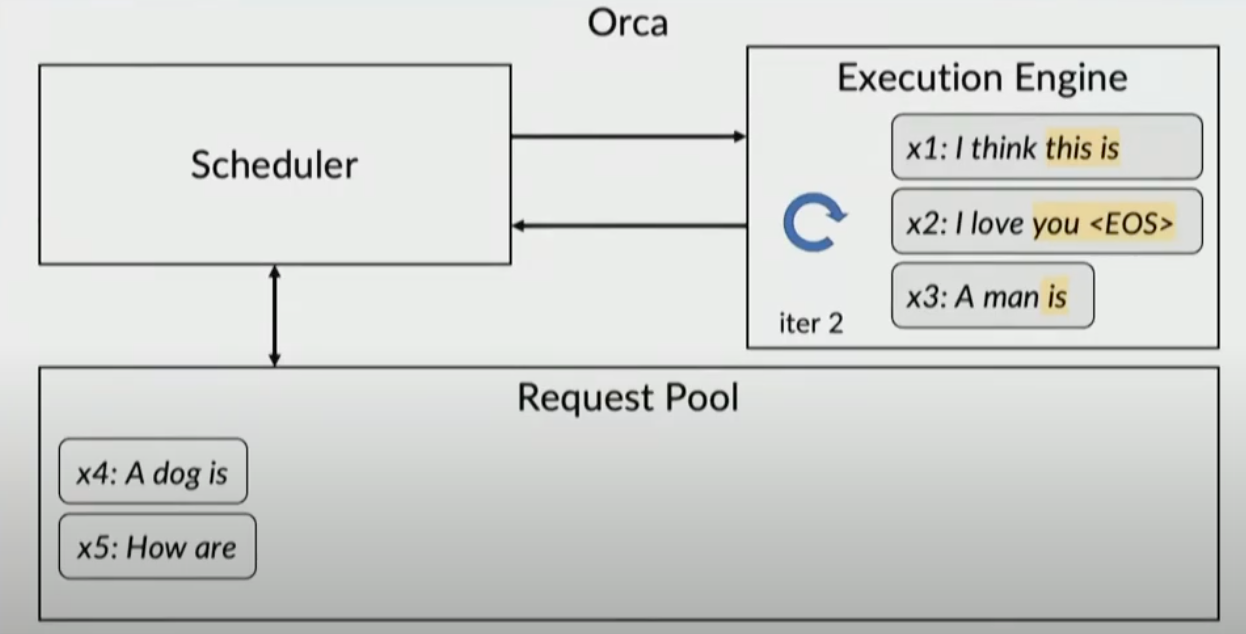

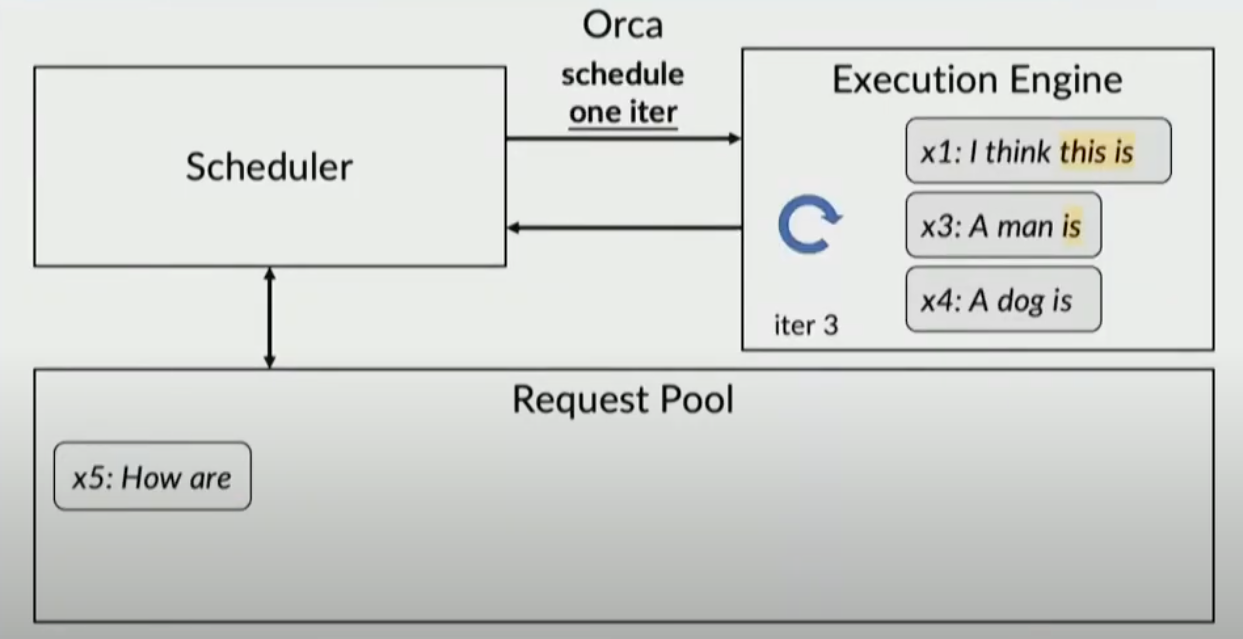

2 Iteration-level scheduling

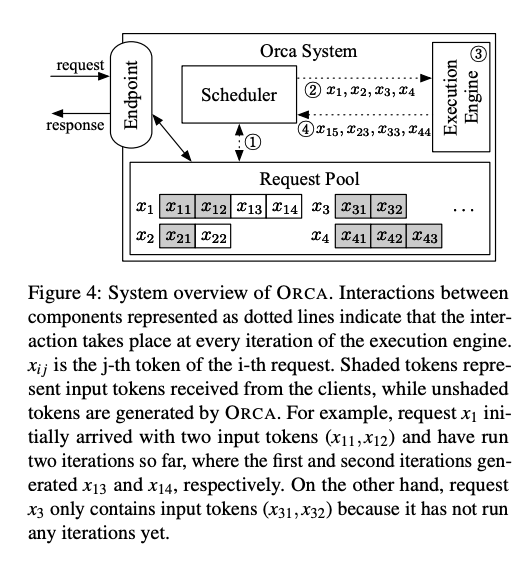

For the max_batch_size = 3 case:

- Process the requests currently in the execution engine (x1, x2) for one iteration

- Put all requests back in the request pool together with newly arrived requests (x1, x2, x3)

- Process the next iteration with the newly added request (x3) included

- Finished requests (x2) are returned as responses right away, which frees a slot for newly added requests (x4)
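The steps above can be sketched as a small simulation. This is a toy model, not Orca's scheduler: the function name, the `(name, arrival_iteration, iterations_needed)` tuple layout, and the example numbers are all assumptions, and it assumes `max_batch_size >= 1`.

```python
from collections import deque


def iteration_level_schedule(requests, max_batch_size):
    """Simulate iteration-level scheduling.

    requests: list of (name, arrival_iteration, iterations_needed).
    Returns {name: iteration count at which the response is returned}.
    """
    pending = deque(sorted(requests, key=lambda r: r[1]))
    running = {}   # name -> remaining iterations
    finished = {}
    it = 0
    while pending or running:
        # Before every iteration, admit arrived requests into free slots.
        while pending and pending[0][1] <= it and len(running) < max_batch_size:
            name, _, need = pending.popleft()
            running[name] = need
        if not running:
            it = pending[0][1]  # engine is idle until the next arrival
            continue
        # Run exactly one iteration for every request in the batch.
        it += 1
        for name in list(running):
            running[name] -= 1
            if running[name] == 0:
                finished[name] = it  # returned immediately, slot is freed
                del running[name]
    return finished


reqs = [("x1", 0, 4), ("x2", 0, 2), ("x3", 1, 3), ("x4", 1, 1)]
print(iteration_level_schedule(reqs, max_batch_size=3))
# {'x2': 2, 'x4': 3, 'x1': 4, 'x3': 4}
```

In this run x4 has to wait while the batch is full, and is admitted as soon as x2 finishes and frees its slot.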

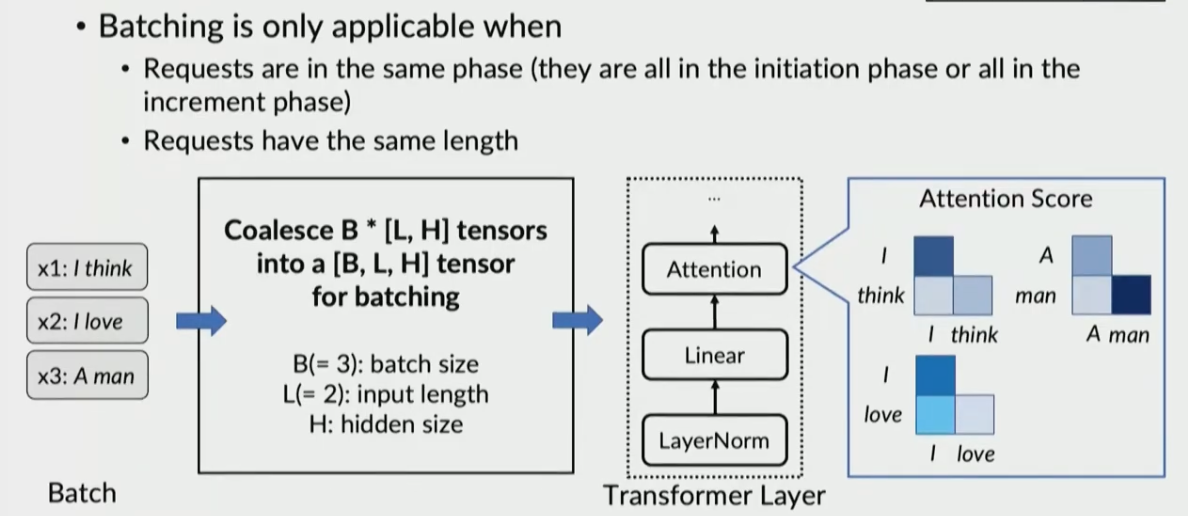

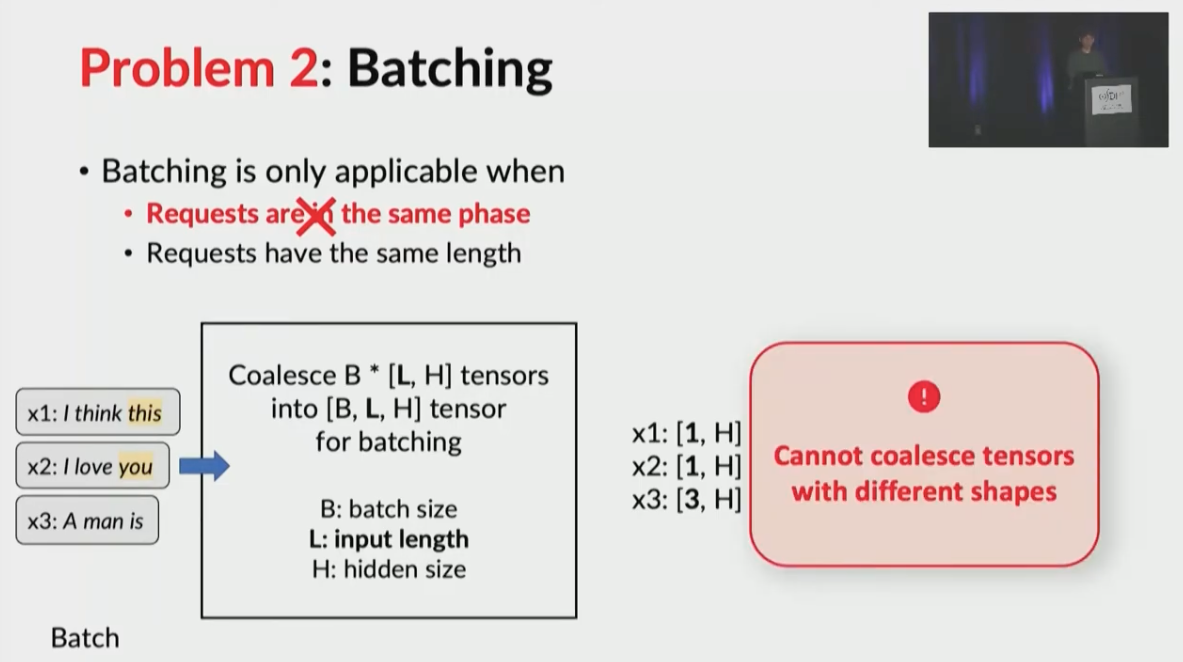

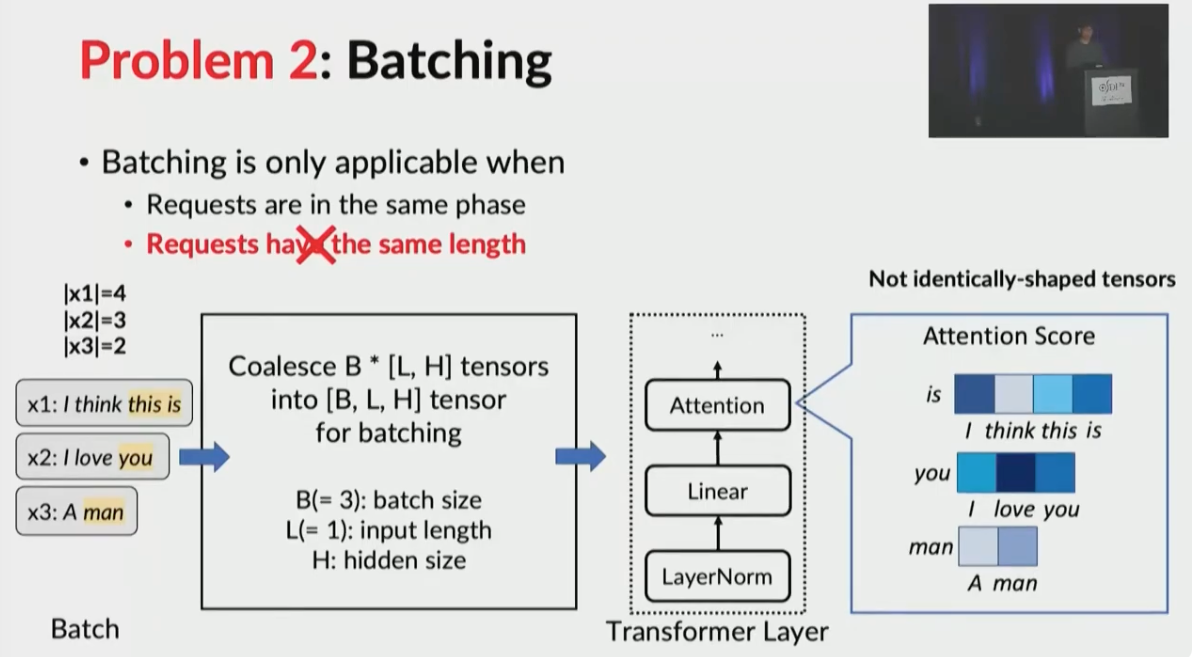

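3 Batching issues with iteration-level scheduling

Batching normally requires every request in the batch to have identically shaped inputs. Iteration-level scheduling breaks that assumption:

- Requests are NOT in the same phase (prefill vs. decode)

- Requests have different lengths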
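To make the mismatch concrete, here is a small sketch that lists what each request would feed to the model in one iteration. The dict fields `phase` and `context_len` (tokens seen so far, including the current input) are hypothetical names chosen for this example.

```python
def iteration_shapes(requests):
    """Return (input_tokens, attended_tokens) per request for one iteration.

    A prefill request feeds its whole prompt at once; a decode request
    feeds a single token but attends over its full context so far.
    """
    shapes = []
    for r in requests:
        if r["phase"] == "prefill":
            shapes.append((r["context_len"], r["context_len"]))
        else:
            shapes.append((1, r["context_len"]))
    return shapes


def can_stack(shapes):
    """A plain rectangular batch needs every request to share one shape."""
    return len(set(shapes)) == 1


batch = [
    {"phase": "prefill", "context_len": 4},
    {"phase": "decode", "context_len": 7},
    {"phase": "decode", "context_len": 3},
]
print(iteration_shapes(batch))             # [(4, 4), (1, 7), (1, 3)]
print(can_stack(iteration_shapes(batch)))  # False
```

Even the two decode requests cannot be stacked naively, because they attend over contexts of different lengths.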

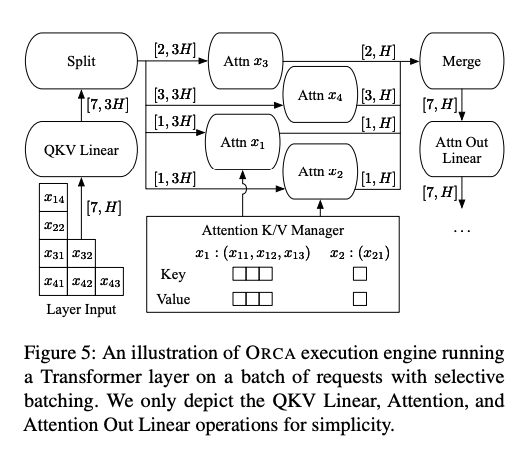

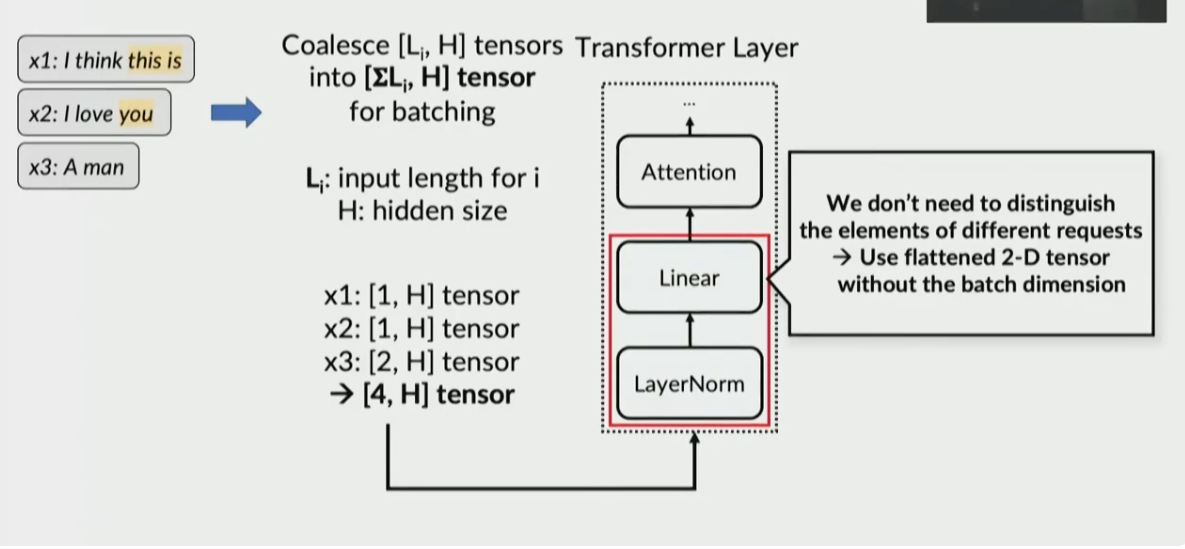

4 Selective batching

- For LayerNorm and Linear layers, we can concatenate the tokens of all requests into one flattened input and process them together

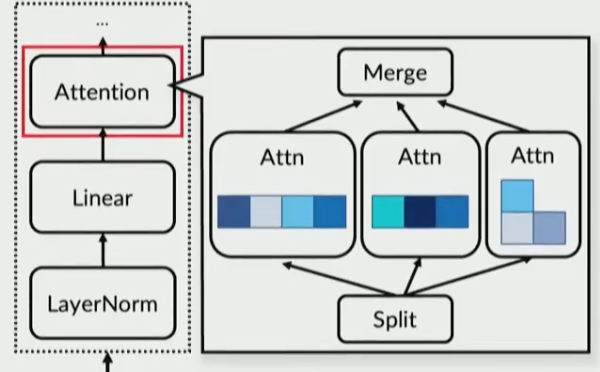

- For Attention, split the batch, process each request individually, and merge the output tensors
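The two bullets can be sketched in plain Python. This is a toy under loud assumptions: LayerNorm has no learned parameters, attention is single-head with Q = K = V and no learned weights, and there is no KV cache, so each request is represented by its full token sequence. All function names are made up for this example.

```python
import math


def layer_norm(vec, eps=1e-5):
    """Token-wise op: normalizes one token vector, independent of the others."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def causal_self_attention(tokens):
    """Toy attention over ONE request: token i attends to tokens 0..i."""
    scale = 1.0 / math.sqrt(len(tokens[0]))
    out = []
    for i, q in enumerate(tokens):
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in tokens[: i + 1]]
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        out.append([
            sum(w * v[d] for w, v in zip(weights, tokens)) / total
            for d in range(len(q))
        ])
    return out


def selective_batch_step(requests):
    """requests: list of requests, each a list of token vectors."""
    lengths = [len(r) for r in requests]
    # 1. Concatenate all tokens into one flat [sum(lengths), H] input
    #    and run the token-wise op once over everything.
    flat = [layer_norm(tok) for r in requests for tok in r]
    # 2. Split per request for attention, then merge the outputs again.
    merged, start = [], 0
    for n in lengths:
        merged.extend(causal_self_attention(flat[start:start + n]))
        start += n
    return merged, lengths
```

Because the token-wise op never mixes tokens and attention runs per request, the merged output matches what you get by processing each request on its own, whatever the mix of lengths.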

5 Orca

Orca is the system that implements these two features. For continuous batching:

For selective batching:
