Flow and Diffusion models Part 4 - Classifier-Free Guidance

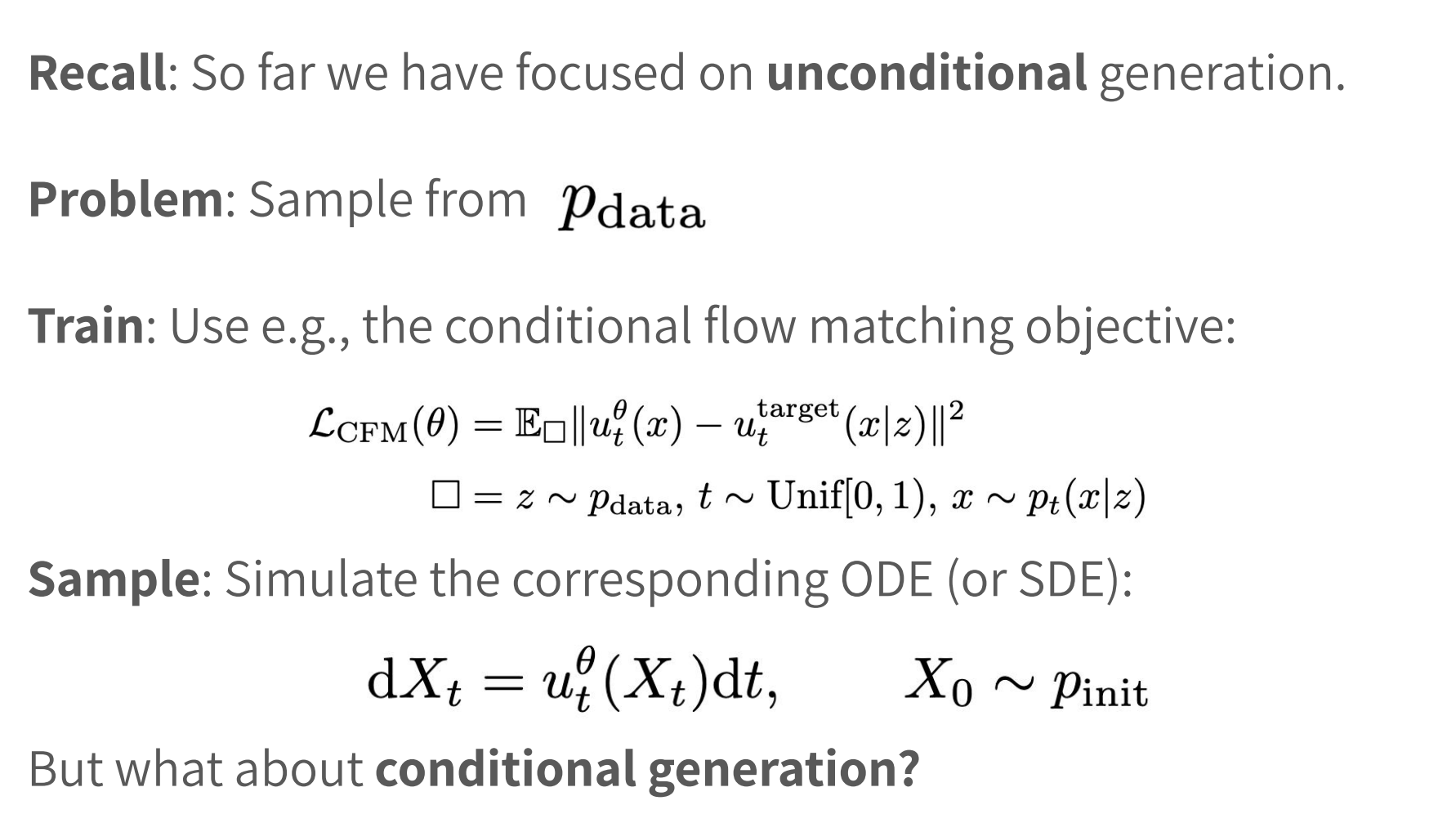

1 Guided Modeling

Going from unconditional to conditional generation amounts to conditioning every formula on a label $y$. To avoid confusion with the conditional probability paths and vector fields used so far, we call this "guided" generation instead, and the task now is to find its loss function.

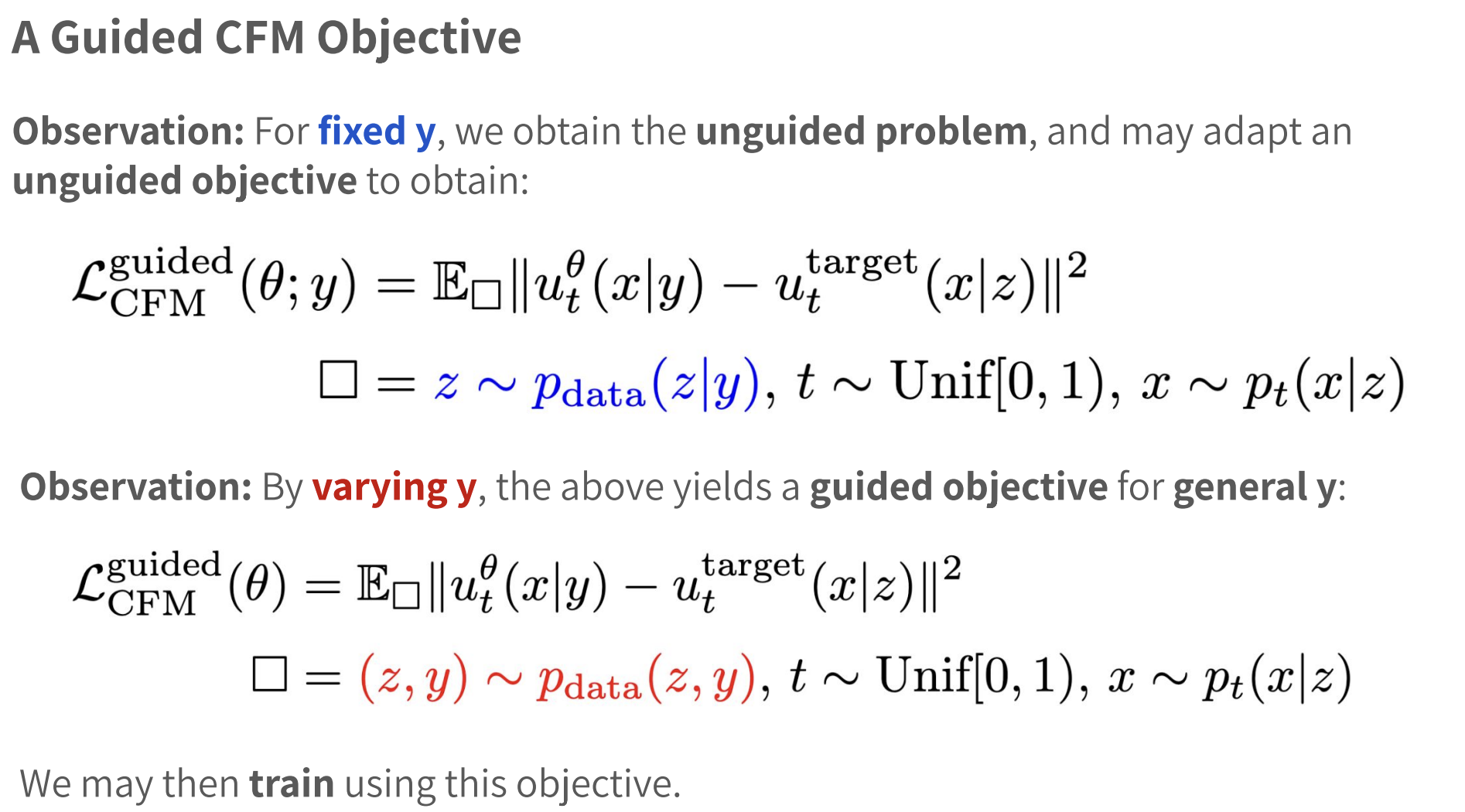

For a fixed $y$, the unguided formulas can be reused unchanged; letting $y$ vary, by sampling each data point together with its label, then gives the guided version in terms of conditional probabilities.
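A minimal sketch of the resulting guided CFM loss, assuming it takes the form $\mathbb{E}_{(z,y),\,t,\,x\sim p_t(x|z)}\big[\|u_t^\theta(x|y) - u_t(x|z)\|^2\big]$: the label only enters as an extra model input, while the regression target is the unchanged conditional vector field. The 1-D toy data (`MU`), the CondOT path $p_t(x|z) = \mathcal{N}(tz, (1-t)^2)$, and the function names are hypothetical choices for illustration, not the notes' own setup.

```python
import random

# Hypothetical toy data (assumption): label y in {0, 1}, and every data point z
# with label y sits exactly at MU[y].
MU = {0: -2.0, 1: 3.0}


def sample_data(rng):
    """Draw a pair (z, y) jointly from the toy data distribution."""
    y = rng.choice(list(MU))
    return MU[y], y


def guided_cfm_loss(u_theta, n_samples=1000, seed=0):
    """Monte Carlo estimate of E[(u_theta(x, t, y) - u_t(x|z))^2] for the
    CondOT path p_t(x|z) = N(t z, (1 - t)^2) in one dimension."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        z, y = sample_data(rng)        # y is only passed to the model
        t = rng.random()               # t ~ Uniform[0, 1)
        eps = rng.gauss(0.0, 1.0)
        x = t * z + (1.0 - t) * eps    # x ~ p_t(x|z)
        target = (z - x) / (1.0 - t)   # conditional vector field u_t(x|z)
        total += (u_theta(x, t, y) - target) ** 2
    return total / n_samples


def perfect_model(x, t, y):
    # In this toy, z is determined by y, so the guided field equals the target.
    return (MU[y] - x) / (1.0 - t)


print(guided_cfm_loss(perfect_model), guided_cfm_loss(lambda x, t, y: 0.0))
```

Because each label maps to a single point here, the ideal guided model reaches zero loss; with real data the minimum is positive, but the minimizer is still the guided marginal field.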

2 Classifier Guidance

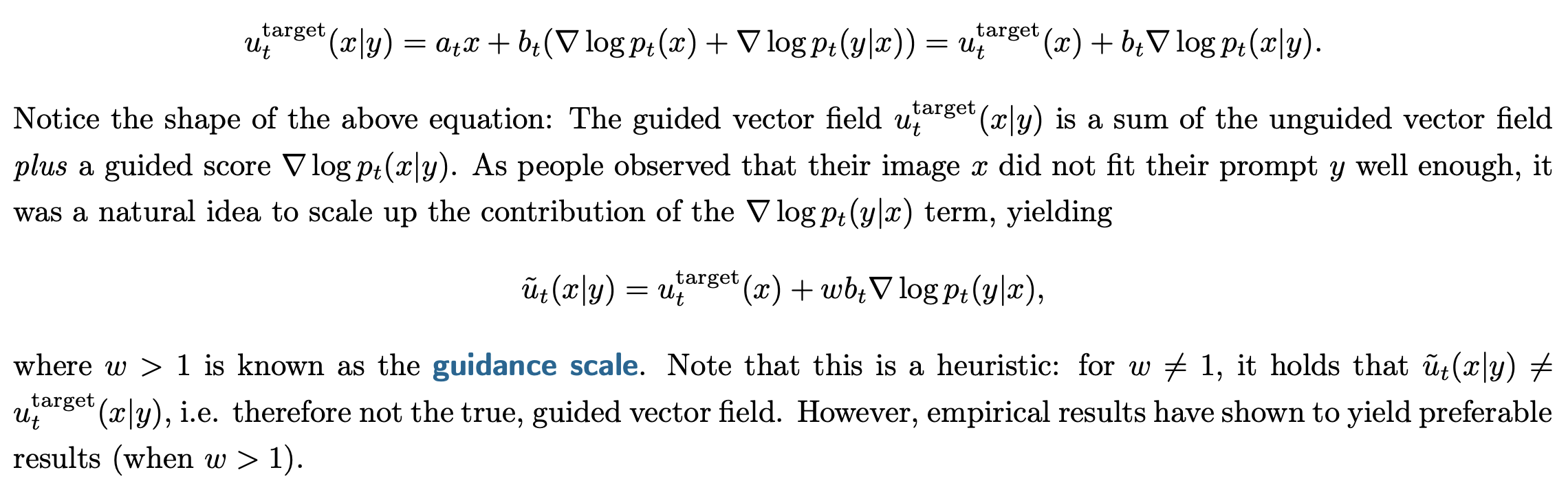

It was soon observed empirically that images sampled with this procedure do not fit the desired label $y$ closely enough. Perceptual quality improves when the effect of the guidance variable $y$ is artificially reinforced. Here is how we can strengthen the effect of $y$.

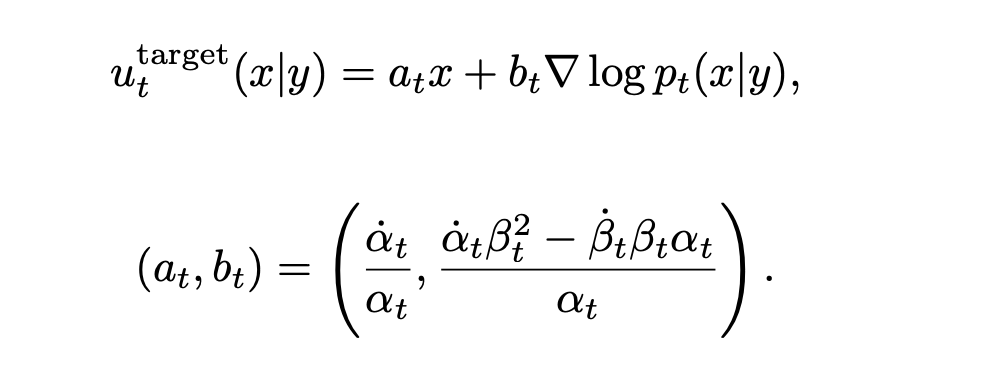

First, recall the relationship between the vector field and the score function for a Gaussian conditional probability path.
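For a Gaussian path $p_t(x|z) = \mathcal{N}(\alpha_t z, \beta_t^2 I)$ the conditional vector field can be written as $u_t(x|z) = a_t x + b_t \nabla \log p_t(x|z)$ with $a_t = \dot\alpha_t/\alpha_t$ and $b_t = \beta_t^2 \dot\alpha_t/\alpha_t - \dot\beta_t \beta_t$; since the relation is linear, the same coefficients carry over to the marginal and guided quantities. The sketch below checks this numerically in 1-D, assuming the CondOT schedule $\alpha_t = t$, $\beta_t = 1 - t$ (an illustrative choice; the function names are hypothetical).

```python
# Assumed schedule (CondOT): alpha_t = t, beta_t = 1 - t, valid for 0 < t < 1.
def schedule(t):
    return t, 1.0 - t, 1.0, -1.0  # alpha, beta, d(alpha)/dt, d(beta)/dt


def coefficients(t):
    """a_t, b_t such that u_t(x|z) = a_t * x + b_t * grad log p_t(x|z)."""
    alpha, beta, d_alpha, d_beta = schedule(t)
    a_t = d_alpha / alpha
    b_t = beta ** 2 * d_alpha / alpha - d_beta * beta
    return a_t, b_t


def conditional_vector_field(x, z, t):
    """u_t(x|z) for the Gaussian path p_t(x|z) = N(alpha_t z, beta_t^2)."""
    alpha, beta, d_alpha, d_beta = schedule(t)
    return d_beta / beta * (x - alpha * z) + d_alpha * z


def conditional_score(x, z, t):
    """grad_x log N(x; alpha_t z, beta_t^2)."""
    alpha, beta, _, _ = schedule(t)
    return -(x - alpha * z) / beta ** 2


x, z, t = 0.3, 2.0, 0.4
a_t, b_t = coefficients(t)
print(conditional_vector_field(x, z, t), a_t * x + b_t * conditional_score(x, z, t))
```

For CondOT this gives $a_t = 1/t$ and $b_t = (1-t)/t$, so both printed values agree.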

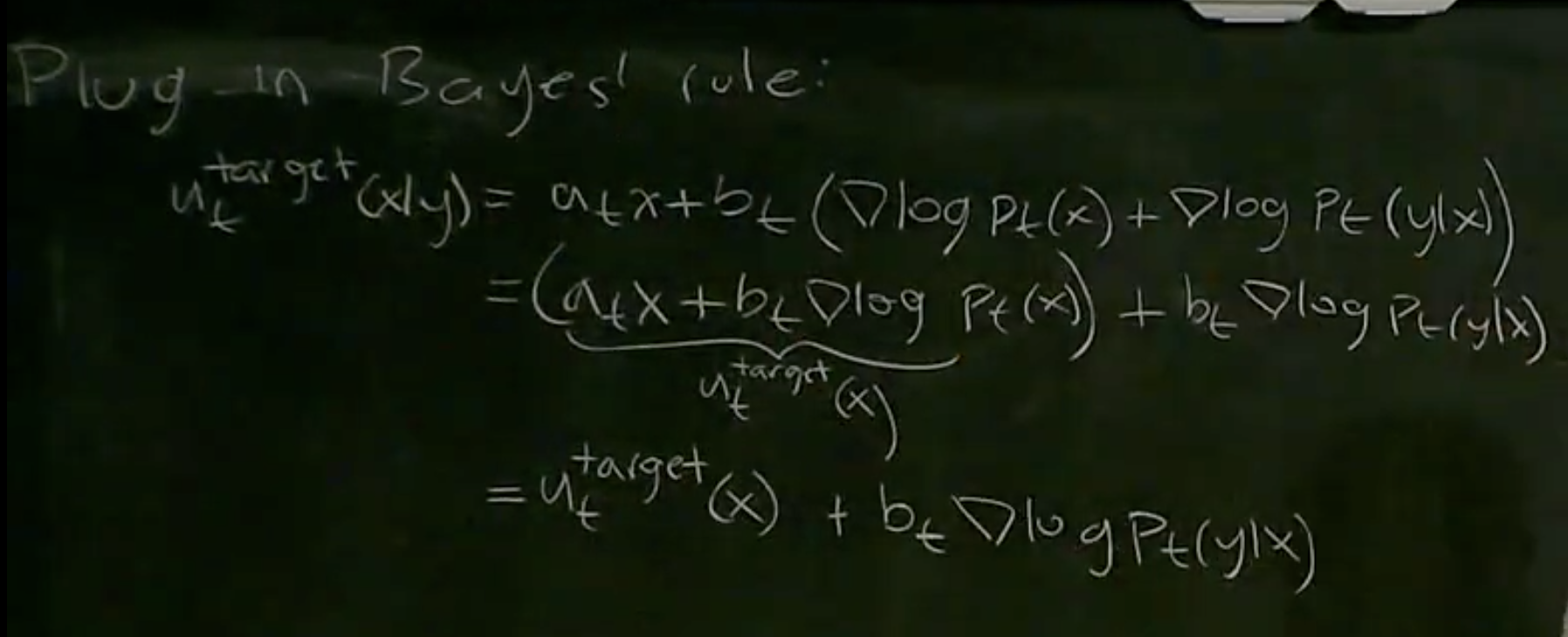

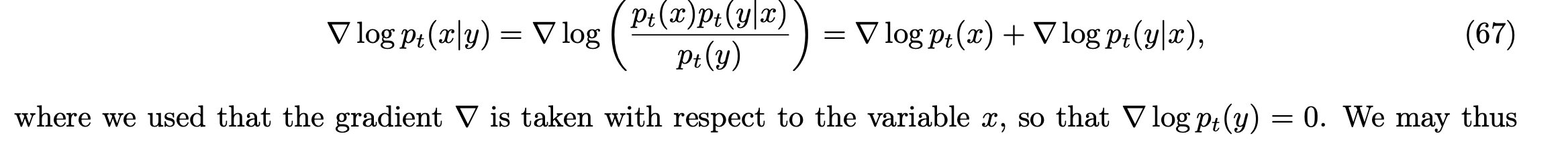

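Plugging in Bayes' rule $p_t(x|y) = \frac{p_t(y|x)\,p_t(x)}{p_t(y)}$ and noting that the gradient is taken with respect to $x$ only, so that $\nabla \log p_t(y) = 0$, we get

$$\nabla \log p_t(x|y) = \nabla \log p_t(x) + \nabla \log p_t(y|x).$$

Here $\nabla \log p_t(y|x)$ is the gradient of a classifier $p_t(y|x)$ that predicts the label from the noisy input $x$.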
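The decomposition $\nabla \log p_t(x|y) = \nabla \log p_t(x) + \nabla \log p_t(y|x)$ can be checked numerically on a toy problem. The sketch below assumes two equally likely classes whose data sit at single points `MU[y]`, the CondOT path so that $p_t(x|y) = \mathcal{N}(t\,\mu_y, (1-t)^2)$, and finite differences in $x$; all names are hypothetical.

```python
import math

# Toy setup (assumption): labels y in {0, 1} with equal prior, class y's data sits
# at MU[y], and p_t(x|y) = N(t * MU[y], (1 - t)^2).
MU = {0: -2.0, 1: 3.0}
PRIOR = {0: 0.5, 1: 0.5}


def log_normal(x, mean, std):
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2.0 * math.pi))


def log_p_x_given_y(x, y, t):
    return log_normal(x, t * MU[y], 1.0 - t)


def log_p_x(x, t):
    # log sum_y p(y) p_t(x|y), using log-sum-exp for numerical stability
    terms = [math.log(PRIOR[y]) + log_p_x_given_y(x, y, t) for y in MU]
    top = max(terms)
    return top + math.log(sum(math.exp(v - top) for v in terms))


def log_p_y_given_x(y, x, t):
    # Bayes' rule: the "classifier" p_t(y|x) = p_t(x|y) p(y) / p_t(x)
    return log_p_x_given_y(x, y, t) + math.log(PRIOR[y]) - log_p_x(x, t)


def grad_x(f, x, h=1e-5):
    # central finite difference, differentiating with respect to x only
    return (f(x + h) - f(x - h)) / (2.0 * h)


def check_decomposition(x, y, t):
    """Return (grad log p_t(x|y), grad log p_t(x) + grad log p_t(y|x))."""
    lhs = grad_x(lambda u: log_p_x_given_y(u, y, t), x)
    rhs = grad_x(lambda u: log_p_x(u, t), x) + grad_x(lambda u: log_p_y_given_x(y, u, t), x)
    return lhs, rhs


print(check_decomposition(0.7, 1, 0.4))
```

The prior term $\log p(y)$ is constant in $x$, so it drops out of the gradient exactly as in the derivation.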

Early works actually trained such a classifier, which leads to the classifier guidance method.
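Classifier guidance with a scale $w$ can be sketched as follows, assuming the guided field takes the form $\tilde u_t(x|y) = u_t(x) + w\, b_t \nabla \log p_t(y|x)$, with $b_t = (1-t)/t$ for the CondOT path. Instead of a trained classifier, this hypothetical toy uses the exact $p_t(y|x)$ of two point-mass classes, and takes its gradient through the log-softmax of the class log-likelihoods.

```python
import math

# Toy setup (assumption): labels y in {0, 1} with equal prior, class y's data sits at
# MU[y], and the CondOT path, so p_t(x|y) = N(t * MU[y], (1 - t)^2) for 0 < t < 1.
MU = {0: -2.0, 1: 3.0}


def class_logit_grads(x, t):
    """d/dx of each class log-likelihood log N(x; t * MU[y], (1 - t)^2)."""
    return {y: -(x - t * m) / (1.0 - t) ** 2 for y, m in MU.items()}


def classifier(x, t):
    """Exact p_t(y|x): softmax of the class log-likelihoods (equal priors cancel)."""
    logits = {y: -0.5 * ((x - t * m) / (1.0 - t)) ** 2 for y, m in MU.items()}
    top = max(logits.values())
    weights = {y: math.exp(v - top) for y, v in logits.items()}
    norm = sum(weights.values())
    return {y: v / norm for y, v in weights.items()}


def classifier_grad(x, y, t):
    """grad_x log p_t(y|x) = grad logit_y - sum_k p_t(k|x) grad logit_k."""
    g, r = class_logit_grads(x, t), classifier(x, t)
    return g[y] - sum(r[k] * g[k] for k in MU)


def unguided_field(x, t):
    """Marginal u_t(x) = sum_y p_t(y|x) u_t(x|y), with u_t(x|y) = (MU[y] - x) / (1 - t)."""
    r = classifier(x, t)
    return sum(r[y] * (MU[y] - x) for y in MU) / (1.0 - t)


def classifier_guided_field(x, y, t, w):
    """u_t(x) + w * b_t * grad log p_t(y|x), with b_t = (1 - t) / t for CondOT."""
    b_t = (1.0 - t) / t
    return unguided_field(x, t) + w * b_t * classifier_grad(x, y, t)


for w in (0.0, 1.0, 4.0):
    print(w, classifier_guided_field(0.7, 1, 0.5, w))
```

With $w = 0$ this is the unguided field, $w = 1$ recovers the plain guided field $u_t(x|y)$, and $w > 1$ is the artificial reinforcement described above.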

3 Classifier-Free Guidance

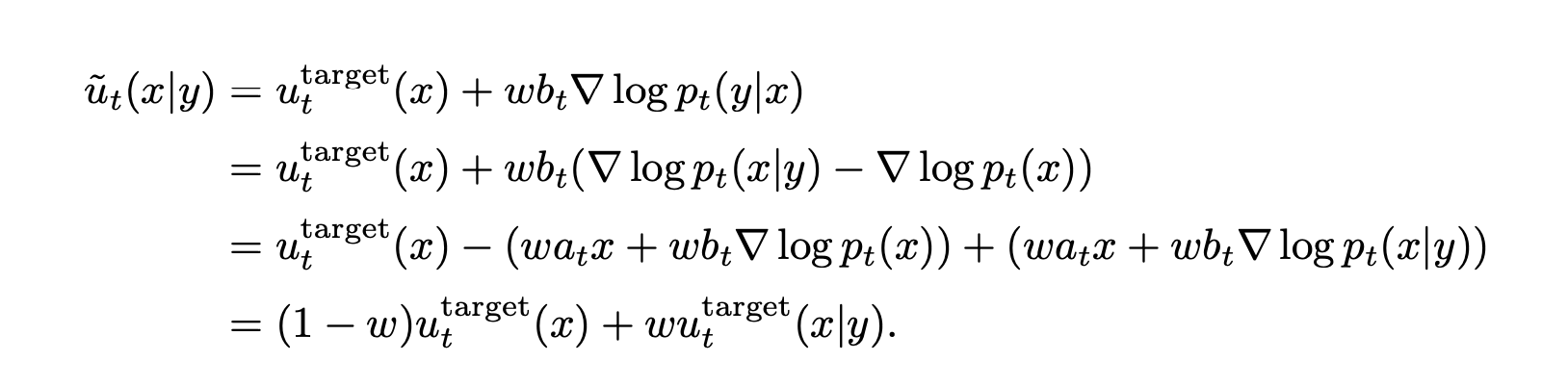

The key step toward a classifier-free formula is to apply Bayes' rule the other way around: $\nabla \log p_t(y|x) = \nabla \log p_t(x|y) - \nabla \log p_t(x)$.

Substituting this into the classifier guidance formula, a little algebra removes the classifier term entirely.
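The algebra can be written out as follows, assuming the classifier guidance form $\tilde u_t(x|y) = u_t(x) + w\, b_t \nabla \log p_t(y|x)$ and the Gaussian-path relations $u_t(x) = a_t x + b_t \nabla \log p_t(x)$ and $u_t(x|y) = a_t x + b_t \nabla \log p_t(x|y)$ with the same $a_t, b_t$:

$$
\begin{aligned}
\tilde u_t(x|y) &= u_t(x) + w\, b_t \nabla \log p_t(y|x) \\
&= u_t(x) + w\, b_t \big(\nabla \log p_t(x|y) - \nabla \log p_t(x)\big) \\
&= u_t(x) + w \big( [a_t x + b_t \nabla \log p_t(x|y)] - [a_t x + b_t \nabla \log p_t(x)] \big) \\
&= u_t(x) + w \big( u_t(x|y) - u_t(x) \big) \\
&= (1 - w)\, u_t(x) + w\, u_t(x|y)
\end{aligned}
$$

Only the unguided field $u_t(x)$ and the guided field $u_t(x|y)$ remain, and both can be learned by flow matching, so no classifier is needed. Setting $w = 1$ gives back $u_t(x|y)$, while $w > 1$ extrapolates away from the unguided field.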

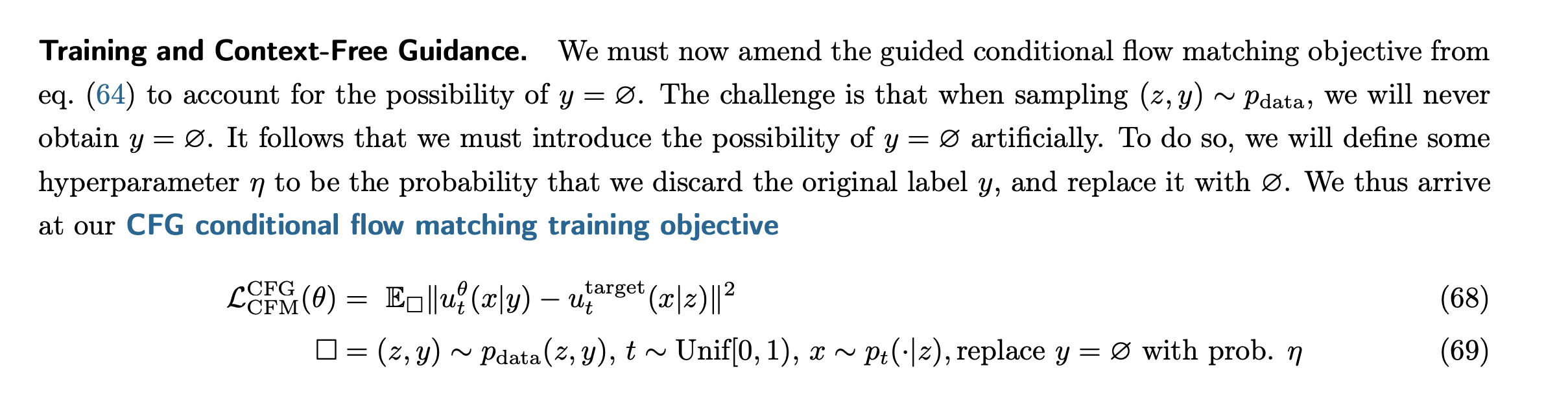

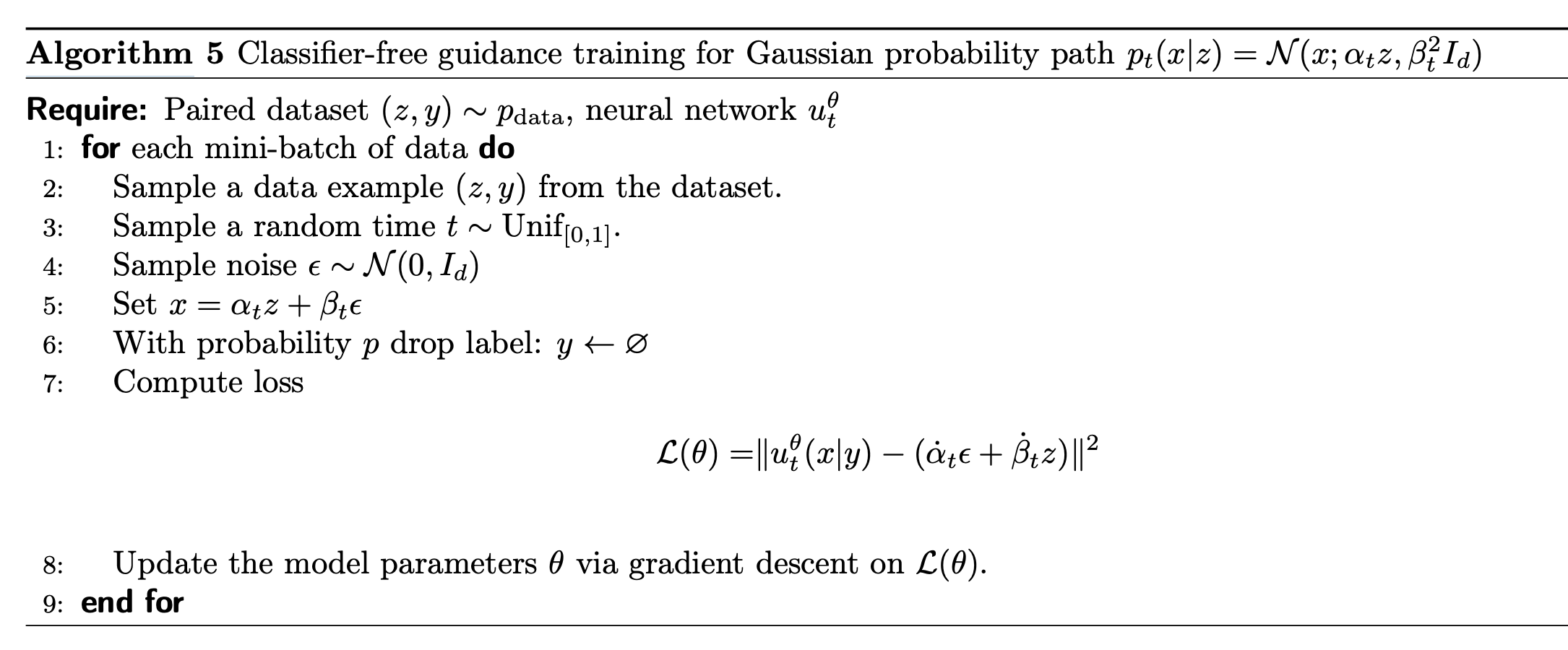

In practice, instead of training two separate models for $u_t(x)$ and $u_t(x|y)$, we can merge them into a single model by introducing an extra "nothing" class $\varnothing$, so that the unguided field is simply $u_t(x) = u_t(x|\varnothing)$.
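Sampling with the single model can be sketched as follows: the network is queried once with the real label and once with the null class, the two outputs are combined as $(1-w)\,u_t(x|\varnothing) + w\,u_t(x|y)$, and the result is integrated with Euler steps from $t = 0$ to $t = 1$. `u_model` is a hypothetical stand-in for a trained network (here it returns the exact toy fields), and `NULL = None` is an assumed encoding of $\varnothing$.

```python
import math

MU = {0: -2.0, 1: 3.0}  # hypothetical toy data: class y sits at MU[y]
NULL = None              # hypothetical encoding of the "nothing" class


def u_model(x, t, y):
    """Stand-in for one trained network u_theta(x, t, y) (assumption: exact toy
    fields). y = NULL gives the unguided field, any other y the guided one."""
    s = 1.0 - t
    if y is NULL:
        logits = {k: -0.5 * ((x - t * m) / s) ** 2 for k, m in MU.items()}
        top = max(logits.values())
        weights = {k: math.exp(v - top) for k, v in logits.items()}
        norm = sum(weights.values())
        return sum(weights[k] / norm * (MU[k] - x) for k in MU) / s
    return (MU[y] - x) / s


def cfg_field(x, t, y, w):
    """Classifier-free guidance: (1 - w) * u(x|NULL) + w * u(x|y) from ONE model."""
    return (1.0 - w) * u_model(x, t, NULL) + w * u_model(x, t, y)


def sample_cfg(x0, y, w, n_steps=100):
    """Euler integration of dx/dt = cfg_field(x, t, y, w) from t = 0 to t = 1."""
    x, h = x0, 1.0 / n_steps
    for k in range(n_steps):
        x = x + h * cfg_field(x, k * h, y, w)
    return x


for w in (1.0, 3.0):
    print(w, sample_cfg(0.3, 1, w))
```

With $w = 1$ the combination reduces to the guided field, and in this toy the Euler steps then land on `MU[y]` up to rounding; other values of $w$ change where the samples end up.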

Here is a summary of the training procedure. It is the conditional flow matching (CFM) training derived before, except that the label $y$ is randomly replaced by the null class during training.
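One training batch with label dropout can be sketched as below: each example keeps its true $z$ for the regression target, but its label is replaced by the null class with probability `eta`, so the same network learns both the guided and the unguided field. The drop probability, the toy data, the CondOT path, and all names are assumptions for illustration.

```python
import random

MU = {0: -2.0, 1: 3.0}  # hypothetical toy data: class y sits at MU[y]
NULL = None              # hypothetical encoding of the "nothing" class


def cfg_training_example(rng, eta=0.1):
    """One CFM training example with label dropout (eta is an assumed drop rate).

    Returns model inputs (x, t, y) and the regression target u_t(x|z) for the
    CondOT path x = t * z + (1 - t) * eps.
    """
    y = rng.choice(list(MU))
    z = MU[y]
    if rng.random() < eta:
        y = NULL                      # this example trains the unguided field
    t = rng.random()
    eps = rng.gauss(0.0, 1.0)
    x = t * z + (1.0 - t) * eps
    target = (z - x) / (1.0 - t)      # equals z - eps up to rounding
    return x, t, y, target


def cfg_loss(u_theta, batch_size=256, eta=0.1, seed=0):
    """Mean squared error between u_theta(x, t, y) and the CFM target over one batch."""
    rng = random.Random(seed)
    batch = [cfg_training_example(rng, eta) for _ in range(batch_size)]
    return sum((u_theta(x, t, y) - target) ** 2 for x, t, y, target in batch) / batch_size


print(cfg_loss(lambda x, t, y: 0.0))
```

In a real setup this loss would be minimized by gradient descent over the network parameters; only the label dropout distinguishes it from plain guided CFM training.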

4 CFG for Diffusion Process

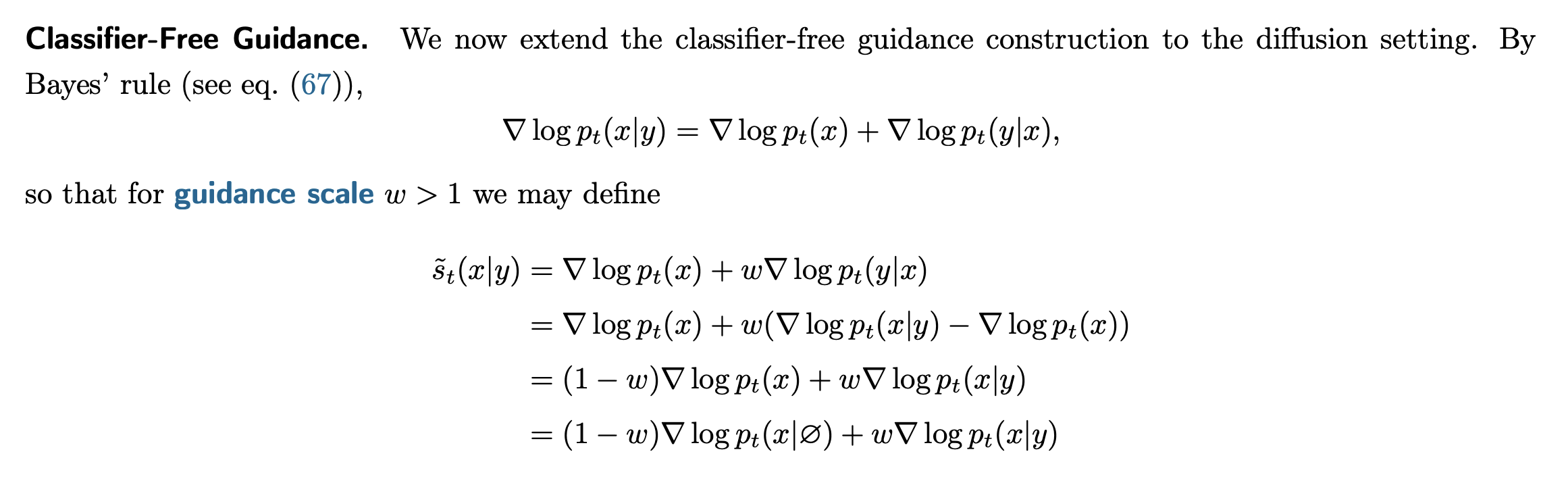

The derivation for score matching and the diffusion process is even simpler: we work directly with Bayes' rule on the score.
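Spelled out, the Bayes-rule argument is short, assuming the guided score $\tilde s_t(x|y)$ is defined by reinforcing the classifier term with a scale $w$, just as in classifier guidance:

$$
\begin{aligned}
\nabla \log p_t(x|y) &= \nabla \log p_t(x) + \nabla \log p_t(y|x), \\
\tilde s_t(x|y) &= \nabla \log p_t(x) + w\, \nabla \log p_t(y|x) \\
&= \nabla \log p_t(x) + w \big( \nabla \log p_t(x|y) - \nabla \log p_t(x) \big) \\
&= (1 - w)\, \nabla \log p_t(x) + w\, \nabla \log p_t(x|y)
\end{aligned}
$$

This mirrors the vector-field result with $u_t$ replaced by the score, and no $a_t$, $b_t$ bookkeeping is needed.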

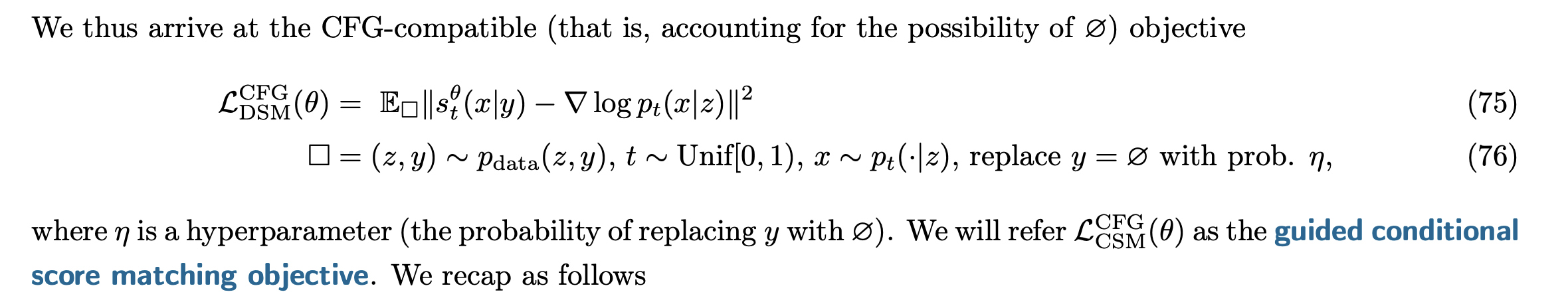

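So the CFG score for diffusion models is

$$\tilde s_t(x|y) = (1 - w)\, \nabla \log p_t(x) + w\, \nabla \log p_t(x|y) \approx (1 - w)\, s_t^\theta(x|\varnothing) + w\, s_t^\theta(x|y),$$

where $w$ is the guidance scale and $s_t^\theta$ is a single score network trained with the null class $\varnothing$.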
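The CFG score can be sketched with one score network queried twice, once with the label and once with the null class. `score_model` is a hypothetical stand-in that returns the exact scores of the two-point toy problem (CondOT path, equal priors), and `NULL = None` is an assumed encoding of $\varnothing$; in a sampler, `cfg_score` would replace the learned score wherever the diffusion SDE uses it.

```python
import math

# Toy setup (assumption): two classes with equal prior, class y's data at MU[y], and
# p_t(x|y) = N(t * MU[y], (1 - t)^2). NULL is a hypothetical encoding of the null class.
MU = {0: -2.0, 1: 3.0}
NULL = None


def class_scores(x, t):
    """grad_x log p_t(x|y) for every class y."""
    return {y: -(x - t * m) / (1.0 - t) ** 2 for y, m in MU.items()}


def posterior(x, t):
    """p_t(y|x) via a numerically stable softmax over class log-likelihoods."""
    logits = {y: -0.5 * ((x - t * m) / (1.0 - t)) ** 2 for y, m in MU.items()}
    top = max(logits.values())
    weights = {y: math.exp(v - top) for y, v in logits.items()}
    norm = sum(weights.values())
    return {y: v / norm for y, v in weights.items()}


def score_model(x, t, y):
    """Stand-in for one trained network s_theta(x, t, y) (exact toy scores).
    y = NULL returns grad log p_t(x) = sum_y p_t(y|x) grad log p_t(x|y)."""
    s = class_scores(x, t)
    if y is NULL:
        r = posterior(x, t)
        return sum(r[k] * s[k] for k in MU)
    return s[y]


def cfg_score(x, t, y, w):
    """CFG score: (1 - w) * s(x|NULL) + w * s(x|y)."""
    return (1.0 - w) * score_model(x, t, NULL) + w * score_model(x, t, y)


for w in (0.0, 1.0, 3.0):
    print(w, cfg_score(0.7, 0.5, 1, w))
```

The combination equals $\nabla \log p_t(x) + w\,\nabla \log p_t(y|x)$, i.e. classifier guidance on the score, without ever evaluating a classifier.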