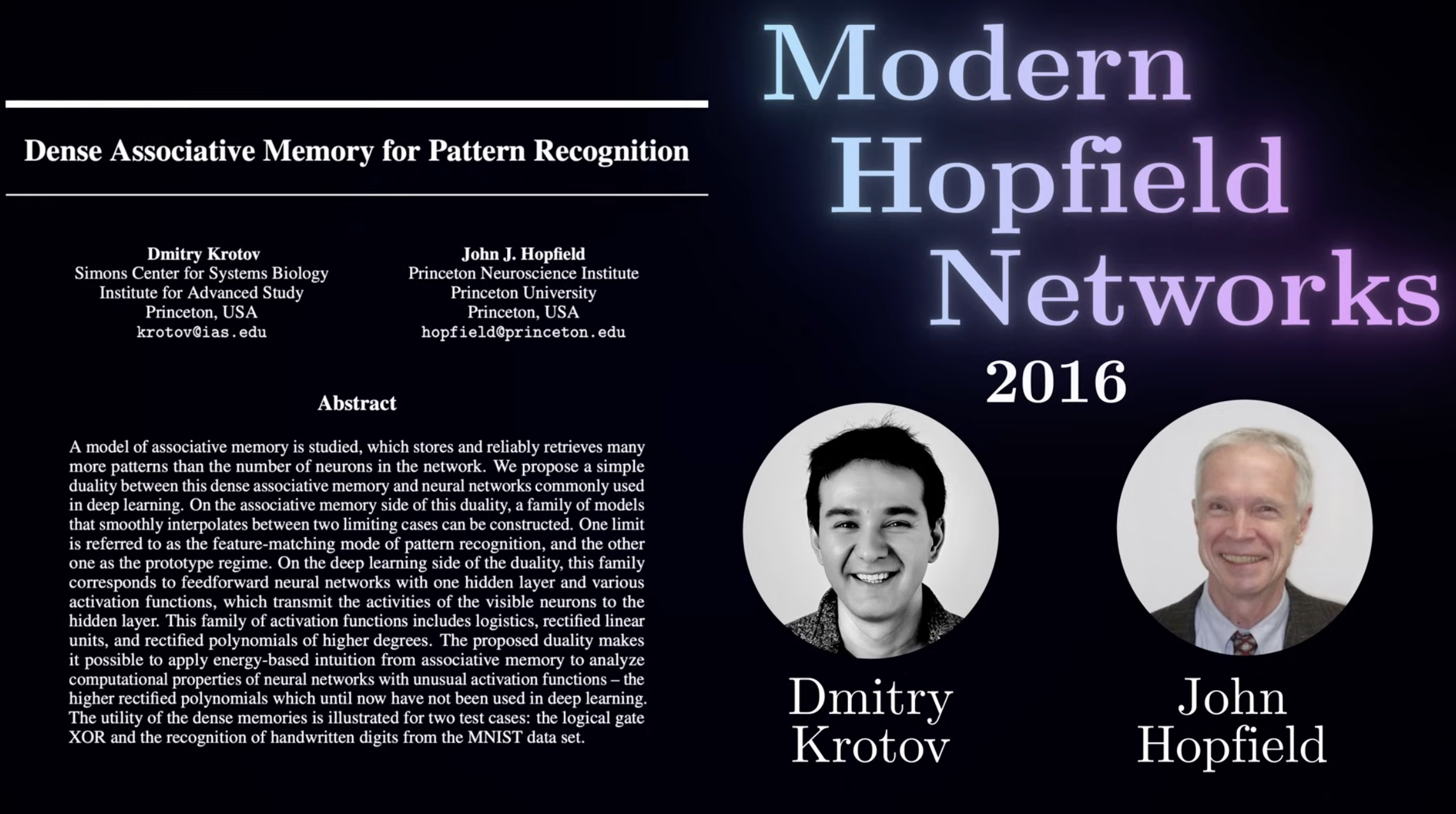

Hopfield Network

Hopfield and Hinton won the Nobel Prize in Physics this year. Big surprise! I found this video that explains Hopfield’s work. It got me thinking about how neural networks were invented, and the story is actually much more complicated than it looks.

0 How Memory works

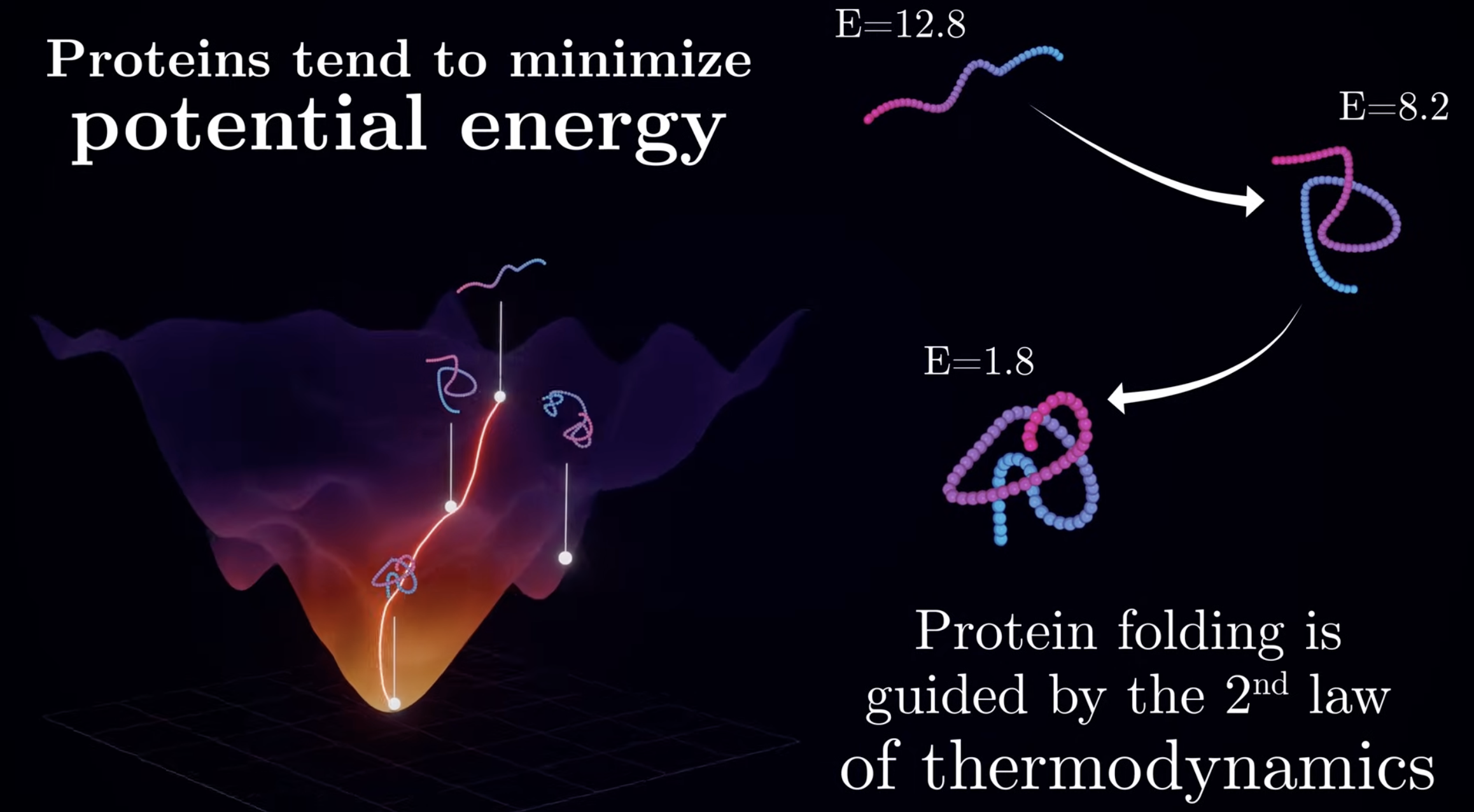

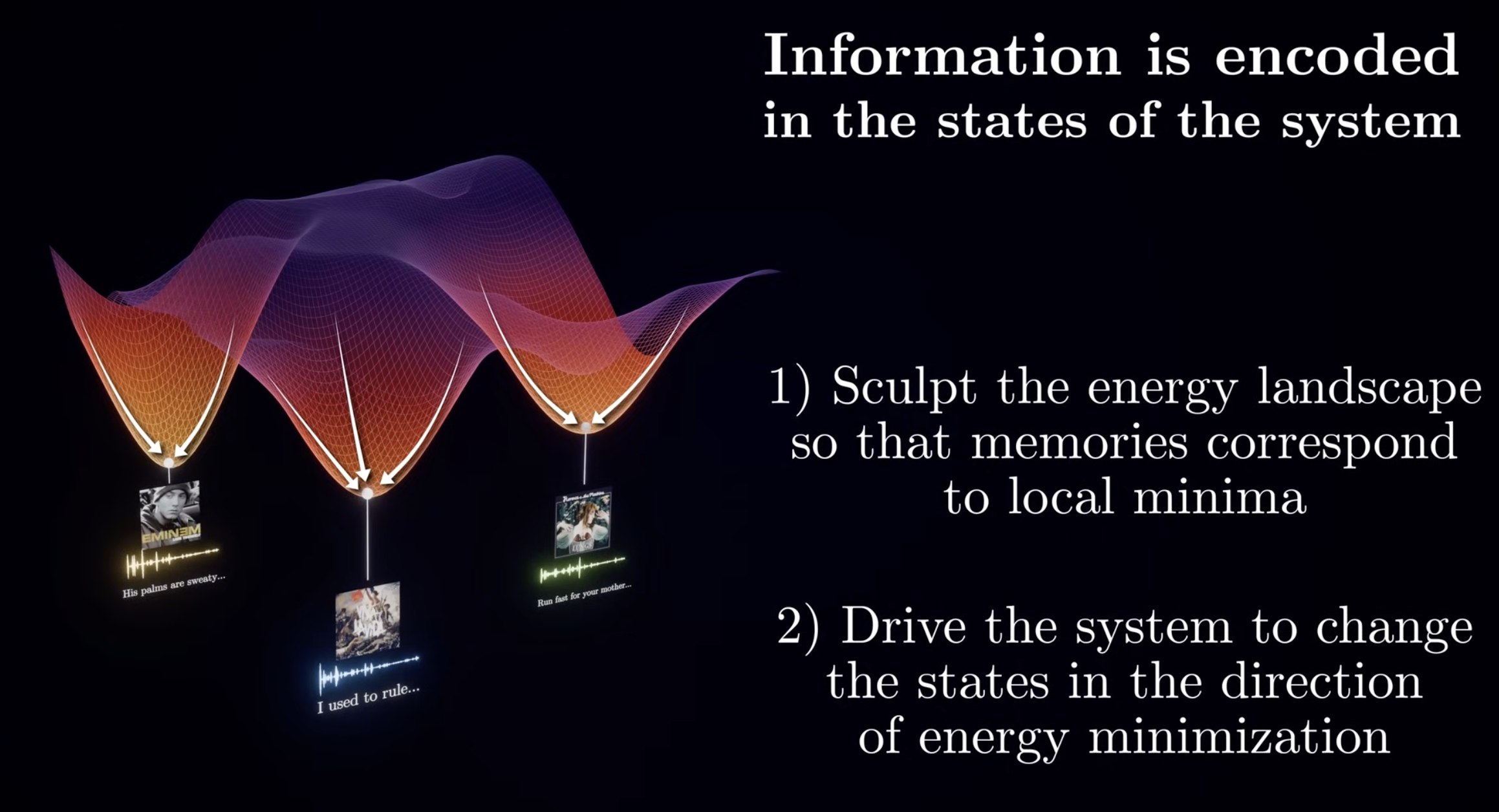

How do we recall a melody after hearing just the beginning of it? Does the brain search through all of its memories for a matching pattern? That would surely be too slow. Take protein folding as an analogy: a protein reaches its proper shape by settling into its minimum-energy form, not by trying every possible configuration.

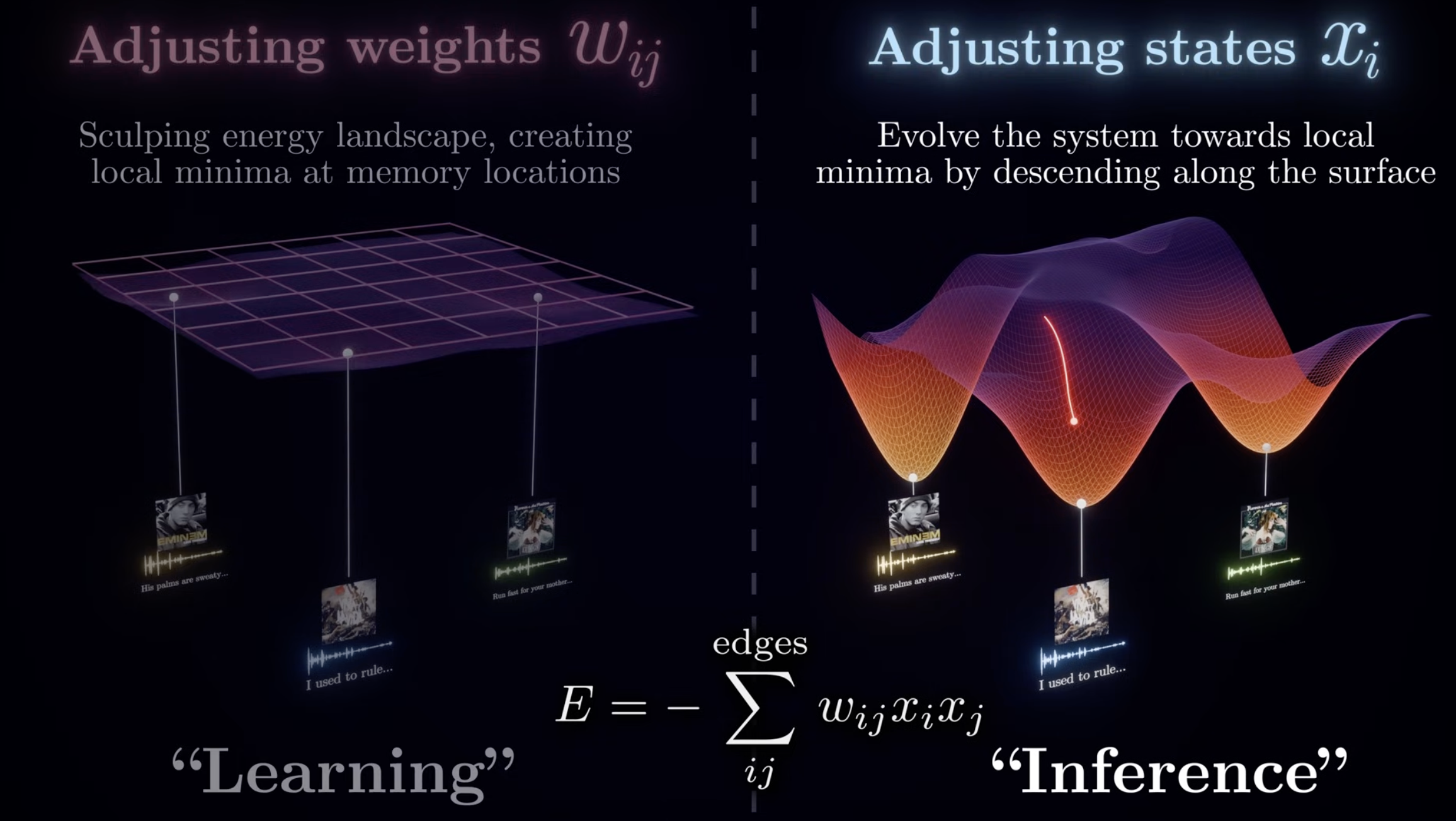

So this energy-based search is what inspired Hopfield to design the Hopfield network. Now we need to design a system that

- Has a proper energy landscape

- Can change its state to search that landscape quickly

1 System Setup

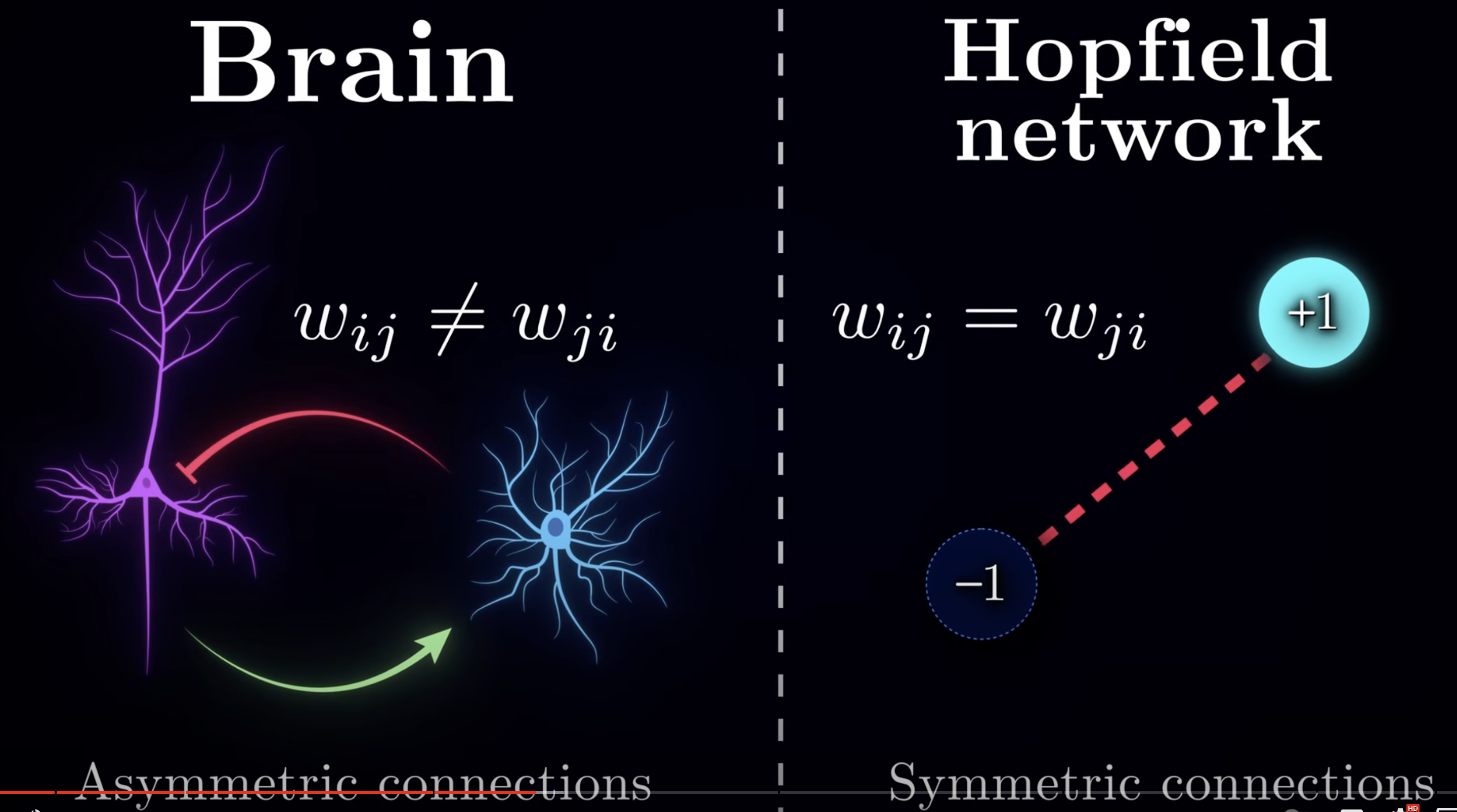

Assume neurons are binary and connections are symmetric, which is not how the brain works. (A future improvement?)

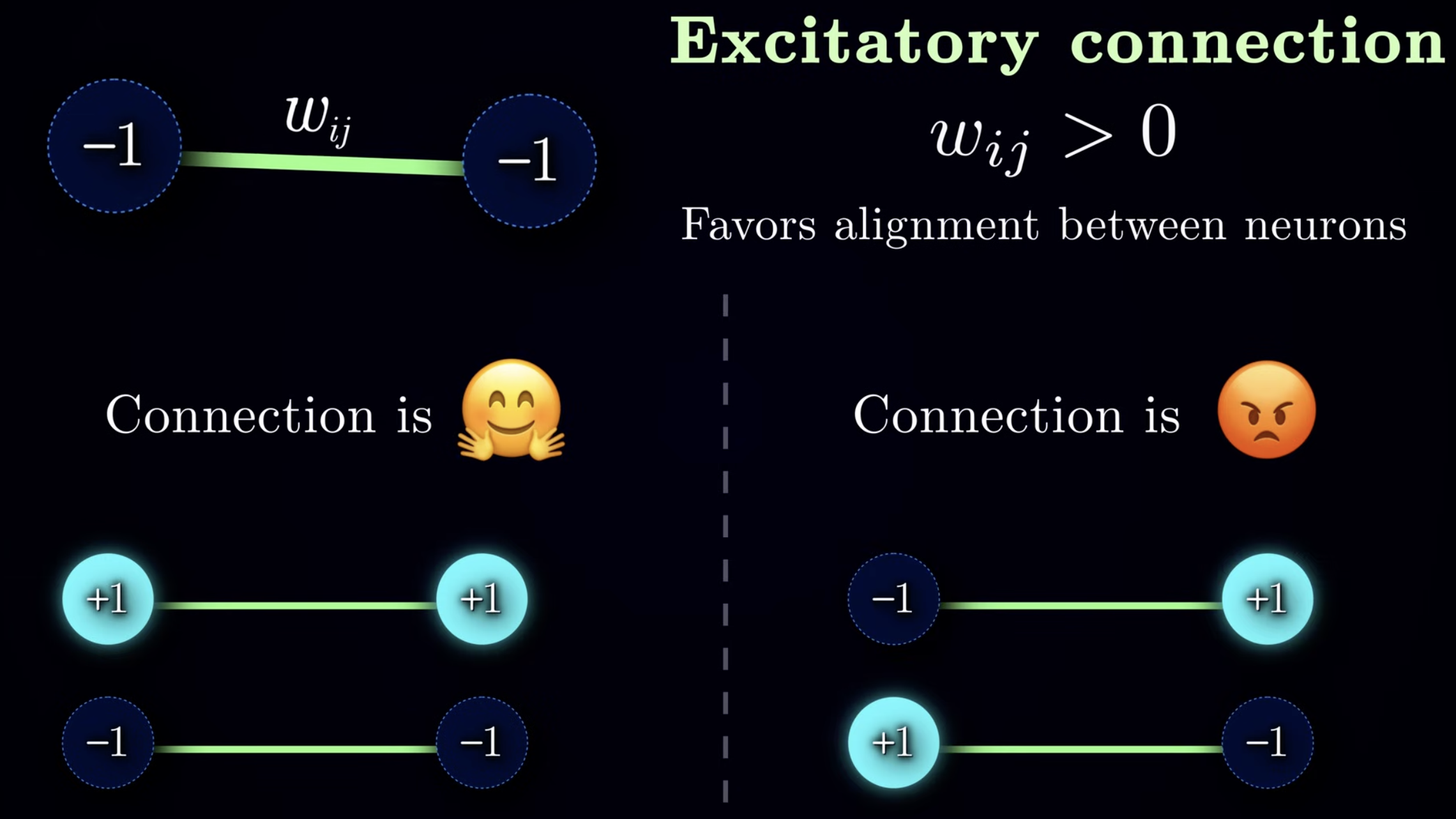

We design the weights so that a positive weight favors alignment between the two neurons it connects

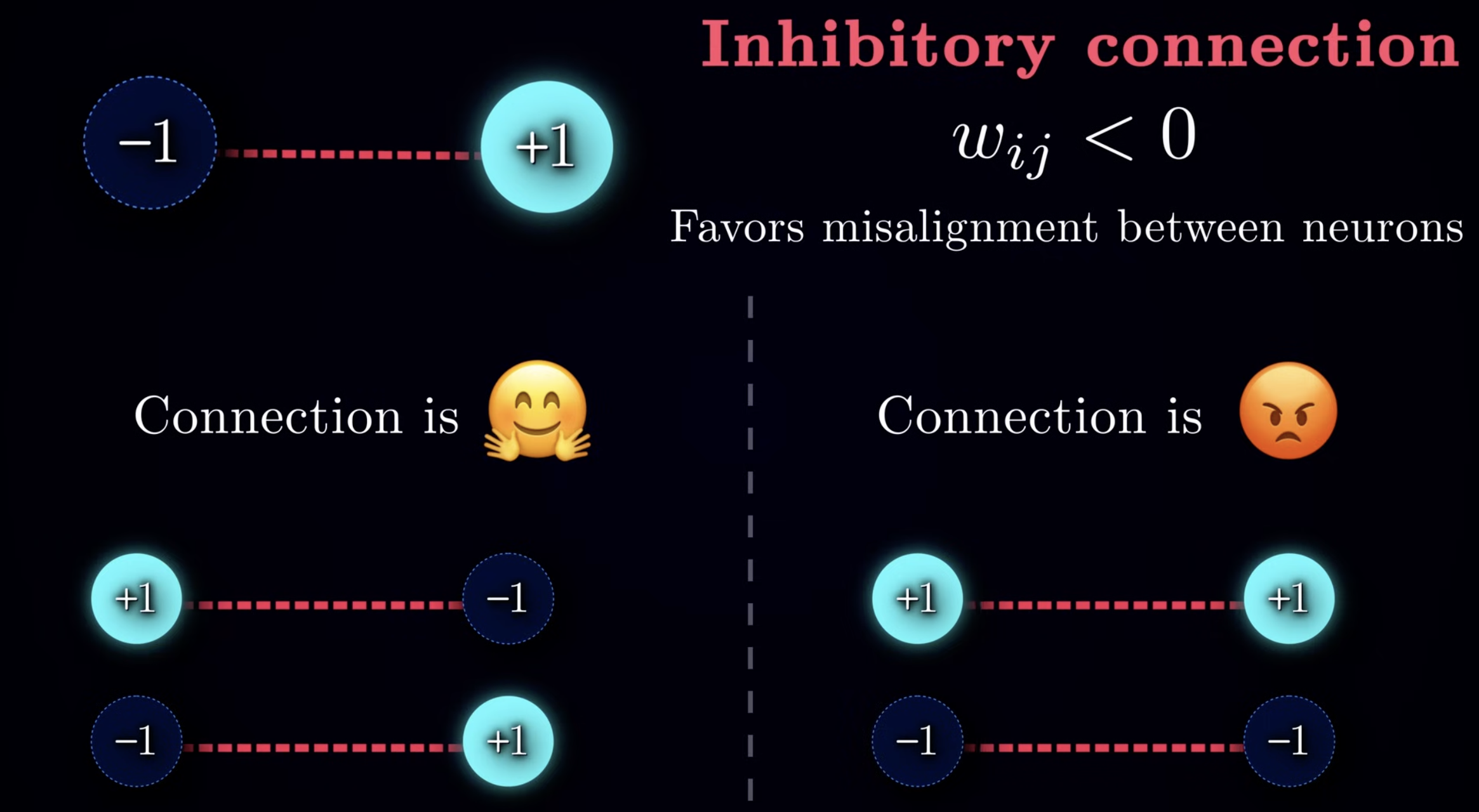

and a negative weight favors misalignment.

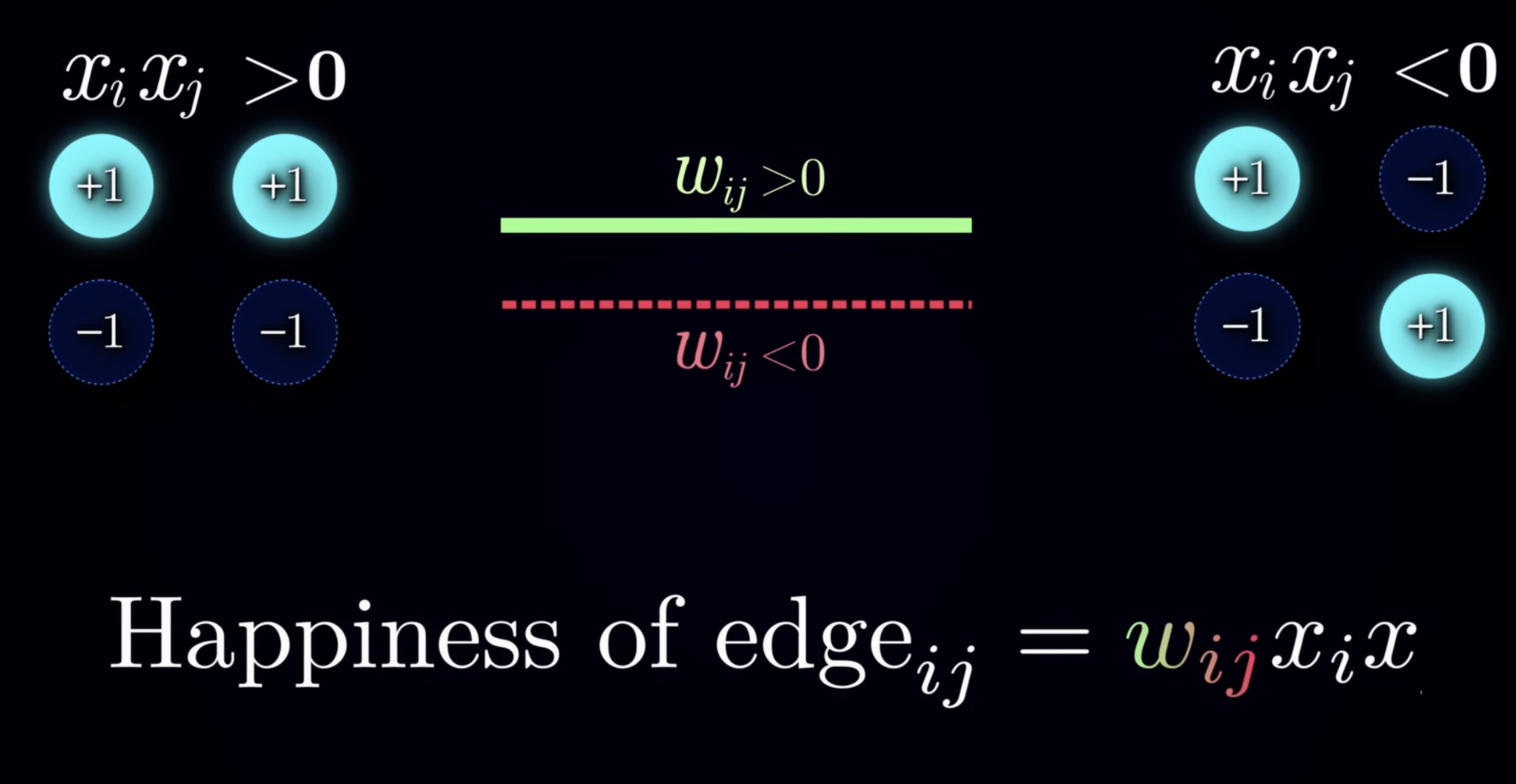

Thus, we can calculate the “happiness” of the network, and the goal is to maximize it.

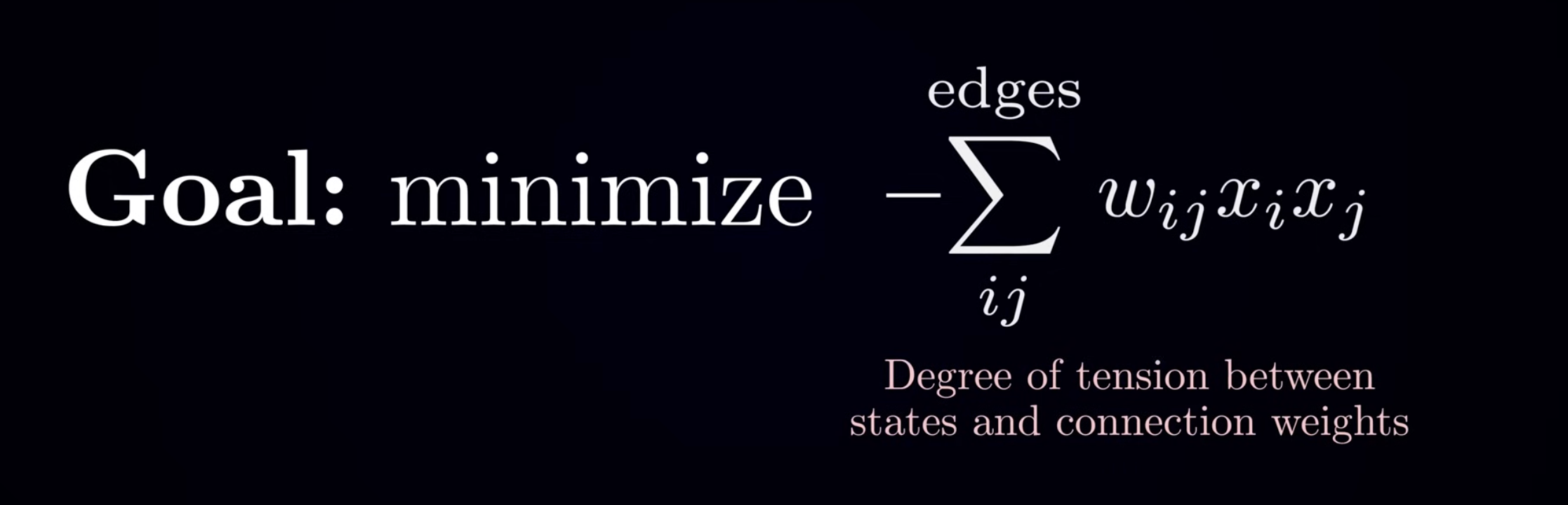

This is the same as minimizing its negative, which is the energy of the network.
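To make the bookkeeping concrete, here is a minimal sketch in plain Python. It assumes neuron states are coded as +1/-1 and that each pair of neurons is counted once; the function names `happiness` and `energy` and the three-neuron example are my own, not taken from the video.

```python
def happiness(weights, state):
    """Sum of w_ij * x_i * x_j over every pair of neurons (i < j)."""
    n = len(state)
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            total += weights[i][j] * state[i] * state[j]
    return total


def energy(weights, state):
    """Energy is the negative of happiness, so lower is better."""
    return -happiness(weights, state)


# Three neurons: 0 and 1 want to agree, 1 and 2 want to disagree.
weights = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, -1.0],
    [0.0, -1.0, 0.0],
]

print(energy(weights, [1, 1, -1]))   # -2.0, every connection is satisfied
print(energy(weights, [1, -1, -1]))  # 2.0, every connection is violated
```

Flipping every neuron at once leaves the energy unchanged, because each term contains a product of two states.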

2 Inference

Inference in this network means finding the full state X, which represents the stored memory. This is very different from inference in today’s neural networks.

In practice, we already know part of X (like hearing the beginning of a melody) and try to recall the rest of it.

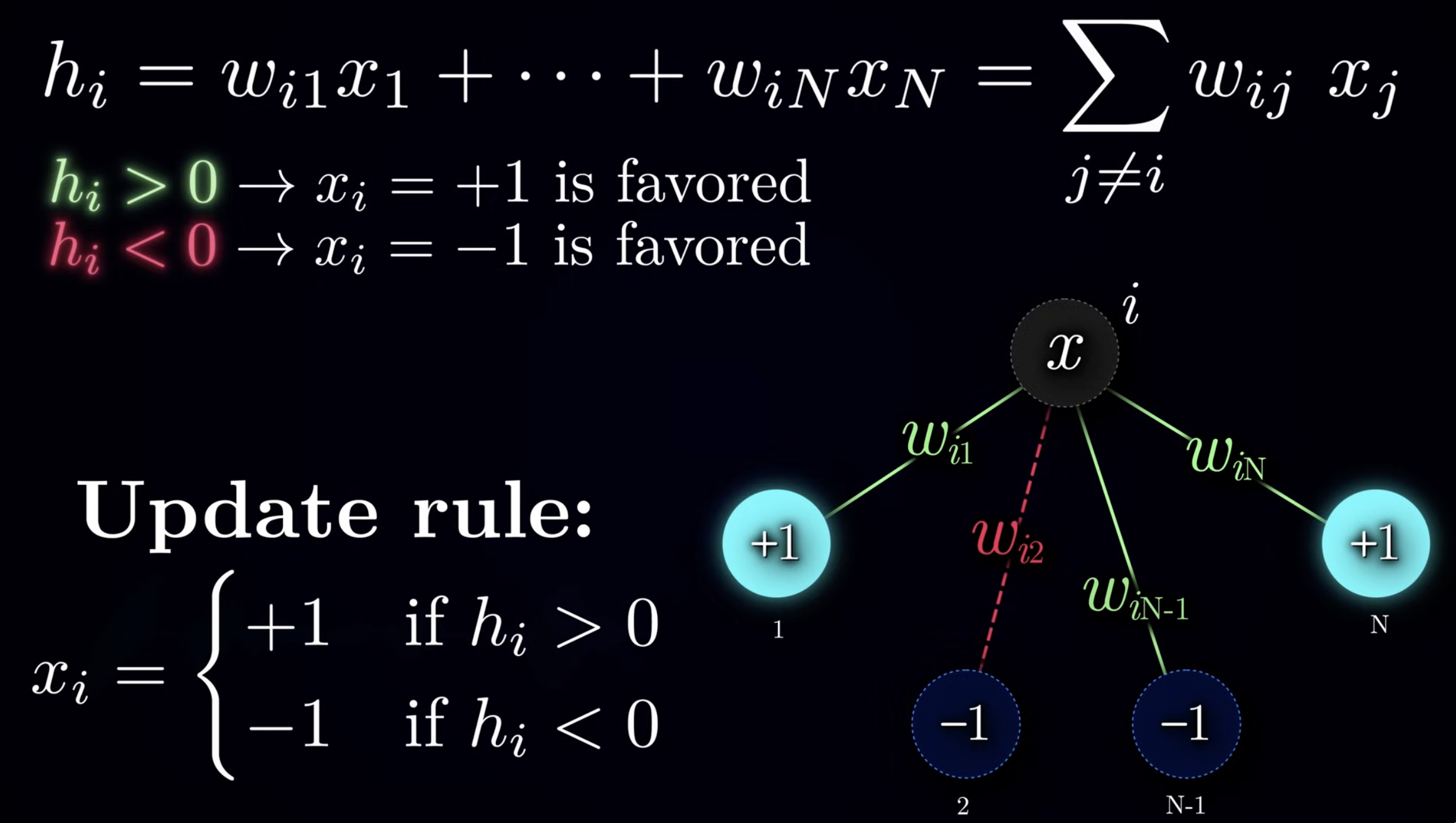

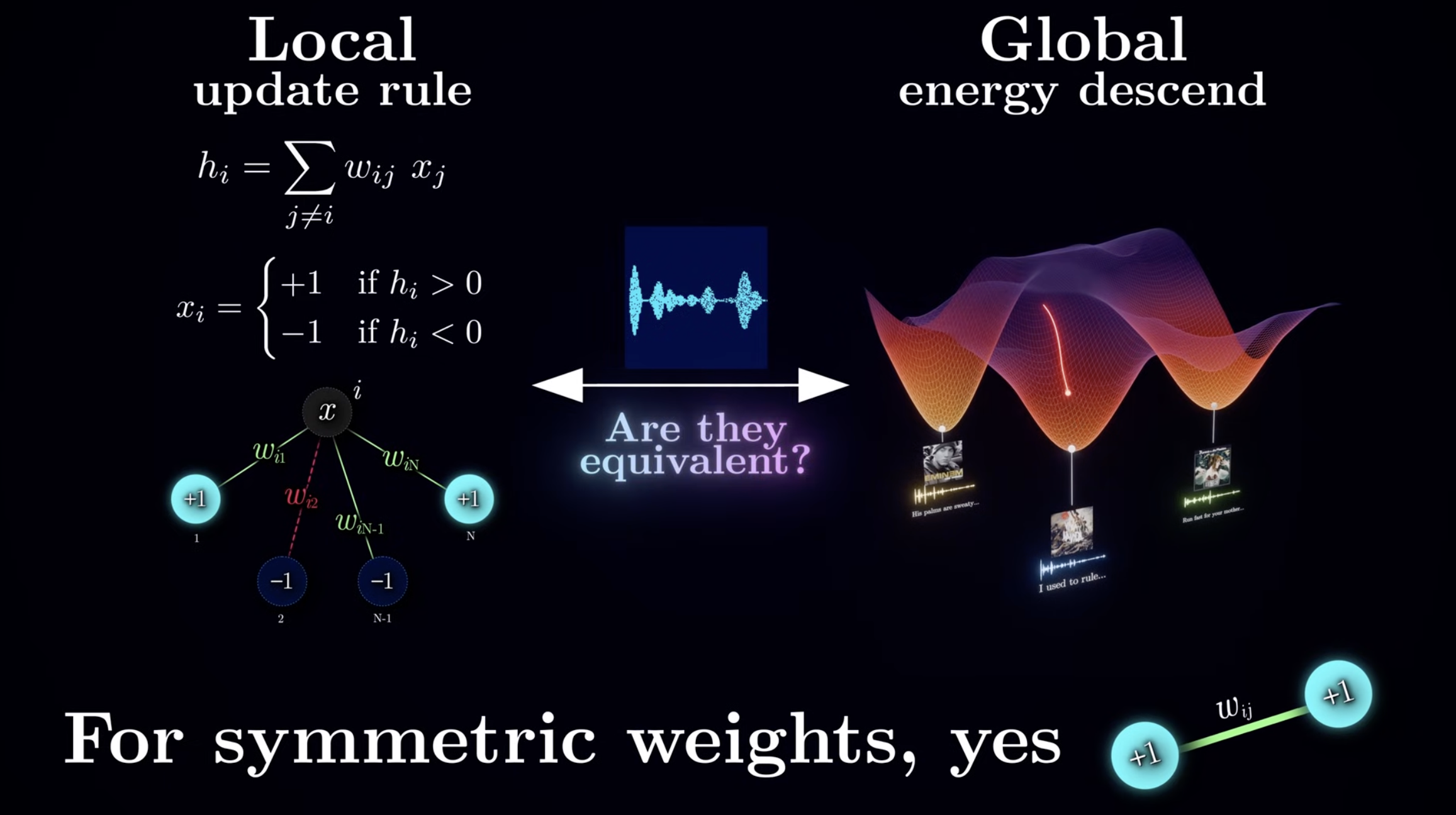

The update rule is straightforward: each neuron, one at a time, takes the sign of the weighted sum of its inputs.
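Here is a rough sketch of what such an update loop could look like, assuming the standard asynchronous rule (one neuron at a time, set to the sign of its weighted input) and +1/-1 states. The names `update_neuron` and `recall`, the tie-break toward +1, and the random update order are my own choices for illustration.

```python
import random


def update_neuron(weights, state, i):
    """Set neuron i to the sign of its weighted input; return True if it changed."""
    field = sum(weights[i][j] * state[j] for j in range(len(state)) if j != i)
    new_value = 1 if field >= 0 else -1  # ties go to +1 (an arbitrary choice)
    changed = new_value != state[i]
    state[i] = new_value
    return changed


def recall(weights, cue, max_sweeps=100, seed=0):
    """Update neurons one at a time, in random order, until nothing changes."""
    rng = random.Random(seed)
    state = list(cue)
    order = list(range(len(state)))
    for _ in range(max_sweeps):
        rng.shuffle(order)
        changed = False
        for i in order:
            if update_neuron(weights, state, i):
                changed = True
        if not changed:
            break  # a full sweep with no flips: the state is stable
    return state


# Three neurons: 0 and 1 want to agree, 1 and 2 want to disagree.
weights = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, -1.0],
    [0.0, -1.0, 0.0],
]
print(recall(weights, [1, -1, -1]))
```

For this tiny network the only stable states are `[1, 1, -1]` and its mirror image `[-1, -1, 1]`; which one the loop lands in depends on the order in which neurons are visited.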

We can also prove that, as long as the connections are symmetric, an update never increases the energy, so the network is guaranteed to settle into a stable state, which is a local minimum of the energy.
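A short sketch of why symmetry matters, in my own notation and assuming +1/-1 states, no self-connections, and the sign update rule. Write the energy and the input to neuron $k$ as

$$E = -\sum_{i<j} w_{ij} x_i x_j, \qquad h_k = \sum_{j \neq k} w_{kj} x_j$$

Because $w_{kj} = w_{jk}$, all the terms of $E$ that contain $x_k$ collect into $-x_k h_k$. If neuron $k$ changes from $x_k$ to $x_k'$ while everything else stays fixed, then

$$\Delta E = -(x_k' - x_k)\, h_k$$

The update rule picks $x_k' = \mathrm{sign}(h_k)$, so $x_k' h_k = |h_k| \ge x_k h_k$ and therefore $\Delta E \le 0$. Since there are only finitely many states, the network has to stop at a state where no single update lowers the energy.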

3 Training

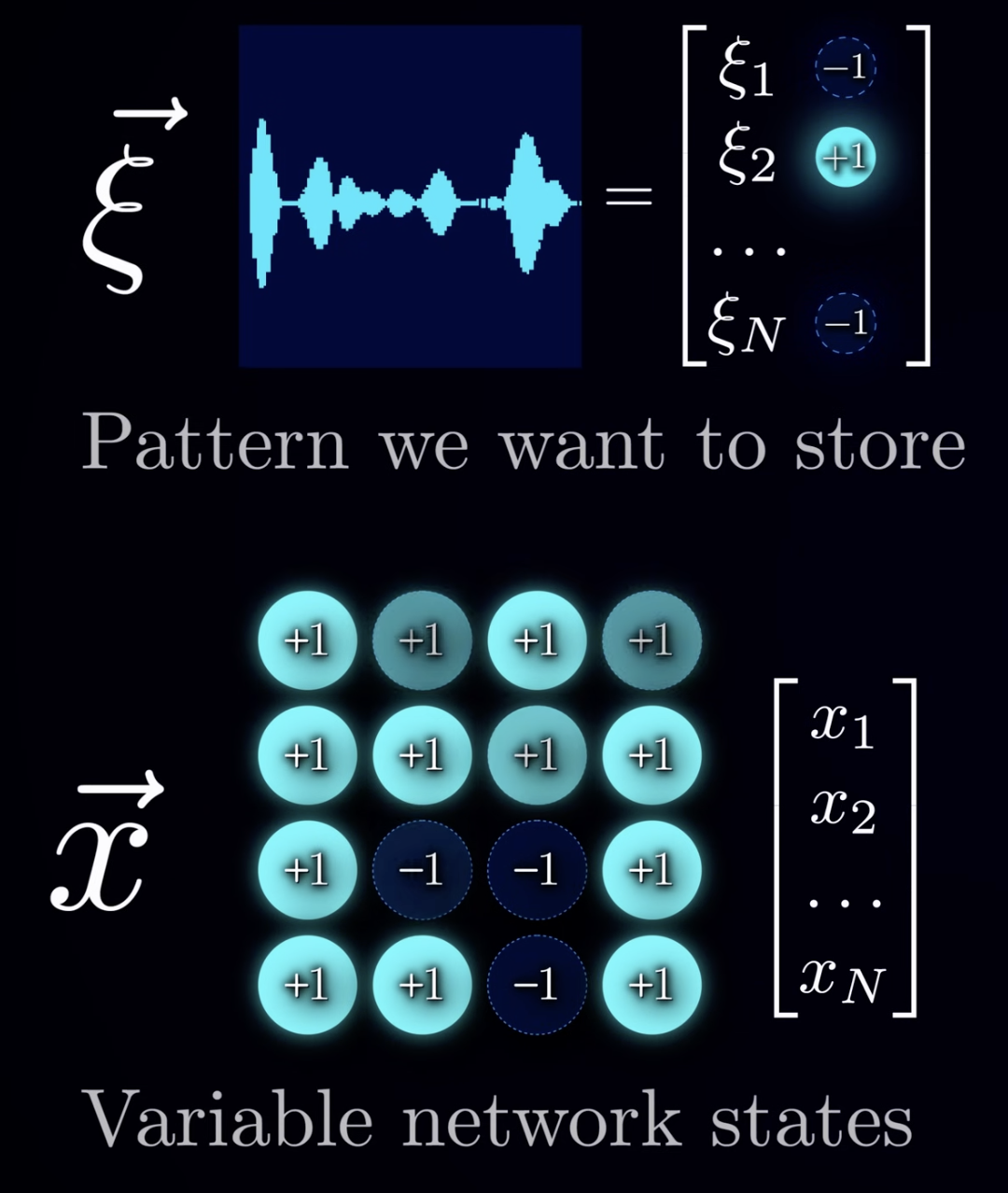

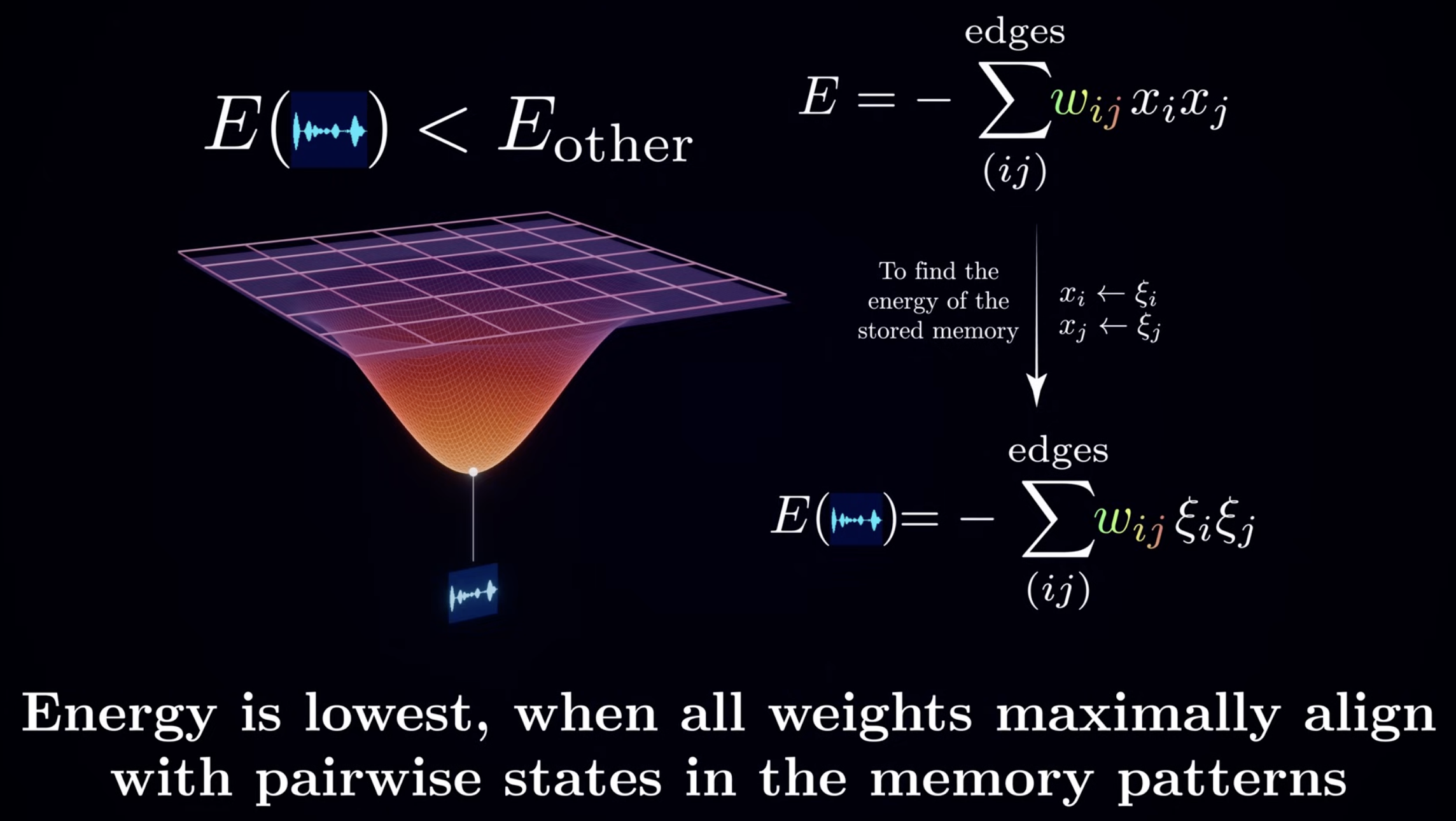

The training step is to find all the weights of the network. The training data are the patterns marked $\xi$ below, whereas $x$ denotes the general state of the network.

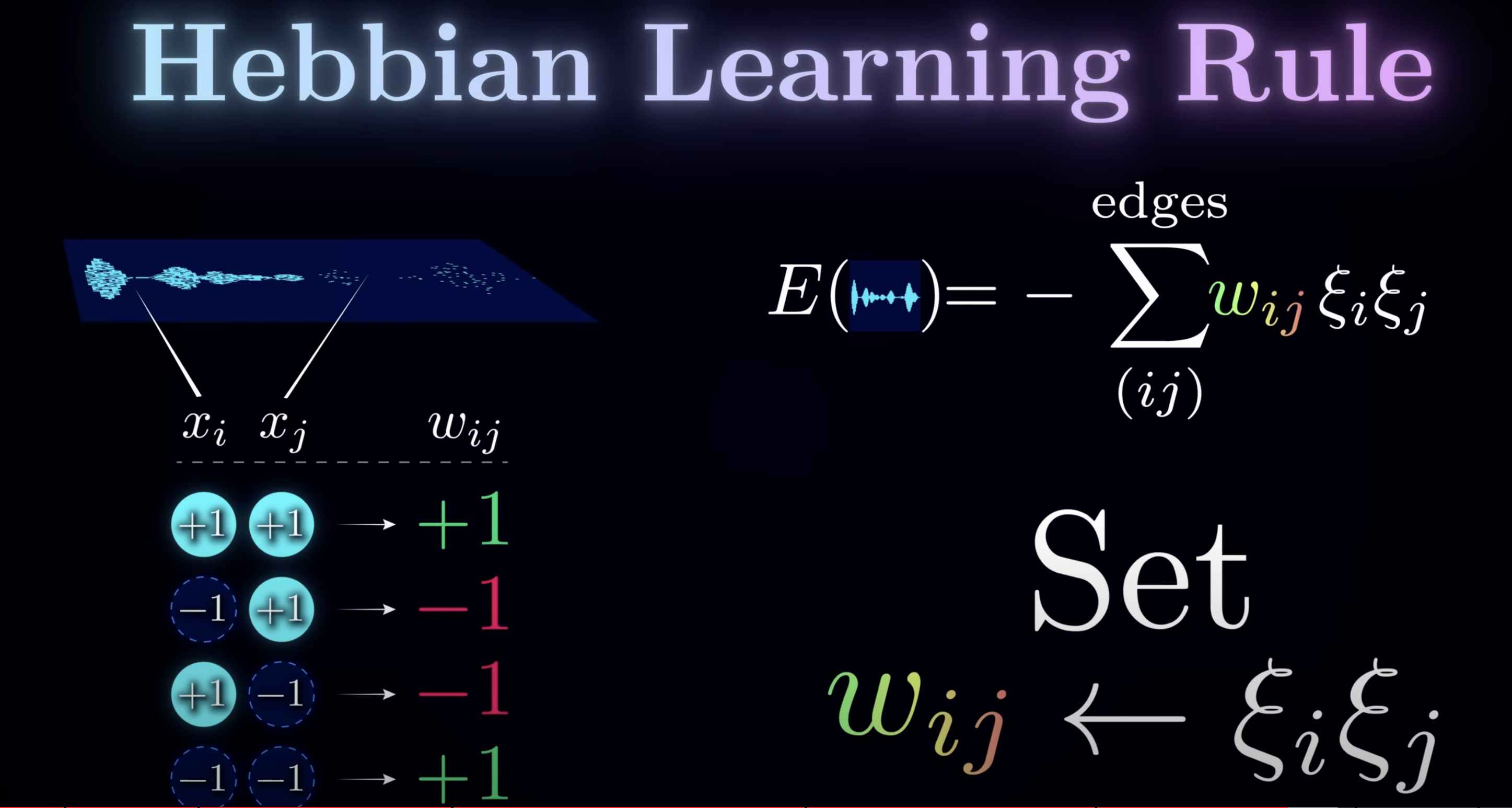

Let’s start with a single training pattern. To make the energy of that memory as low as possible, we can simply set each weight to have the same sign as the product of the two neurons it connects.

This is called the Hebbian learning rule.
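As a quick sanity check (my notation, assuming +1/-1 states and an energy summed over pairs $i<j$), take $w_{ij} = \xi_i \xi_j$ for a network of $N$ neurons. Then the stored pattern has energy

$$E(\xi) = -\sum_{i<j} w_{ij}\,\xi_i \xi_j = -\sum_{i<j} (\xi_i \xi_j)^2 = -\frac{N(N-1)}{2}$$

Every term $w_{ij} x_i x_j$ has magnitude 1 for any state $x$, so no state can go lower than this. The mirrored pattern $-\xi$ reaches the same energy.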

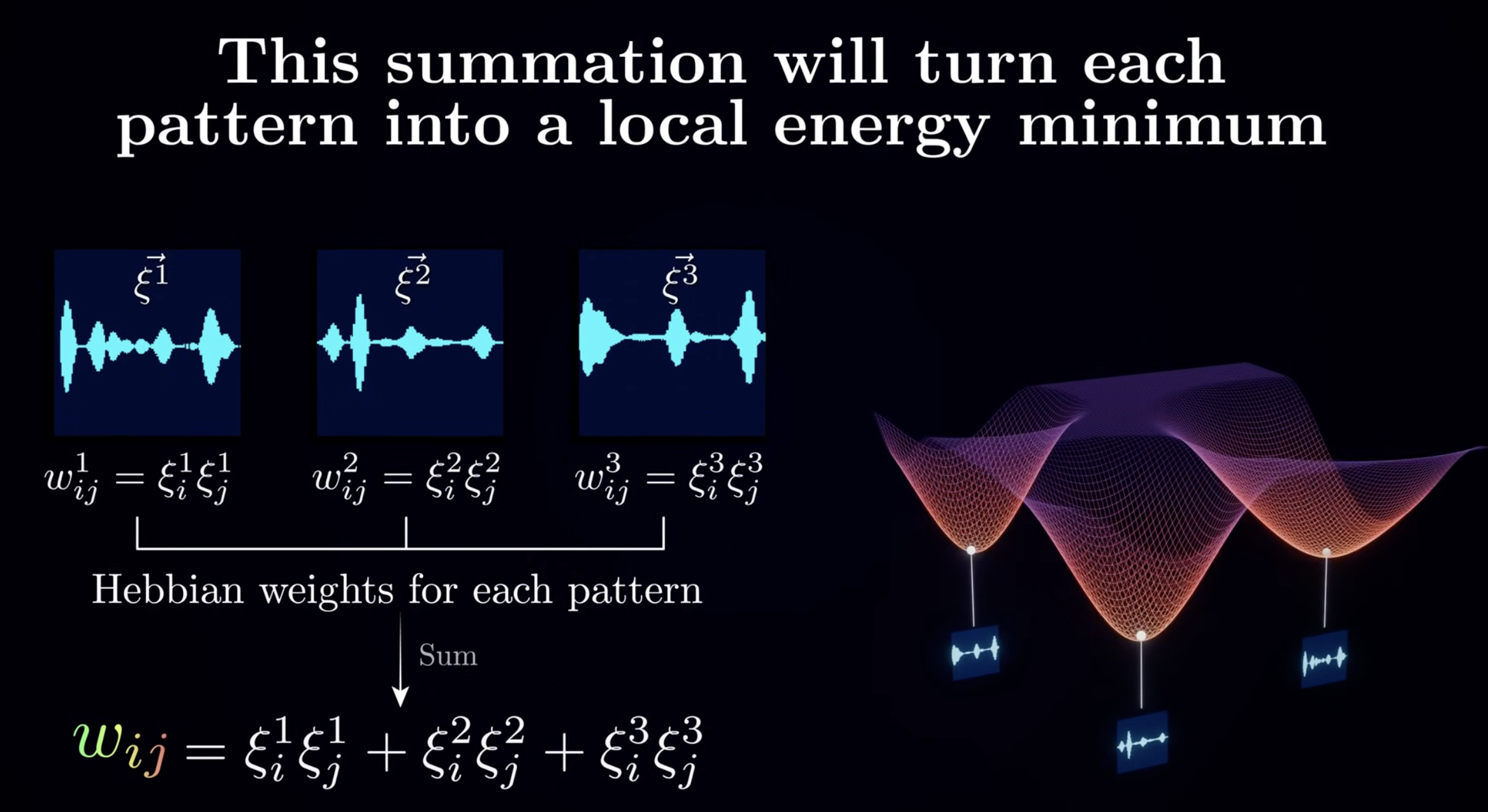

When we have more training patterns, each weight can be set to the sum of the weights obtained from the individual patterns.
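The summed rule can be sketched in a few lines of plain Python. This assumes +1/-1 patterns, no self-connections, and the asynchronous sign update for recall; `train_hebbian`, `recall`, and the two eight-neuron "melodies" are hypothetical names and data made up for this example.

```python
import random


def train_hebbian(patterns):
    """Each weight is the sum over patterns of xi_i * xi_j (no self-connections)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += pattern[i] * pattern[j]
    return weights


def recall(weights, cue, max_sweeps=100, seed=0):
    """Update one neuron at a time to the sign of its input until stable."""
    rng = random.Random(seed)
    state = list(cue)
    n = len(state)
    order = list(range(n))
    for _ in range(max_sweeps):
        rng.shuffle(order)
        changed = False
        for i in order:
            field = sum(weights[i][j] * state[j] for j in range(n) if j != i)
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


melody_a = [1, 1, 1, 1, -1, -1, -1, -1]
melody_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hebbian([melody_a, melody_b])

noisy_a = [-1, 1, 1, 1, -1, -1, -1, -1]  # melody_a with its first neuron flipped
print(recall(weights, noisy_a))  # [1, 1, 1, 1, -1, -1, -1, -1]
```

The two patterns here are deliberately orthogonal, which keeps their minima from disturbing each other; real data is rarely that tidy.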

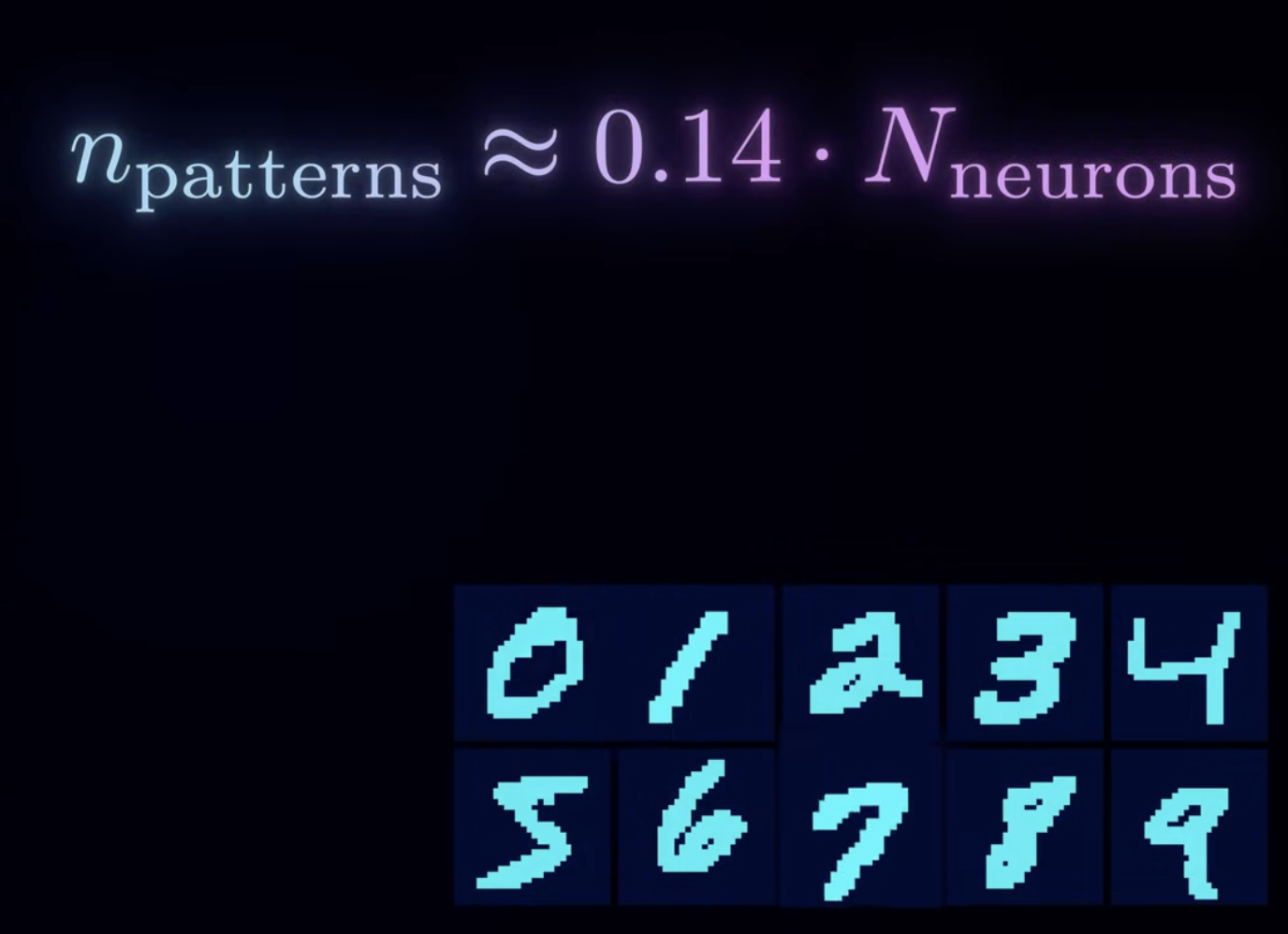

But the local minima interfere with each other, so the number of patterns a Hopfield network can store is limited by the number of neurons (roughly 0.14 times the number of neurons for random patterns under this rule).
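One way to get a feel for this interference is a small experiment: store more and more random patterns in a fixed-size network and count how many of them are still stable. This is only a sketch under my own assumptions (+1/-1 random patterns, summed Hebbian weights, ties counted as stable); all function names are hypothetical, and the exact numbers depend on the seed.

```python
import random


def random_pattern(n, rng):
    """A random pattern of n neurons, each +1 or -1 with equal probability."""
    return [1 if rng.random() < 0.5 else -1 for _ in range(n)]


def train_hebbian(patterns):
    """Each weight is the sum over patterns of xi_i * xi_j (no self-connections)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += pattern[i] * pattern[j]
    return weights


def is_stable(weights, pattern):
    """True if no neuron is pushed to flip (a zero input counts as stable)."""
    n = len(pattern)
    for i in range(n):
        field = sum(weights[i][j] * pattern[j] for j in range(n) if j != i)
        if field * pattern[i] < 0:
            return False
    return True


def stable_fraction(n_neurons, n_patterns, seed=0):
    """Fraction of stored patterns that remain fixed points after training."""
    rng = random.Random(seed)
    patterns = [random_pattern(n_neurons, rng) for _ in range(n_patterns)]
    weights = train_hebbian(patterns)
    stable = sum(1 for p in patterns if is_stable(weights, p))
    return stable / n_patterns


for n_patterns in (1, 5, 10, 20, 40):
    print(n_patterns, stable_fraction(50, n_patterns))
```

A single stored pattern is always stable; as more patterns are packed into the same 50 neurons, I would expect the fraction to fall.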

4 Future work

Hopfield has improved on this work more recently, and we will get into the Boltzmann machine as another improvement, by Dr. Hinton.