SAM and BLIP

Segment Anything Model and Bootstrapping Language-Image Pre-training

1 SAM

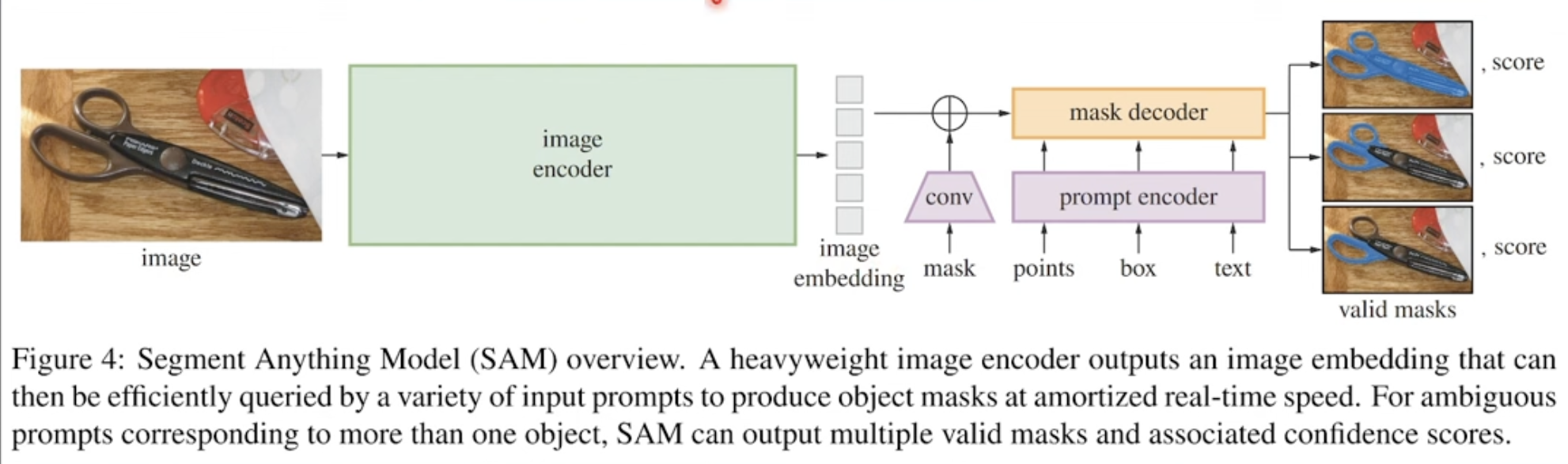

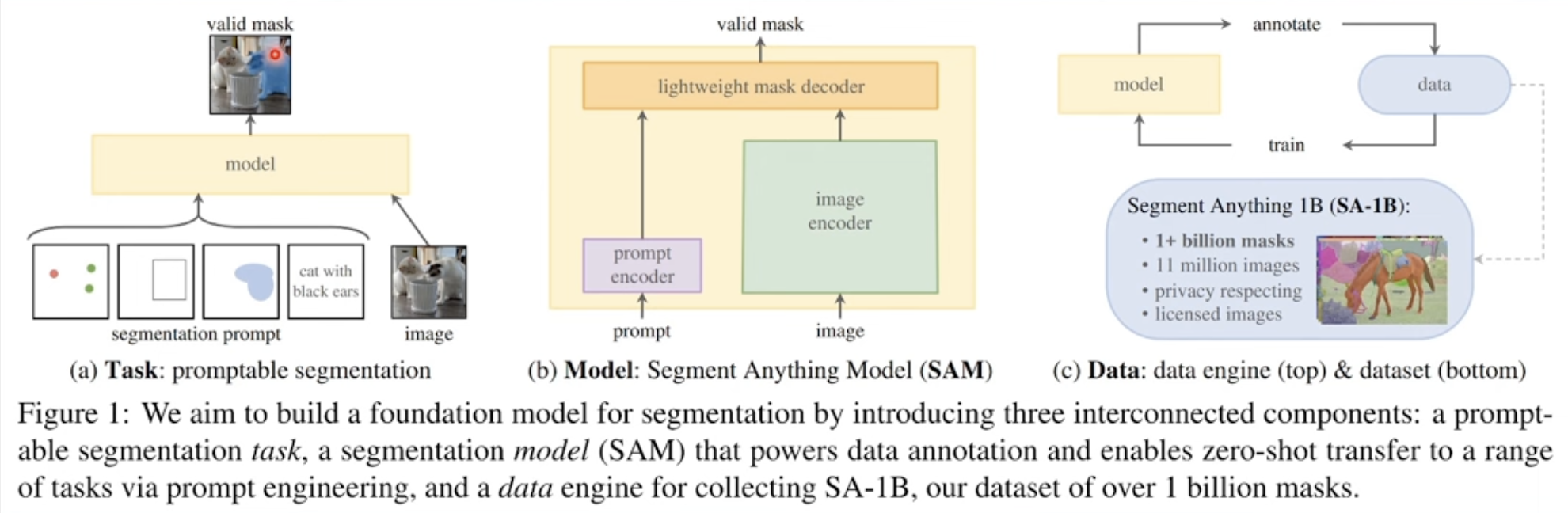

Meta published SAM as a foundation model for general-purpose, promptable segmentation.

A couple of key technical points in SAM. First, SAM accepts several kinds of prompts as input: points, boxes, or text.
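Below is a rough sketch of how point and box prompts can be turned into tokens, modeled loosely on SAM's prompt encoder. Each point or box corner gets a random Fourier-feature positional encoding plus a learned embedding that says what it is (foreground point, background point, or box corner). The 256-d size and all names here (`positional_encoding`, `encode_points`, `encode_box`, `TYPE_EMBED`) are assumptions for illustration. The "learned" embeddings are just random vectors, and text prompts, which need a separate text encoder, are left out.

```python
import numpy as np

EMBED_DIM = 256  # assumed prompt-token size
rng = np.random.default_rng(0)

# Fixed random Gaussian projection used for Fourier-feature positional encoding.
GAUSSIAN_MATRIX = rng.normal(size=(2, EMBED_DIM // 2))

# Stand-ins for learned embeddings that tag what each sparse token represents.
TYPE_EMBED = {
    name: rng.normal(size=EMBED_DIM)
    for name in ("foreground", "background", "box_top_left", "box_bottom_right")
}


def positional_encoding(xy, image_size):
    """Map (N, 2) pixel coordinates (x, y) to (N, EMBED_DIM) Fourier features.

    image_size is (width, height)."""
    coords = np.asarray(xy, dtype=float) / np.asarray(image_size, dtype=float)  # -> [0, 1]
    coords = 2.0 * coords - 1.0                                                 # -> [-1, 1]
    proj = 2.0 * np.pi * coords @ GAUSSIAN_MATRIX                               # (N, EMBED_DIM // 2)
    return np.concatenate([np.sin(proj), np.cos(proj)], axis=-1)


def encode_points(points, labels, image_size):
    """One token per clicked point: position + foreground/background tag."""
    pe = positional_encoding(points, image_size)
    return pe + np.stack([TYPE_EMBED[label] for label in labels])


def encode_box(box, image_size):
    """A box (x0, y0, x1, y1) becomes two tokens, one per corner."""
    x0, y0, x1, y1 = box
    pe = positional_encoding([[x0, y0], [x1, y1]], image_size)
    return pe + np.stack([TYPE_EMBED["box_top_left"], TYPE_EMBED["box_bottom_right"]])


tokens = np.concatenate([
    encode_points([[120, 80]], ["foreground"], image_size=(1024, 1024)),
    encode_box((100, 50, 300, 200), image_size=(1024, 1024)),
])
print(tokens.shape)  # (3, 256)
```

Because every prompt ends up as tokens of the same size, any mix of points and boxes can be concatenated into one sequence for a single mask decoder.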

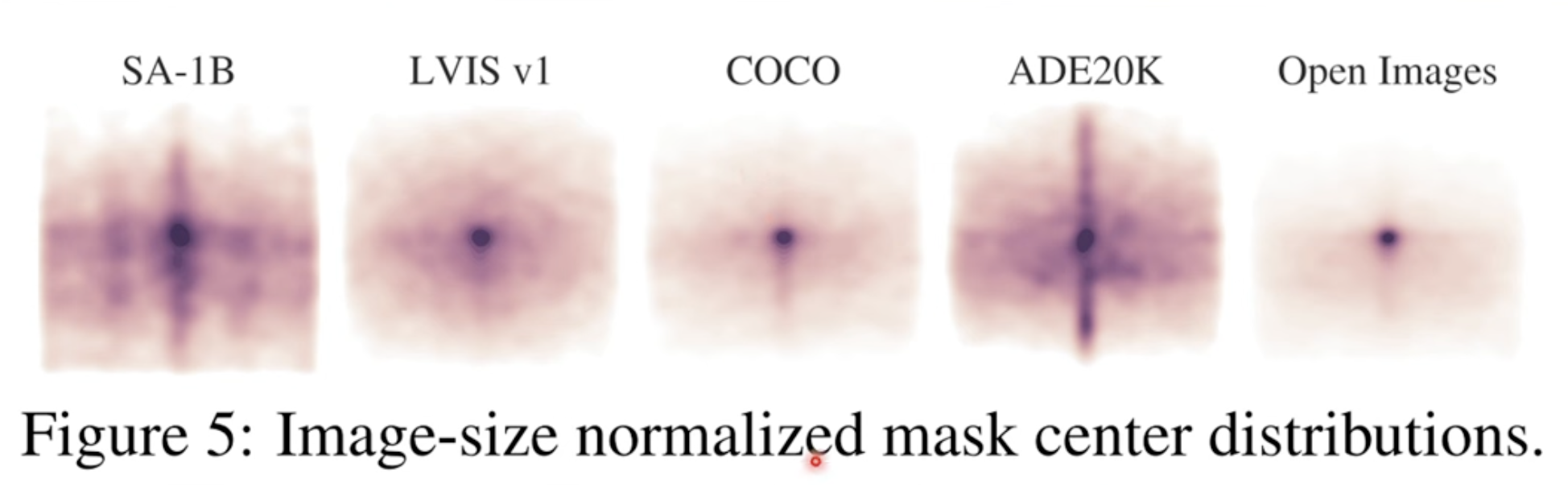

SAM is trained on the SA-1B dataset, which combines high-quality segmentation masks with very large scale (about 1.1 billion masks on 11 million images).

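With about a billion masks, it is impossible to have humans label them all, so a bootstrapping loop (SAM's "data engine") was used: the model helps annotate, is retrained on the new masks, and the cycle repeats until masks can be generated fully automatically.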
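The bootstrapping idea can be illustrated with a generic self-training loop on toy 1-D data: train on a small labeled seed set, let the model label a larger pool, keep only confident labels, retrain, and repeat. This is not SAM's data engine (which mixed human annotators with model-proposed masks over several stages); the threshold "model", the confidence rule, and all function names are hypothetical.

```python
import numpy as np


def fit_threshold(x, y):
    """Toy 'model': a decision threshold halfway between the two class means."""
    return 0.5 * (x[y == 0].mean() + x[y == 1].mean())


def predict_with_confidence(threshold, x, scale=1.0):
    """Predicted label plus a confidence in [0, 1) that grows with distance from the threshold."""
    labels = (x > threshold).astype(int)
    confidence = 1.0 - np.exp(-np.abs(x - threshold) / scale)
    return labels, confidence


def bootstrap(seed_x, seed_y, pool_x, rounds=3, min_confidence=0.8):
    """Self-training: grow the labeled set from the model's own confident predictions."""
    x_train, y_train = seed_x.copy(), seed_y.copy()
    remaining = pool_x.copy()
    for r in range(rounds):
        threshold = fit_threshold(x_train, y_train)                   # 1. train on current labels
        labels, conf = predict_with_confidence(threshold, remaining)  # 2. auto-annotate the pool
        keep = conf >= min_confidence                                 # 3. trust only confident labels
        x_train = np.concatenate([x_train, remaining[keep]])
        y_train = np.concatenate([y_train, labels[keep]])
        remaining = remaining[~keep]                                  # 4. repeat on the rest
        print(f"round {r}: threshold={threshold:.2f}, labeled={len(x_train)}, unlabeled={len(remaining)}")
    return fit_threshold(x_train, y_train), x_train, y_train


rng = np.random.default_rng(0)
seed_x = np.array([-2.5, -2.0, -1.5, 1.5, 2.0, 2.5])
seed_y = np.array([0, 0, 0, 1, 1, 1])
pool_x = np.concatenate([rng.normal(-2.0, 1.0, 500), rng.normal(2.0, 1.0, 500)])
final_threshold, x_all, y_all = bootstrap(seed_x, seed_y, pool_x)
```

Starting from six labeled points, the labeled set grows by hundreds of automatically labeled samples in the first round, which is the same leverage the data engine gets at a much larger scale.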

Putting everything together, we get the foundation model.

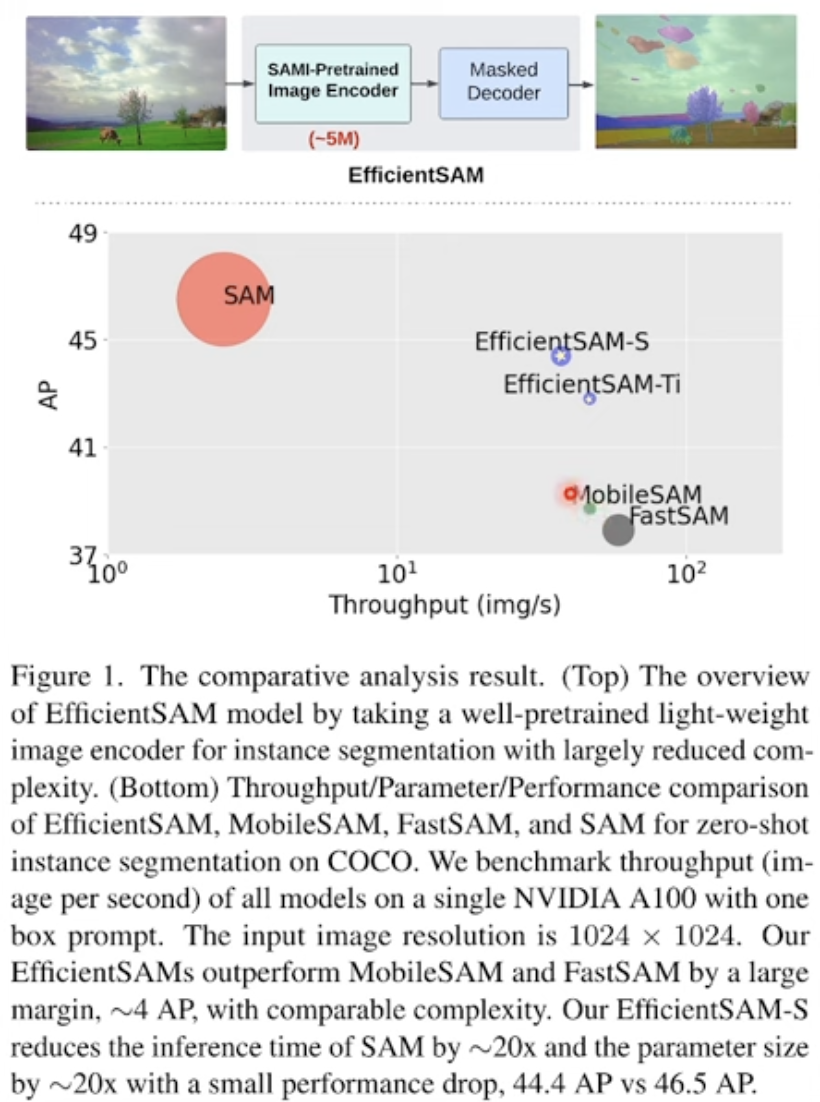

Efficient SAM was introduced later

It sacrifices some accuracy for speed.

2 BLIP

What is bootstrapping in AI?

Let’s see how BLIP uses this technique.

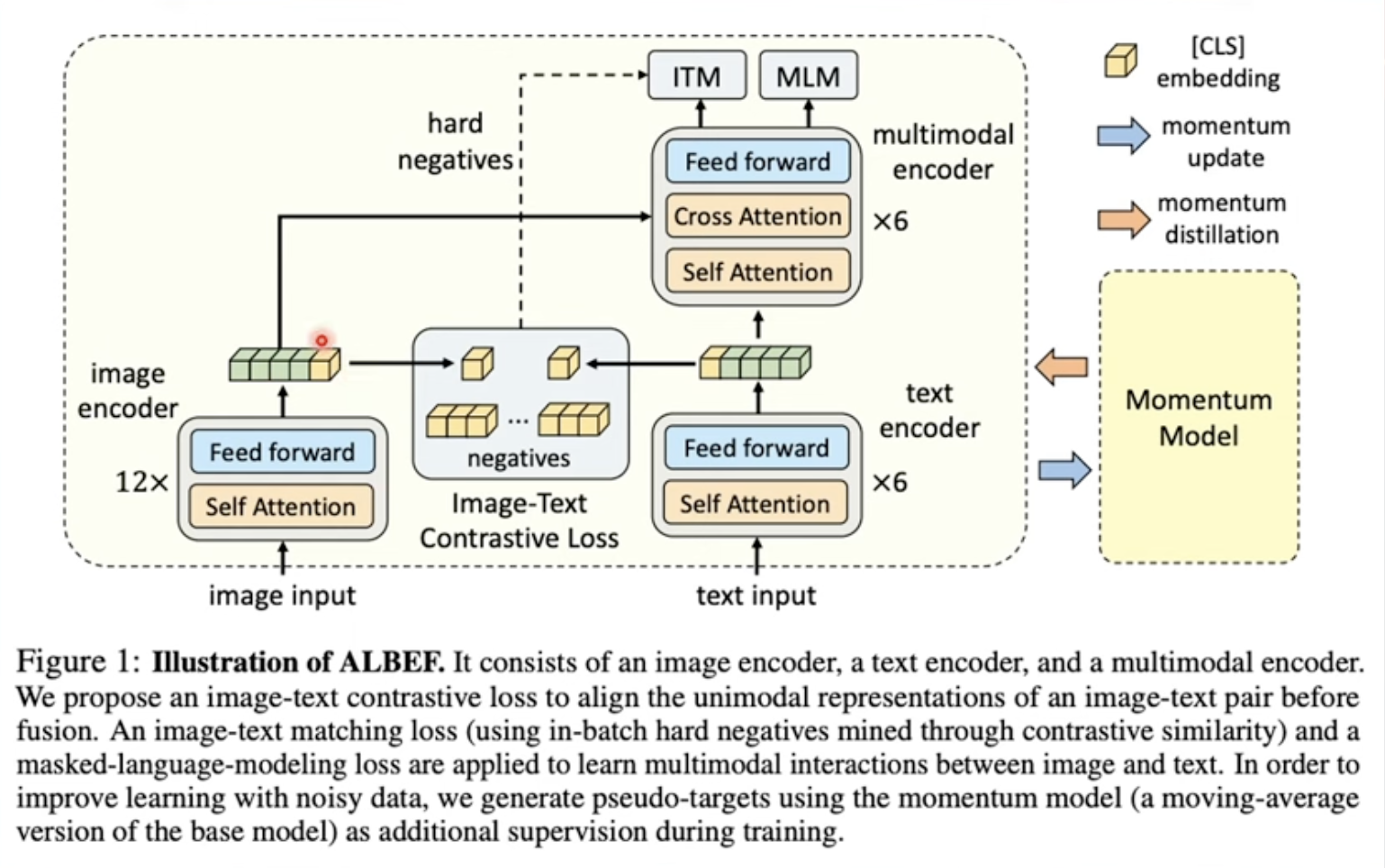

First, take a look at ALBEF (ALign the image and text representations BEfore Fusing). It uses contrastive learning to align the image and text embeddings before they are fused.
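A minimal NumPy sketch of the image-text contrastive (ITC) loss: embeddings are L2-normalized, every image is scored against every text in the batch, and a symmetric cross-entropy pulls each image toward its own caption and away from the others. ALBEF additionally uses a momentum encoder, a queue of extra negatives, and momentum distillation, which are omitted here; the function names and the temperature of 0.07 are assumptions.

```python
import numpy as np


def log_softmax(logits, axis):
    """Numerically stable log-softmax along one axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def itc_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss for N matched (image, text) pairs.

    Row i of image_emb and row i of text_emb form a positive pair;
    every other pairing in the batch is treated as a negative.
    """
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = img @ txt.T / temperature                        # (N, N) scaled cosine similarities
    idx = np.arange(logits.shape[0])
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # each image picks its text
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # each text picks its image
    return 0.5 * (loss_i2t + loss_t2i)


rng = np.random.default_rng(0)
images = rng.normal(size=(8, 32))
matched = itc_loss(images, images + 0.01 * rng.normal(size=(8, 32)))
unmatched = itc_loss(images, rng.normal(size=(8, 32)))
print(f"matched: {matched:.3f}, unmatched: {unmatched:.3f}")  # matched is far lower
```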

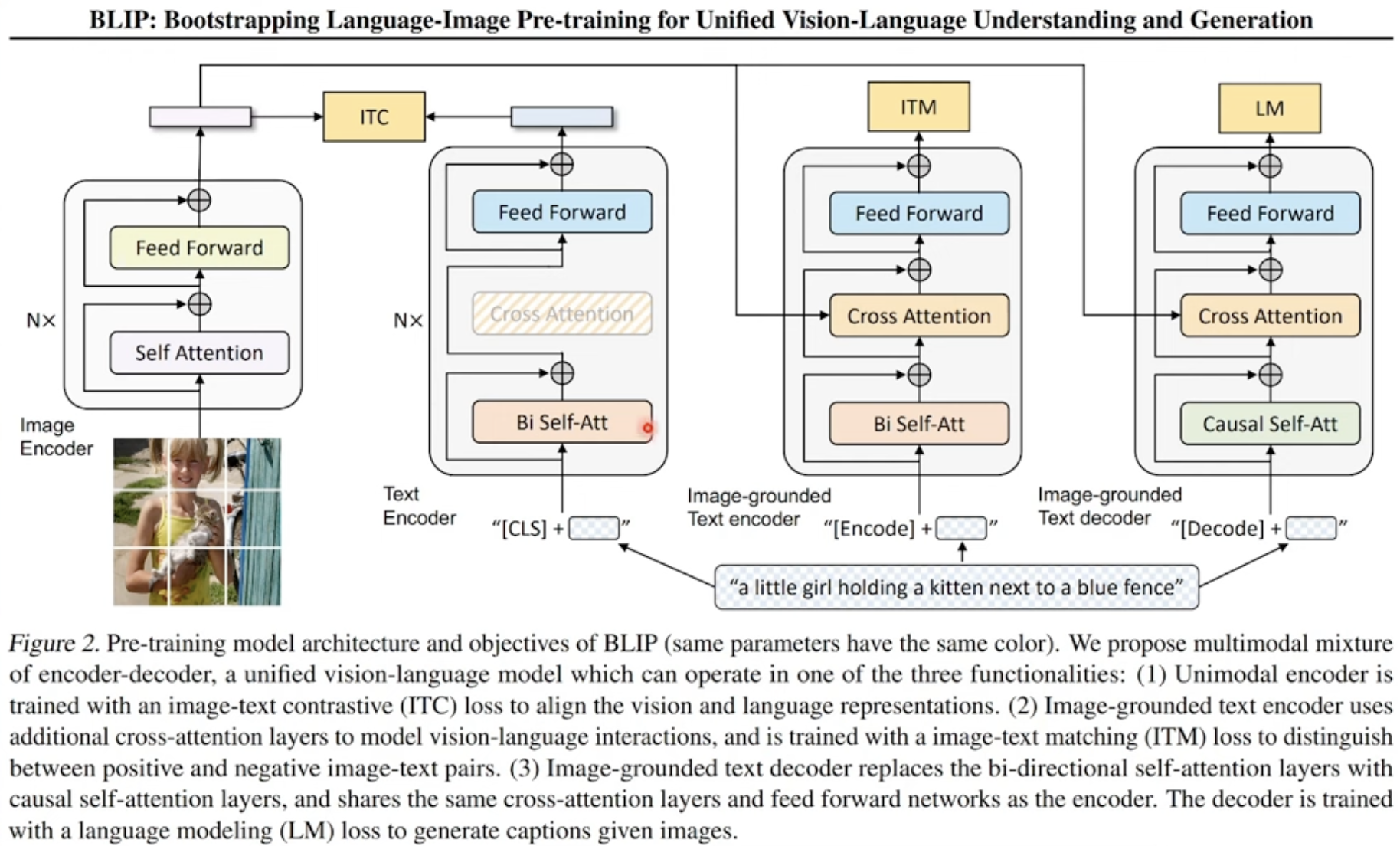

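BLIP's ITC (image-text contrastive) objective is very similar to ALBEF's. BLIP also trains ITM (image-text matching) and, in place of ALBEF's masked language modeling, an LM (language modeling) objective that generates text for an image.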
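ITM is a binary classifier (matched or not matched) on top of the fused image-text representation. ALBEF and BLIP make it harder by choosing negatives that the contrastive model already finds similar. The sketch below covers only that hard-negative sampling step in the image-to-text direction, assuming a precomputed similarity matrix `sim` where `sim[i, j]` scores image `i` against text `j`; the function names are hypothetical and the classifier itself is not shown.

```python
import numpy as np


def sample_hard_negatives(sim, rng):
    """For each image i, draw one non-matching text j with probability
    proportional to exp(sim[i, j]), so captions that look similar are chosen more often."""
    n = sim.shape[0]
    weights = np.exp(sim - sim.max(axis=1, keepdims=True))
    np.fill_diagonal(weights, 0.0)  # never pick the true caption as a negative
    probs = weights / weights.sum(axis=1, keepdims=True)
    return np.array([rng.choice(n, p=probs[i]) for i in range(n)])


def build_itm_batch(sim, rng):
    """(image_idx, text_idx, label) for N positive pairs followed by N hard negatives."""
    n = sim.shape[0]
    neg_text = sample_hard_negatives(sim, rng)
    image_idx = np.concatenate([np.arange(n), np.arange(n)])
    text_idx = np.concatenate([np.arange(n), neg_text])
    labels = np.concatenate([np.ones(n, dtype=int), np.zeros(n, dtype=int)])
    return image_idx, text_idx, labels


rng = np.random.default_rng(0)
sim = rng.normal(size=(4, 4))  # stand-in for ITC similarities
print(build_itm_batch(sim, rng))
```

Each (image, text) pair would then go through the fusion encoder, and the ITM head is trained with a classification loss against `labels`.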

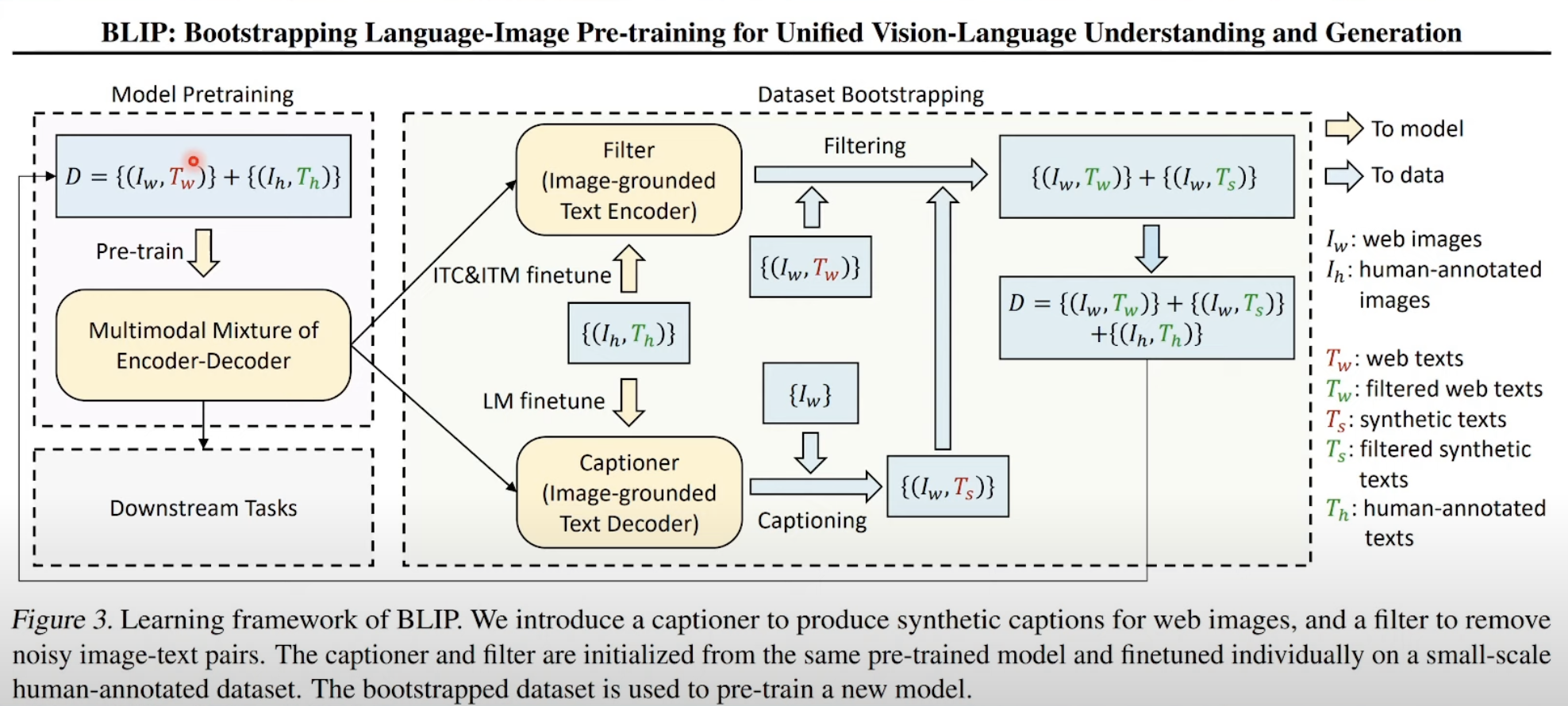

Training starts from web data plus a small set of human-labeled data, which serves as the bootstrap.

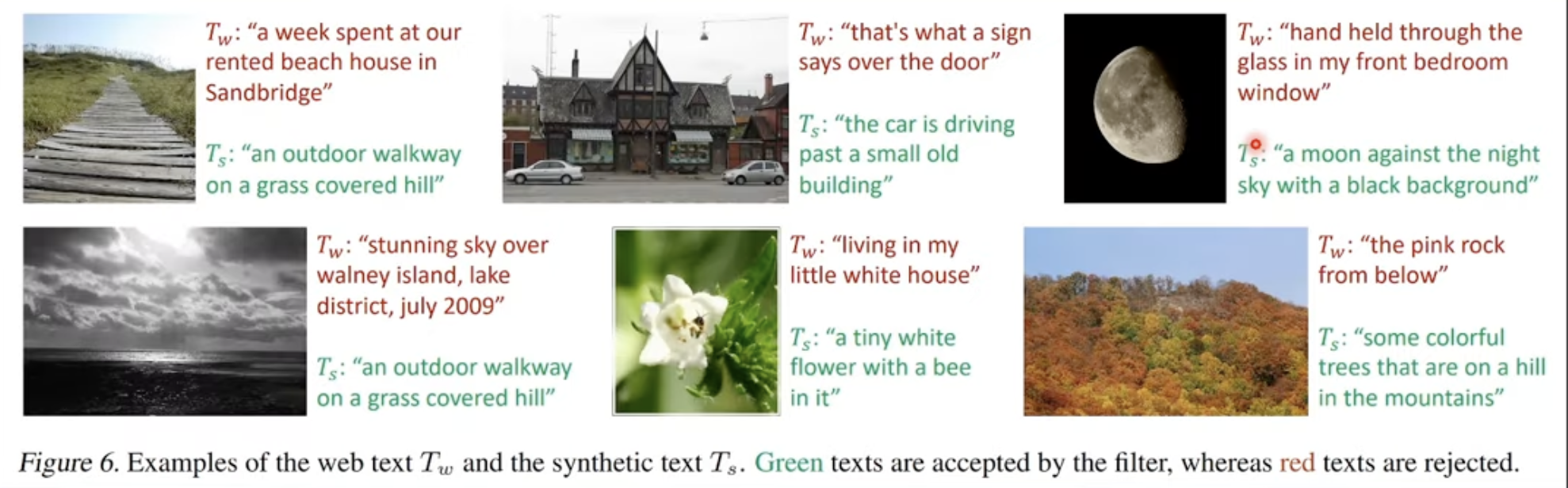

It can be used to replace the caption $T_w$ of a picture scraped from the internet with a synthetic caption $T_s$. The benefit is clear: the original caption may be irrelevant to the image (for example, it may describe personal feelings), while the corrected one is more useful for ML training.
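This caption-correction step (CapFilt in the BLIP paper) can be sketched as follows: a captioner produces a synthetic caption $T_s$ for each web image, and a filter (BLIP's ITM head) drops any caption, web or synthetic, that it judges not to match the image. `captioner`, `itm_score`, the 0.5 threshold, and the keyword-based toy stand-ins are all hypothetical.

```python
from typing import Callable, List, Tuple


def capfilt(
    pairs: List[Tuple[str, str]],
    captioner: Callable[[str], str],
    itm_score: Callable[[str, str], float],
    threshold: float = 0.5,
) -> List[Tuple[str, str]]:
    """pairs holds (image, web caption T_w); returns the (image, caption) pairs that survive filtering."""
    cleaned = []
    for image, web_caption in pairs:
        synthetic_caption = captioner(image)              # T_s
        for caption in (web_caption, synthetic_caption):
            if itm_score(image, caption) >= threshold:    # filter: keep only captions judged to match
                cleaned.append((image, caption))
    return cleaned


# Toy stand-ins: an "image" is just an id with a known set of objects.
IMAGE_OBJECTS = {"img1": {"dog", "beach"}, "img2": {"cat", "sofa"}}
TOY_CAPTIONS = {"img1": "a dog running on the beach", "img2": "a cat sleeping on a sofa"}


def toy_itm_score(image: str, text: str) -> float:
    """Fraction of the image's objects mentioned in the text."""
    words = set(text.lower().split())
    return len(words & IMAGE_OBJECTS[image]) / len(IMAGE_OBJECTS[image])


web_pairs = [("img1", "best day ever!!"), ("img2", "my cat on the sofa")]
print(capfilt(web_pairs, lambda image: TOY_CAPTIONS[image], toy_itm_score))
```

Here the "personal feelings" caption for `img1` is dropped and replaced by the synthetic one, while both captions for `img2` are kept.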

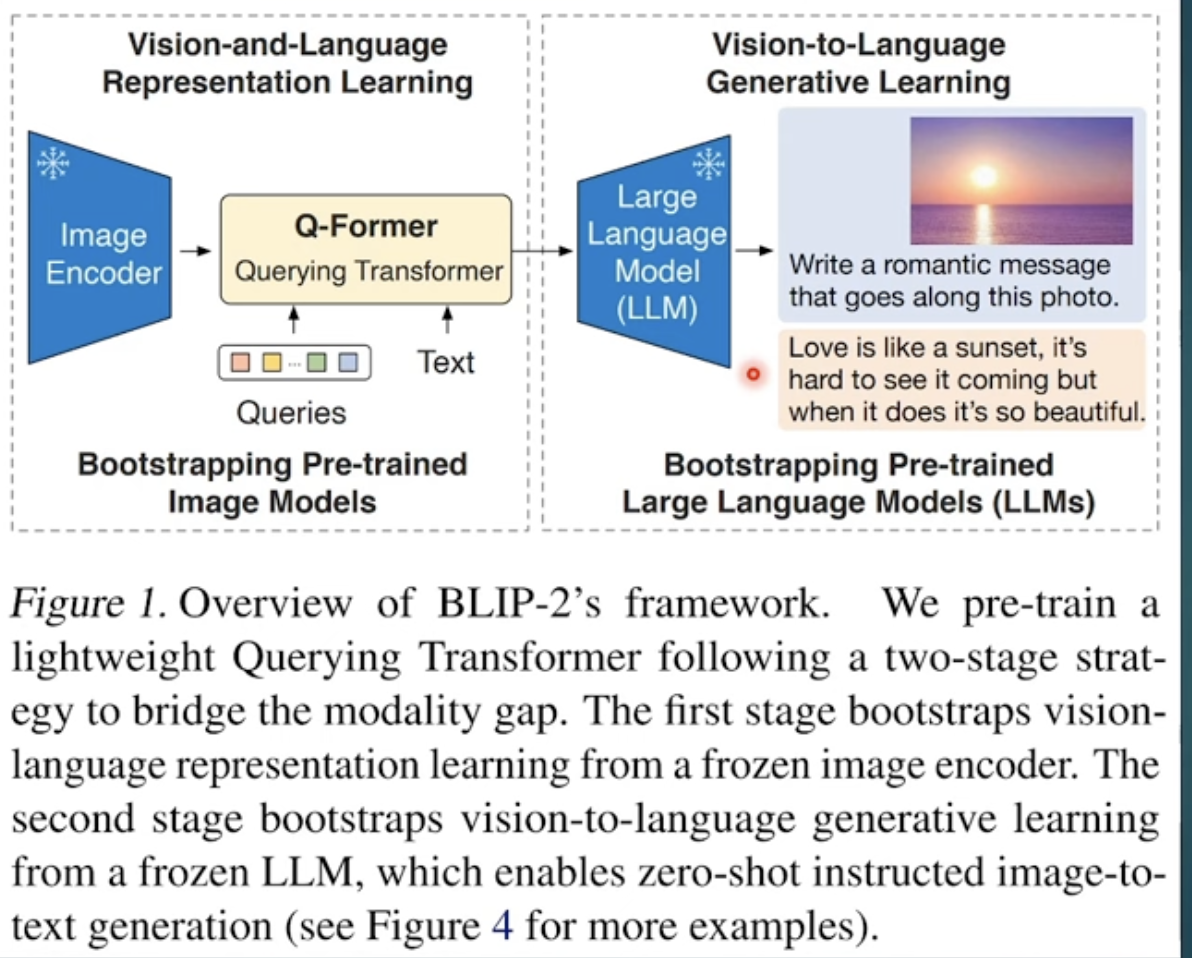

3 BLIP-2

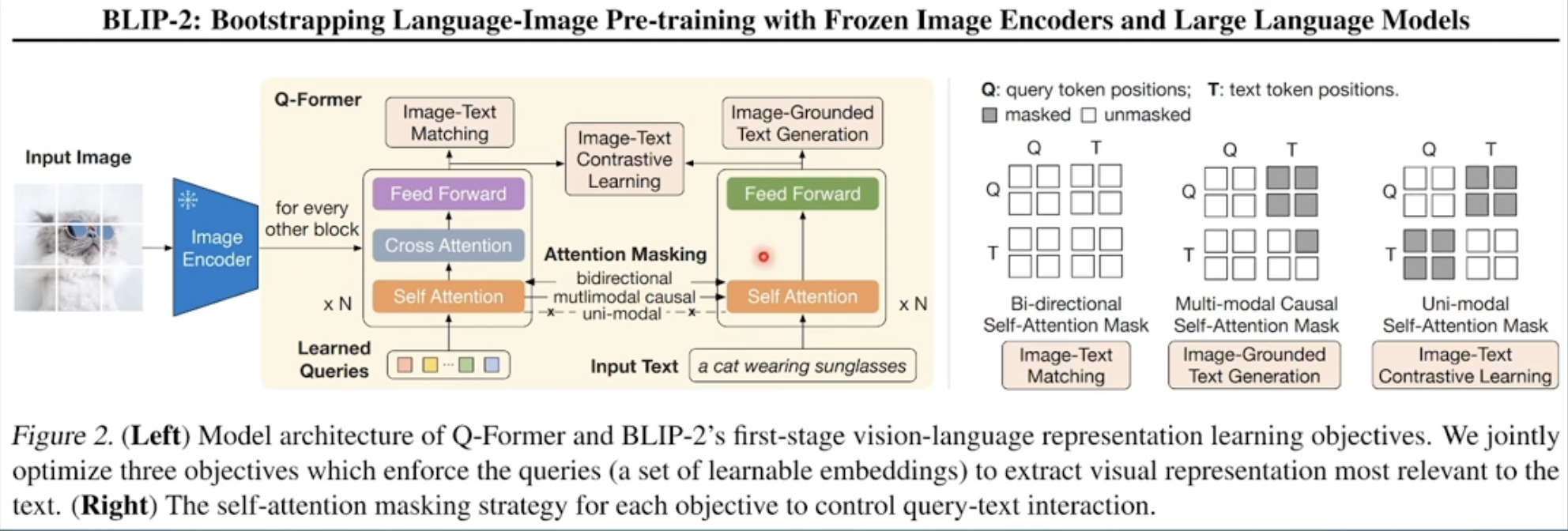

The Querying Transformer (Q-Former) is the main contribution of BLIP-2.
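The core idea of the Q-Former can be shown with one cross-attention step: a fixed set of learnable query vectors attends over the frozen image encoder's patch features, so the output always has the same number of vectors no matter how many patches the image has. BLIP-2 uses 32 queries; the feature sizes below are toy values, and the real Q-Former is a full transformer (self-attention among queries, feed-forward layers, a text branch) rather than this single-head sketch. All names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_QUERIES = 32           # BLIP-2 uses 32 learned queries
D_MODEL, D_IMAGE = 64, 96  # toy sizes, not BLIP-2's


def cross_attention(queries, image_feats, w_q, w_k, w_v):
    """Single-head cross-attention: learned queries read from frozen image tokens."""
    q = queries @ w_q                              # (num_queries, D_MODEL)
    k = image_feats @ w_k                          # (num_patches, D_MODEL)
    v = image_feats @ w_v                          # (num_patches, D_MODEL)
    scores = q @ k.T / np.sqrt(q.shape[-1])        # (num_queries, num_patches)
    scores -= scores.max(axis=-1, keepdims=True)   # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)       # softmax over image patches
    return attn @ v                                # (num_queries, D_MODEL)


# Trainable parameters (randomly initialised here); the image encoder stays frozen.
queries = rng.normal(scale=0.02, size=(NUM_QUERIES, D_MODEL))
w_q = rng.normal(scale=D_MODEL ** -0.5, size=(D_MODEL, D_MODEL))
w_k = rng.normal(scale=D_IMAGE ** -0.5, size=(D_IMAGE, D_MODEL))
w_v = rng.normal(scale=D_IMAGE ** -0.5, size=(D_IMAGE, D_MODEL))

# Stand-in for the frozen image encoder's output, with a varying number of patch tokens.
for num_patches in (257, 50):
    image_feats = rng.normal(size=(num_patches, D_IMAGE))
    out = cross_attention(queries, image_feats, w_q, w_k, w_v)
    print(num_patches, "->", out.shape)  # always (32, 64)
```

This fixed-size output is what makes the Q-Former a bottleneck between a frozen image encoder and a frozen LLM.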

First stage: vision-language representation learning with a frozen image encoder.
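In the first stage, the BLIP-2 paper trains the Q-Former with three objectives (ITC, ITM, and image-grounded text generation) that share parameters but use different self-attention masks between the queries and the text tokens. A sketch of those masks, assuming a layout with query tokens first and text tokens after them; `mask[i, j] == True` means token `i` may attend to token `j`, and the function name is hypothetical.

```python
import numpy as np


def qformer_attention_mask(num_queries, num_text, objective):
    """Boolean (L, L) mask with L = num_queries + num_text.

    Positions [0, num_queries) are learned queries, the rest are text tokens.
    mask[i, j] is True when token i may attend to token j.
    """
    n = num_queries + num_text
    is_query = np.arange(n) < num_queries
    if objective == "itc":   # uni-modal: queries and text cannot see each other
        return is_query[:, None] == is_query[None, :]
    if objective == "itm":   # bi-directional: every token sees every token
        return np.ones((n, n), dtype=bool)
    if objective == "itg":   # multimodal causal: text sees all queries and earlier text
        causal = np.tril(np.ones((n, n), dtype=bool))
        text_to_text = ~is_query[:, None] & ~is_query[None, :] & causal
        return is_query[None, :] | text_to_text  # queries never see text
    raise ValueError(f"unknown objective: {objective!r}")


print(qformer_attention_mask(2, 3, "itg").astype(int))
# [[1 1 0 0 0]
#  [1 1 0 0 0]
#  [1 1 1 0 0]
#  [1 1 1 1 0]
#  [1 1 1 1 1]]
```

In the generation mask, queries never see the text, so whatever the text needs from the image must pass through the queries.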

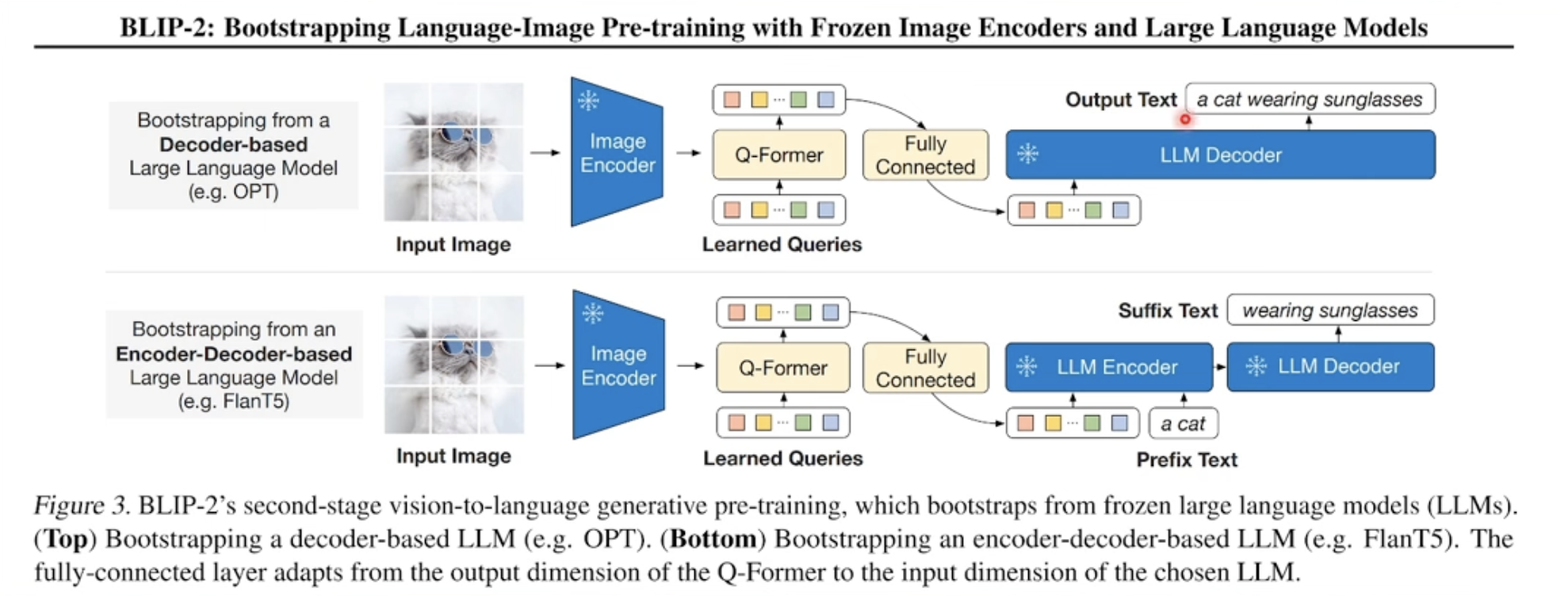

Second stage: vision-to-language generative learning with a frozen LLM.
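In the second stage, the Q-Former's output query vectors are linearly projected into the frozen LLM's input embedding space and prepended to the text embeddings as soft visual prompts. Below is a sketch of the decoder-only case with toy sizes and random weights; `build_llm_inputs`, `lm_loss_mask`, and the dimensions are illustrative assumptions, and the LLM itself is not shown.

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_QUERIES, D_QFORMER, D_LLM, NUM_TEXT = 32, 64, 128, 5  # toy sizes


def build_llm_inputs(query_out, w_proj, b_proj, text_embeds):
    """Project Q-Former outputs into the LLM embedding space and prepend them to the text."""
    visual_prompts = query_out @ w_proj + b_proj                   # (NUM_QUERIES, D_LLM)
    return np.concatenate([visual_prompts, text_embeds], axis=0)  # (NUM_QUERIES + T, D_LLM)


def lm_loss_mask(num_queries, num_text):
    """Only text tokens are prediction targets; visual prompt positions carry no loss."""
    return np.concatenate([np.zeros(num_queries, dtype=bool), np.ones(num_text, dtype=bool)])


query_out = rng.normal(size=(NUM_QUERIES, D_QFORMER))                  # from the stage-1 Q-Former
w_proj = rng.normal(scale=D_QFORMER ** -0.5, size=(D_QFORMER, D_LLM))  # trainable FC layer
b_proj = np.zeros(D_LLM)
text_embeds = rng.normal(size=(NUM_TEXT, D_LLM))                       # from the frozen LLM's embedding table

inputs = build_llm_inputs(query_out, w_proj, b_proj, text_embeds)
print(inputs.shape, lm_loss_mask(NUM_QUERIES, NUM_TEXT).sum())  # (37, 128) 5
```

Only the Q-Former and the projection layer receive gradients here; the LLM stays frozen and simply sees the visual prompts as extra input embeddings.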

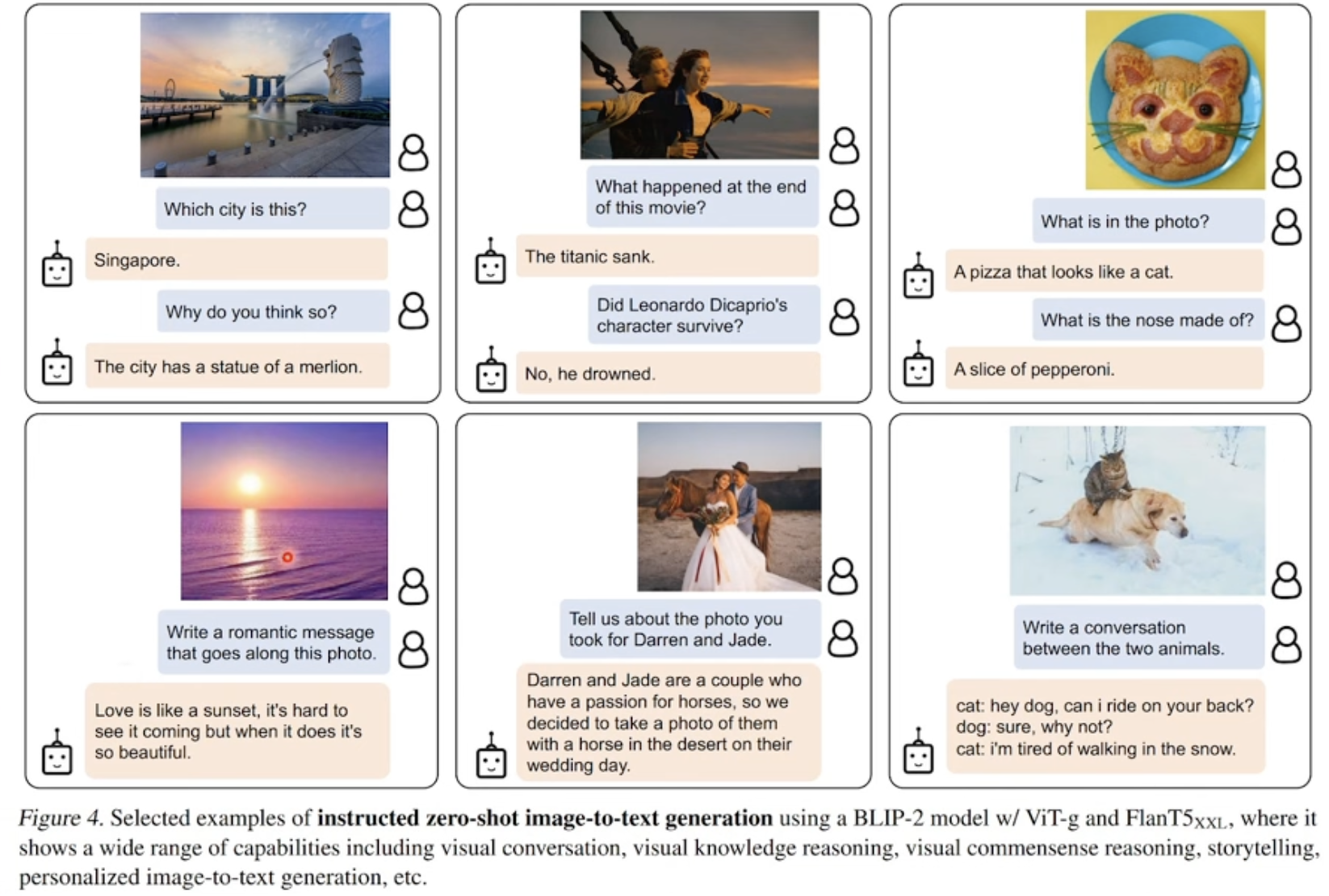

Here are the results of BLIP-2