State Space Model

Structured State Space for Sequence Modeling (S4) paper by Albert Gu et al., 2021

This is a study note based on this blog.

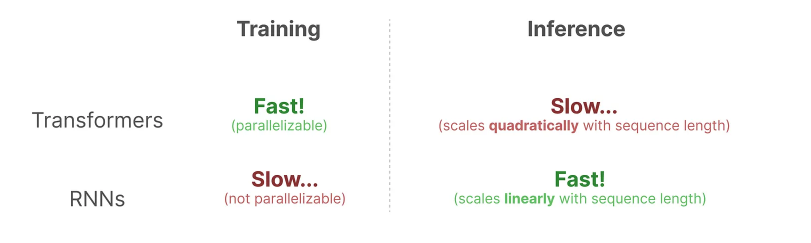

0 The problem

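SSMs are proposed to address the slow inference of Transformers: when generating each new token, attention has to look back over the entire sequence, so the cost per generated token grows with the sequence length.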

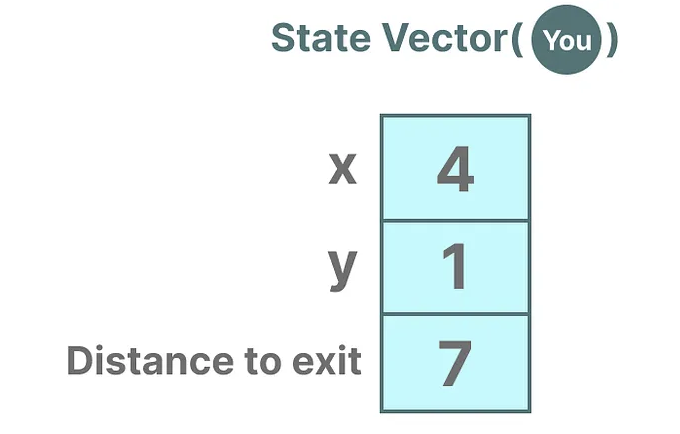

1 State Space

A state space representation describes the state of a system as a vector of variables.

In neural networks, the state is typically the hidden state; in an LLM, it is the representation used to generate the next token.

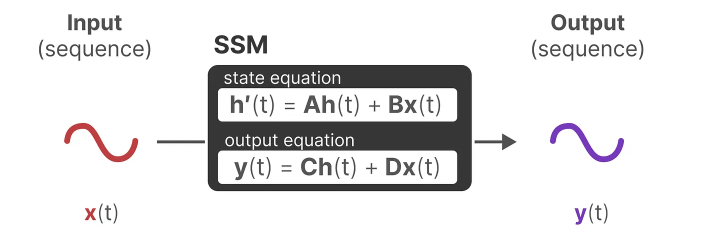

2 State Space Model (SSM)

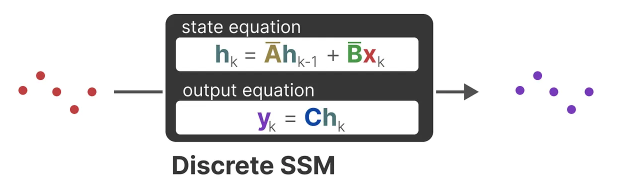

These two equations are the core of the State Space Model.
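For reference, the two equations are usually written as follows (standard notation, assumed to match the figure): h(t) is the state, x(t) the input, y(t) the output, and A, B, C, D are learned matrices.

```latex
\begin{aligned}
h'(t) &= A\,h(t) + B\,x(t) \\
y(t)  &= C\,h(t) + D\,x(t)
\end{aligned}
```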

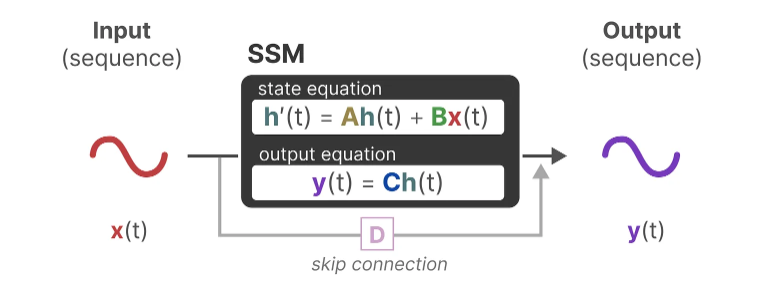

The matrix D acts as a skip connection from the input directly to the output. It is often dropped, which simplifies the output equation to y(t) = Ch(t).

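With discretization, the continuous parameters A and B are converted into discrete versions (Ā and B̄) using a step size Δ, so the model can process discrete inputs such as tokens: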

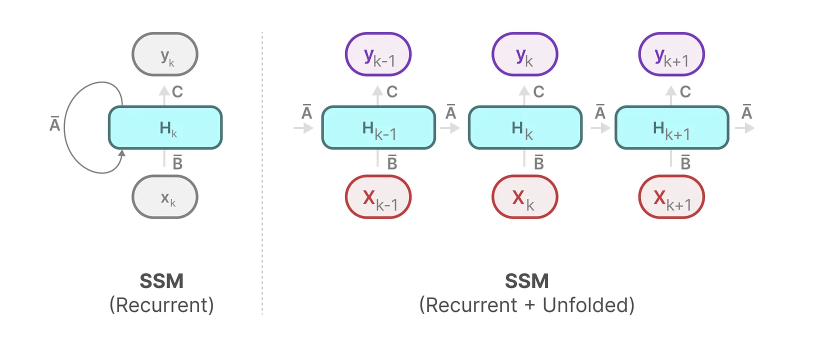
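A minimal sketch of discretization, assuming NumPy. The S4 paper uses the bilinear method, Ā = (I − Δ/2·A)^{-1}(I + Δ/2·A) and B̄ = (I − Δ/2·A)^{-1}ΔB; the figure above may instead show the zero-order hold (ZOH) formulas, so treat this as a swapped-in but closely related scheme that only needs a matrix inverse. `discretize_bilinear` and the toy matrices are hypothetical.

```python
import numpy as np


def discretize_bilinear(A, B, delta):
    """Bilinear (Tustin) discretization of continuous SSM parameters.

    Returns (A_bar, B_bar) such that h_k = A_bar @ h_{k-1} + B_bar @ x_k.
    """
    I = np.eye(A.shape[0])
    # (I - delta/2 * A)^{-1} is shared by both discrete matrices
    inv = np.linalg.inv(I - (delta / 2.0) * A)
    A_bar = inv @ (I + (delta / 2.0) * A)
    B_bar = delta * (inv @ B)
    return A_bar, B_bar


# Toy example (hypothetical values): 2-dimensional state, scalar input, step size 0.1
A = np.array([[-1.0, 0.0], [0.0, -2.0]])
B = np.array([[1.0], [1.0]])
A_bar, B_bar = discretize_bilinear(A, B, delta=0.1)
```

In this scheme C is left unchanged (C̄ = C); only A and B depend on the step size Δ.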

3 RNN and CNN representation

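Once discretized, the SSM is very similar to an RNN: each step k computes the hidden state h_k from the previous state h_{k-1} and the current input x_k, then produces the output y_k from h_k.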

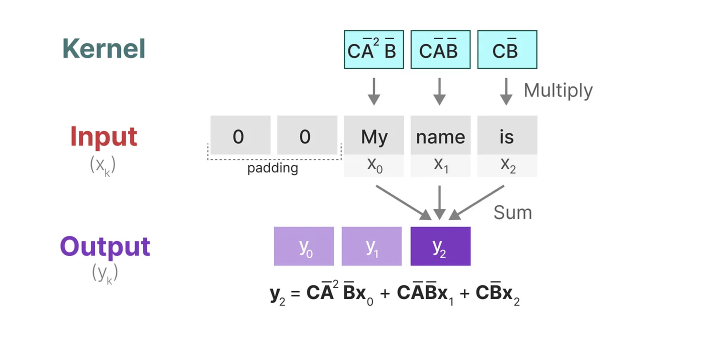
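The recurrent view can be sketched as a plain loop over time steps, assuming NumPy, a single input/output channel, and parameters that are already discretized. The toy values of `A_bar`, `B_bar`, `C` and the name `ssm_recurrent` are hypothetical; the skip connection D is omitted.

```python
import numpy as np


def ssm_recurrent(A_bar, B_bar, C, xs):
    """Run a discretized SSM step by step, like an RNN (one input and one output channel)."""
    h = np.zeros(A_bar.shape[0])  # initial hidden state is all zeros
    ys = []
    for x_k in xs:
        h = A_bar @ h + B_bar[:, 0] * x_k  # h_k = A_bar h_{k-1} + B_bar x_k
        ys.append(float(C[0] @ h))         # y_k = C h_k (no D term)
    return np.array(ys)


# Hypothetical toy parameters (already discretized)
A_bar = np.array([[0.5, 0.0], [0.0, 0.25]])
B_bar = np.array([[1.0], [1.0]])
C = np.array([[1.0, 1.0]])
ys = ssm_recurrent(A_bar, B_bar, C, [1.0, 0.0, 0.0])
```

Each step only touches the fixed-size state h, so the cost per generated token stays constant regardless of how long the sequence already is.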

It can also be represented as a CNN.

Since text is a 1D sequence of tokens, this is a 1D convolution with a 1D kernel.

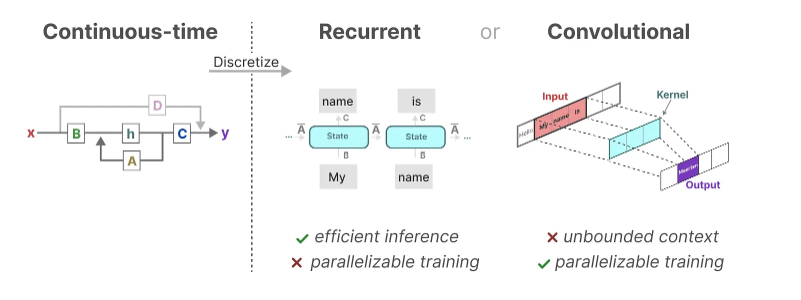

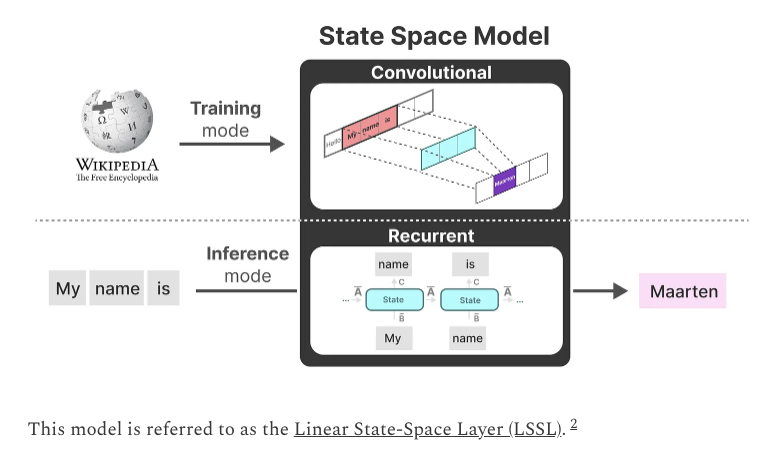
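The convolutional view can be sketched by unrolling the recurrence into a kernel K̄ = (C B̄, C Ā B̄, …, C Ā^{L−1} B̄) and applying it with a causal 1D convolution. This assumes NumPy and the same hypothetical toy parameters as a single-channel example; `ssm_kernel` and `ssm_convolutional` are illustrative names.

```python
import numpy as np


def ssm_kernel(A_bar, B_bar, C, L):
    """Build the 1D SSM kernel (C B_bar, C A_bar B_bar, ..., C A_bar^(L-1) B_bar)."""
    K = []
    A_pow = np.eye(A_bar.shape[0])  # A_bar^0
    for _ in range(L):
        K.append(float((C @ A_pow @ B_bar)[0, 0]))
        A_pow = A_bar @ A_pow       # advance to the next power of A_bar
    return np.array(K)


def ssm_convolutional(A_bar, B_bar, C, xs):
    """Compute all outputs at once: y_k = sum_j K[j] * x[k - j] (causal convolution)."""
    xs = np.asarray(xs, dtype=float)
    K = ssm_kernel(A_bar, B_bar, C, len(xs))
    # np.convolve returns the full convolution; its first len(xs) entries are the causal outputs
    return np.convolve(xs, K)[: len(xs)]


# Hypothetical toy parameters (already discretized)
A_bar = np.array([[0.5, 0.0], [0.0, 0.25]])
B_bar = np.array([[1.0], [1.0]])
C = np.array([[1.0, 1.0]])
ys = ssm_convolutional(A_bar, B_bar, C, [1.0, 2.0, 3.0])
```

Running the step-by-step recurrence on the same input gives the same outputs; the difference is that the kernel can be applied to the whole sequence in parallel during training.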

Now we have three representations: continuous-time, recurrent, and convolutional.

Combining the fast, parallelizable training of the CNN view with the fast inference of the RNN view gives the Linear State Space Layer (LSSL).

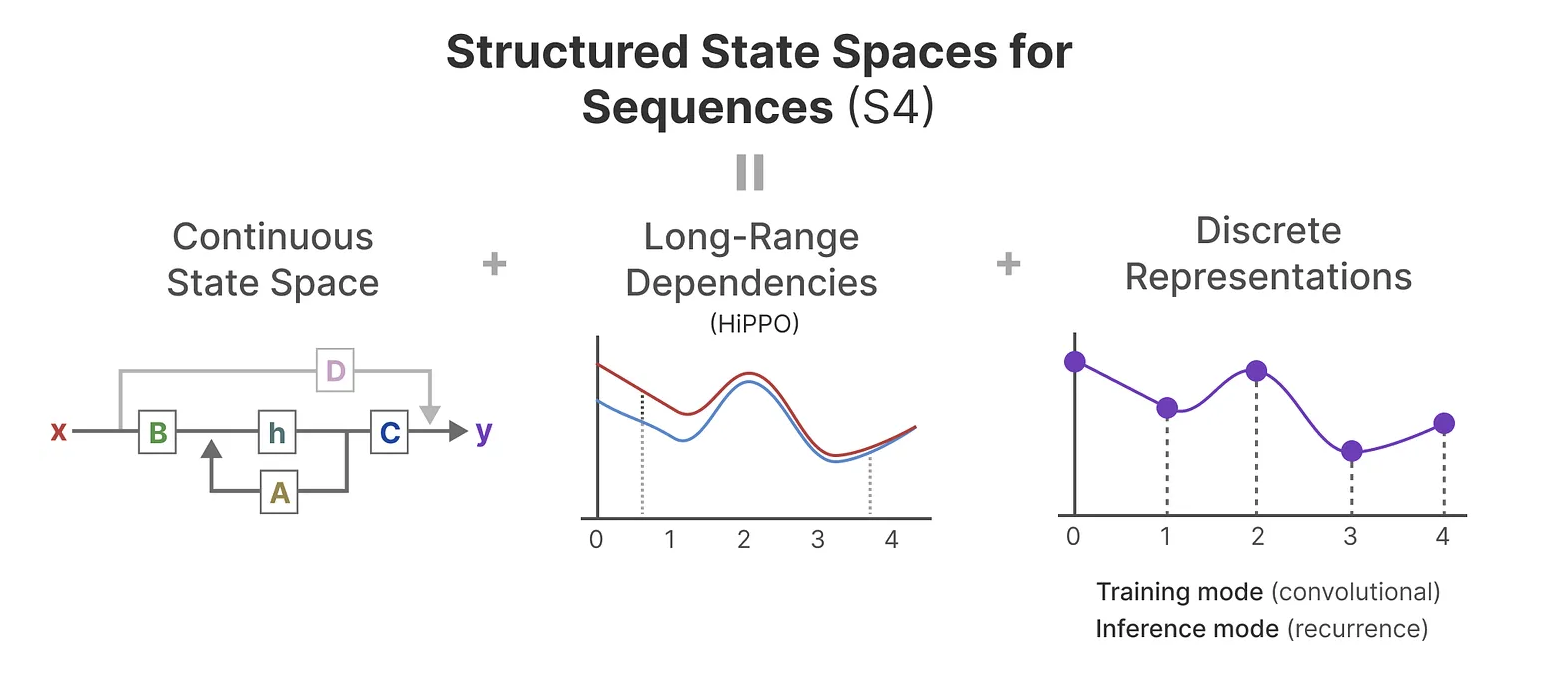

4 HiPPO and S4

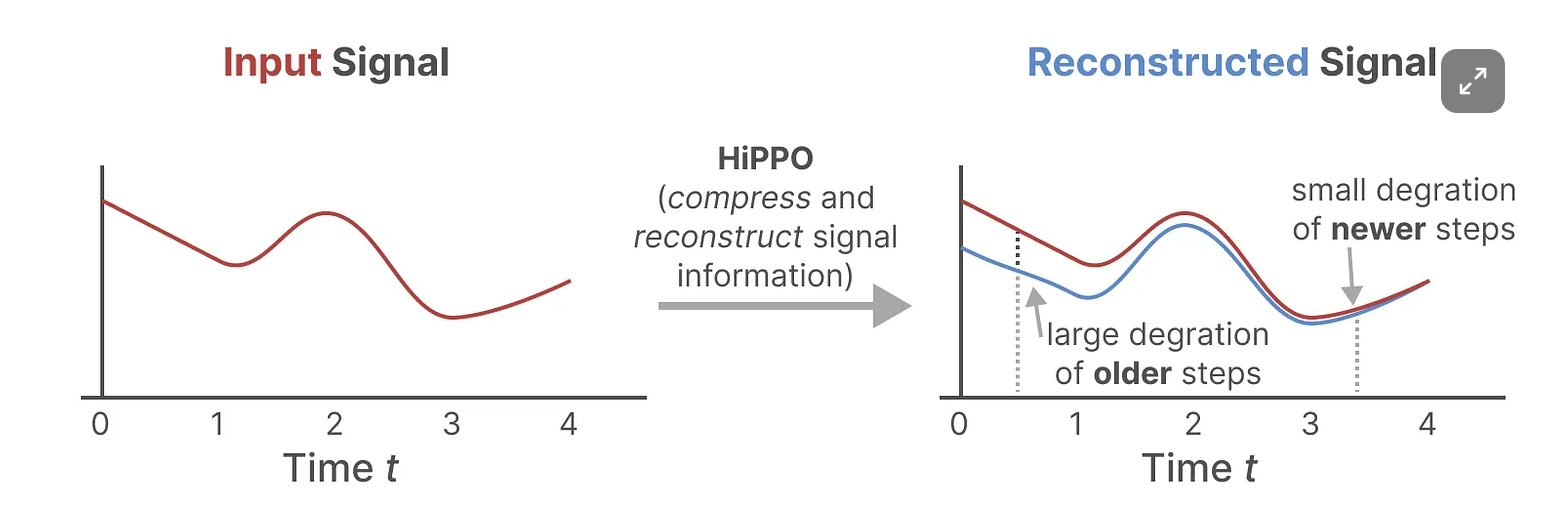

To remember long contexts, HiPPO (High-order Polynomial Projection Operators) is introduced here. The core idea is to focus on recent tokens while still keeping an infinitely long memory of older ones, with older information decaying more strongly.
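As a sketch, assuming NumPy, this builds the HiPPO-LegS matrix that the S4 paper uses to initialize A: A[n, k] = −√(2n+1)·√(2k+1) below the diagonal, −(n+1) on the diagonal, and 0 above it. `hippo_legs` is a hypothetical helper name, and S4's additional structured reparameterization of this matrix is not covered here.

```python
import numpy as np


def hippo_legs(N):
    """HiPPO-LegS matrix A of shape (N, N), as written in the S4 paper.

    A[n, k] = -sqrt(2n+1) * sqrt(2k+1)   if n > k
              -(n + 1)                   if n == k
              0                          if n < k
    """
    A = np.zeros((N, N))
    for n in range(N):
        for k in range(N):
            if n > k:
                A[n, k] = -np.sqrt(2 * n + 1) * np.sqrt(2 * k + 1)
            elif n == k:
                A[n, k] = -(n + 1)
    return A


A = hippo_legs(3)
```

The matrix is lower triangular, so each state dimension is updated only from itself and lower-order dimensions.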

Applying HiPPO to the SSM (to initialize its A matrix) gives S4.

More details will be discussed in the next blog post.

5 H3

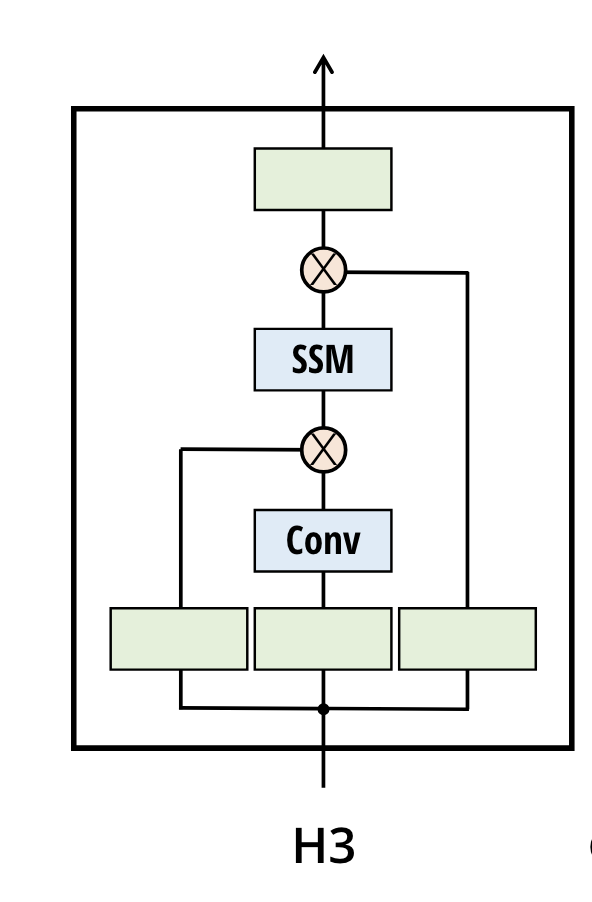

H3 (Hungry Hungry Hippos) is an SSM sandwiched between two gated connections. It also inserts a standard local convolution, which the authors frame as a shift-SSM, before the main SSM layer.
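A single-channel sketch of this structure, assuming NumPy and the formulation Q ⊙ SSM_diag(SSM_shift(K) ⊙ V) from the H3 paper. The shift-SSM is modeled as a short causal convolution and the main SSM as a diagonal recurrence. All names and toy values are hypothetical; the real model uses learned projections for q, k, v, multiple channels and heads, and an output projection.

```python
import numpy as np


def shift_ssm(u, kernel):
    """Shift-SSM treated as a short causal 1D convolution: y_t = sum_j kernel[j] * u[t - j]."""
    u = np.asarray(u, dtype=float)
    return np.convolve(u, kernel)[: len(u)]


def diag_ssm(u, a_bar, b_bar, c):
    """Diagonal SSM as a recurrence: h_t = a_bar * h_{t-1} + b_bar * u_t, y_t = c . h_t."""
    h = np.zeros_like(a_bar, dtype=float)
    ys = []
    for u_t in u:
        h = a_bar * h + b_bar * u_t  # elementwise update because A_bar is diagonal
        ys.append(float(c @ h))
    return np.array(ys)


def h3_channel(q, k, v, shift_kernel, a_bar, b_bar, c):
    """One channel of an H3-style block: Q * diag_ssm(shift_ssm(K) * V)."""
    q, v = np.asarray(q, dtype=float), np.asarray(v, dtype=float)
    gated = shift_ssm(k, shift_kernel) * v       # first multiplicative gate (with V)
    return q * diag_ssm(gated, a_bar, b_bar, c)  # second multiplicative gate (with Q)


# Hypothetical toy inputs; in the real model q, k, v are learned projections of the input
a_bar, b_bar, c = np.array([0.5]), np.array([1.0]), np.array([1.0])
out = h3_channel(q=[1.0, 1.0, 1.0], k=[1.0, 0.0, 0.0], v=[1.0, 1.0, 1.0],
                 shift_kernel=[0.0, 1.0], a_bar=a_bar, b_bar=b_bar, c=c)
```

With the kernel [0, 1], the shift-SSM simply delays K by one step, and the diagonal SSM then carries that signal forward with decay 0.5.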

H3 later evolved into Mamba. Other architectures, such as Hyena and RWKV (Receptance Weighted Key Value), build on closely related ideas.