LLM Scores Pass@k to Perplexity

Some details about LLM measuring scores

1. pass@k

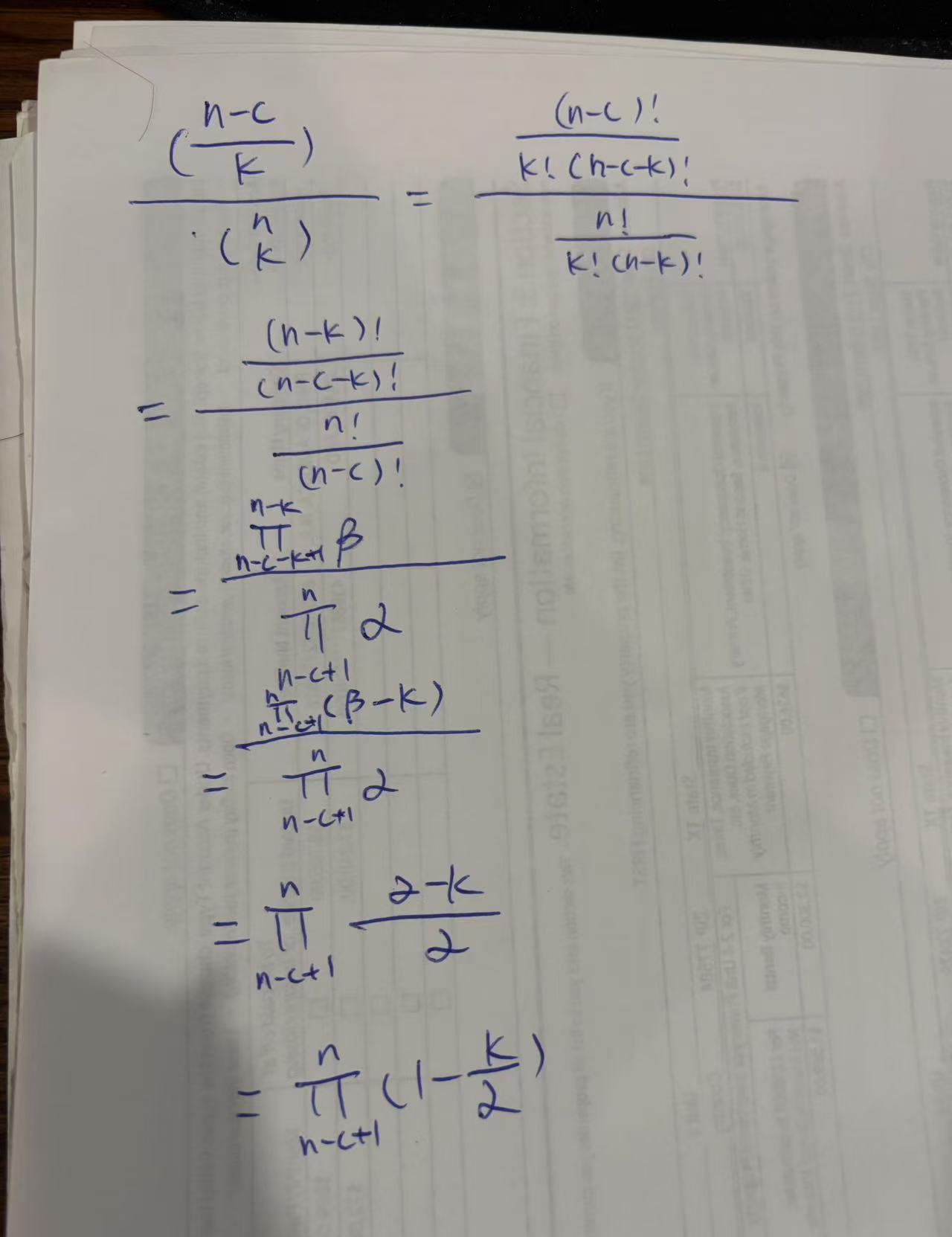

OpenAI proposed pass@k in this paper based on Kulal’s work. The idea is the generates k code solutions per problem and a problem is deemed solved if any of the sample passes the tests;the fraction of solved problems is reported. \(Pass@k=E(1-\frac{n-c\choose k}{n\choose k})\) Basically it calculate the prob. of None of k choices were correct first and get the compliment part of it. The HF implementation is here

def estimator(n: int, c: int, k: int) -> float:

"""Calculates 1 - comb(n - c, k) / comb(n, k)."""

if n - c < k:

return 1.0

return 1.0 - np.prod(1.0 - k / np.arange(n - c + 1, n + 1))

It’s based on the numerical stable solution proposed in the paper, to avoid large numebr calculation for $n\choose k$ and $n-c\choose k$.

2 BLEU score

BLEU(Bilingual Evaluation Understudy) is a way to measure ML predictions on language

For example:

Candidate: Today is Sunny

Refer: Today the weather is Sunny

- Precision measure

Prec = 3/3 = 1

Recall = 3/5 = 0.6 - N-gram

for 2-gram,we have

Prec = 1/2 ([Today is] and [is Sunny])

Recall = 1/4([Today the][the weather][weather is][is Sunny]) - Geometric mean

Get the mean for various n-gram precision (usual 1-4) \(BLEU=\prod_1^N{p_n}^{w_n}\) - Brevity Penalty

For short answers like “Today”, the 1-gram precision is 1. So we take a penalty \(BP=\begin{cases} 1 & \text{if } c > r \\ e^{(1-r/c)} & \text{if } c < r \\ \end{cases}\)

3 ROUGE score

ROUGE(Recall-Oriented Understudy for Gisting Evaluation) is bascially the F1 score for lanuage matching.

- ROUGE-1 is unigrams w prec=1 and recall=0.6, so the F1 is $210.6/(1+0.6)=0.75$

- ROUGE-2 is bigrams w prec=0.5 and recall=0.25, so the F1 is $20.50.25/(0.5+0.25)=0.33$

- ROUGE-L is the calculated based on the LCS(Longest Common Subsequence). Huh, the famouse leedcode problem can be used here!

4 Perplexity

Giving a language model, the probability of the sentence W, which is “a red fox.” is define as:

- $P(W) = P(“a\ red\ fox.”) =

P(“a”) * P(“red” | “a”) * P(“fox” | “a\ red”) * P(“.” | “a\ red\ fox”)$ - We normalize it by geometrical mean.

$Pnorm(W) = P(W) ^ {1 / n}$ - perplexity is just the reciprocal of this number.

$PP(W) = 1 / Pnorm(W)$ - Perplexity can also be derived from entropy H(w).

$PP(W) = 2 ^ {H(W)}$