DeepSeek Conditional Memory: Engram

N-grams are coming back to life with this paper. A detailed code walkthrough of Engram already exists, but I'll defer reading it until later. Here is a high-level overview.

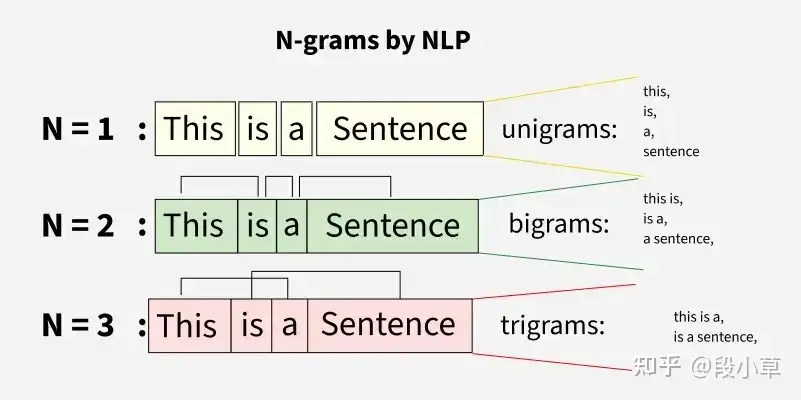

0 N-gram

- N-grams are good at capturing local, fixed patterns in text.

- But they handle long-distance dependencies poorly and suffer from data sparsity.
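A minimal bigram (n = 2) counting model makes both bullets concrete. This is an illustrative sketch, not Engram's code; the function names and toy corpus are hypothetical. The prediction depends only on the single previous token, so the model picks up short local patterns but can never look further back. Any pair absent from the training text also gets probability zero.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token: counts[prev][next]."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def bigram_prob(counts, prev, nxt):
    """Maximum-likelihood P(nxt | prev); returns 0.0 for any pair never seen in training."""
    ctx = counts.get(prev)  # .get avoids creating empty entries in the defaultdict
    if not ctx:
        return 0.0
    return ctx[nxt] / sum(ctx.values())  # Counter returns 0 for unseen nxt

corpus = "the cat sat on the mat the cat ate".split()
counts = train_bigram(corpus)

p_seen = bigram_prob(counts, "the", "cat")    # 2/3: "the" precedes "cat" twice, "mat" once
p_unseen = bigram_prob(counts, "the", "dog")  # 0.0: a plausible pair that never occurred
```

Practical n-gram models soften the zero-probability problem with smoothing or backoff, which mitigates sparsity without removing it.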

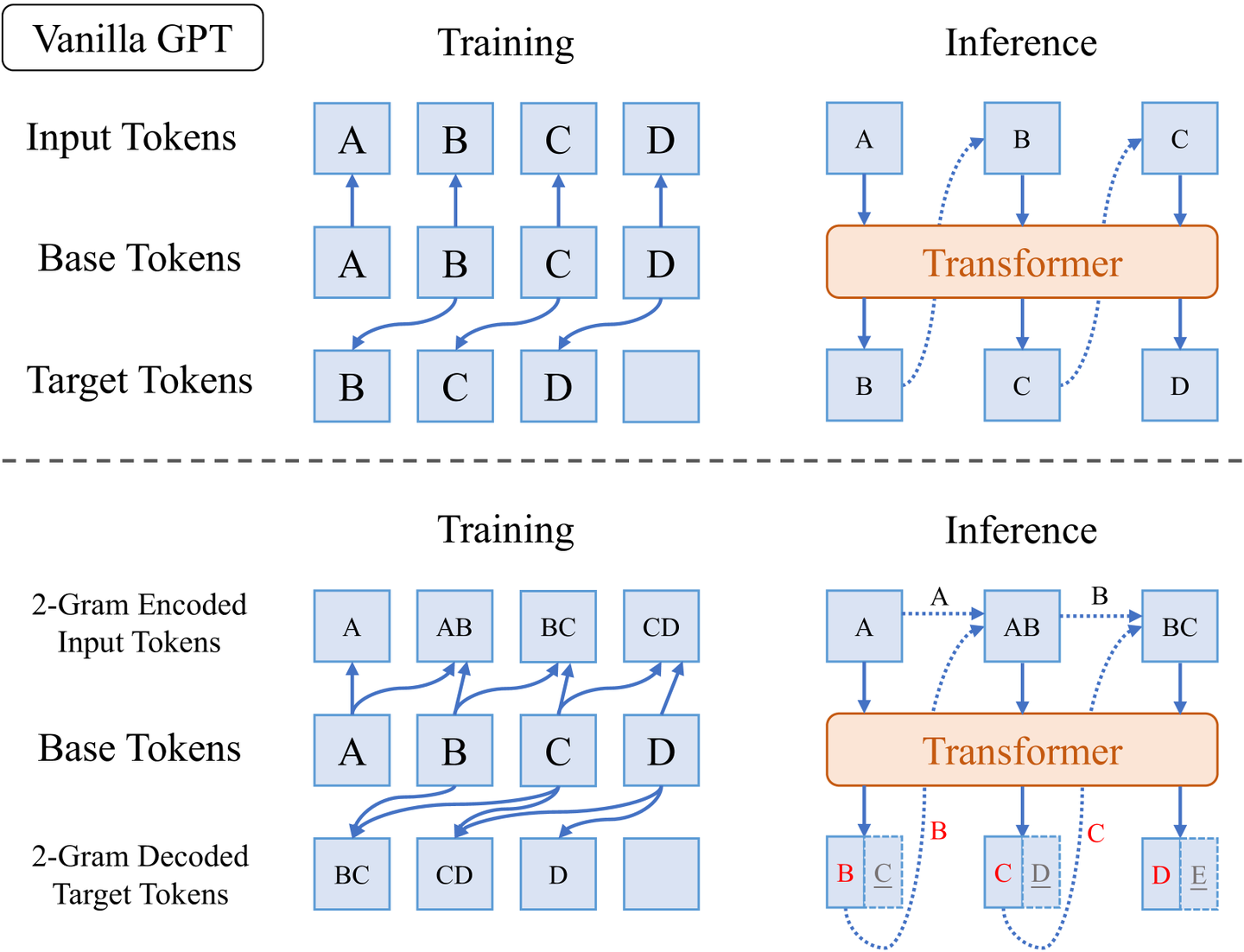

1 Over-Tokenized Transformer

This comes from ByteDance's Seed team. It decouples the input and output vocabularies to improve language-modeling performance.

Over-Encoding + Over-Decoding (MTP, multi-token prediction) = Over-Tokenized Transformer

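The over-encoding half can be pictured as an input embedding that adds a hashed n-gram embedding on top of the ordinary token embedding. The input side then sees a much larger (hashed) vocabulary, while the output head can stay on the base vocabulary. This is a hypothetical, minimal sketch. The table sizes, the base-V modulo hash, and the restriction to bigrams are assumptions for illustration, and the paper's exact indexing and padding scheme may differ.

```python
import random

V = 10   # base vocabulary size (hypothetical, tiny for illustration)
D = 4    # embedding dimension (hypothetical)
M = 97   # rows in the hashed bigram table (hypothetical); M < V*V forces collisions

rng = random.Random(0)
unigram_table = [[rng.gauss(0.0, 1.0) for _ in range(D)] for _ in range(V)]
bigram_table = [[rng.gauss(0.0, 1.0) for _ in range(D)] for _ in range(M)]

def bigram_slot(prev_id, cur_id):
    """Hash the (prev, cur) pair: read it as a base-V number, then fold into M rows.
    Assumed scheme for illustration only."""
    return (prev_id * V + cur_id) % M

def over_encode(token_ids):
    """Per-position input vector = unigram embedding + hashed bigram embedding.
    Position 0 has no predecessor here, so it gets only the unigram term
    (an assumption; a real model might use a padding token instead)."""
    out = []
    for i, tok in enumerate(token_ids):
        vec = list(unigram_table[tok])
        if i > 0:
            row = bigram_table[bigram_slot(token_ids[i - 1], tok)]
            vec = [a + b for a, b in zip(vec, row)]
        out.append(vec)
    return out

embs = over_encode([3, 7, 3])
# Both occurrences of token 3 share a unigram row, but position 2 also adds the
# row for the pair (7, 3), so the two input vectors differ.
```

Growing M enlarges the input vocabulary cheaply, since a table lookup costs the same regardless of table size. Over-decoding on the output side (approximated with MTP) is not shown here.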